Many organizations have multiple applications that are being built independently as a microservices-based platform. By its nature, a microservice platform is a distributed system running on multiple processes or services, across multiple servers or hosts. Building a successful platform on top of microservices requires integrating these disparate services and systems to produce a unified set of functionality.

It is naïve to think that we can choose only one communication style to solve all problems; if integration needs were always the same, there would be only one style and we would all be happy with it. In reality, a single service can communicate using many different styles, each one targeting a different scenario and set of goals. This challenge is what brings me to the topic of this blog post: when and why do we use different communication standards?

Communication Types

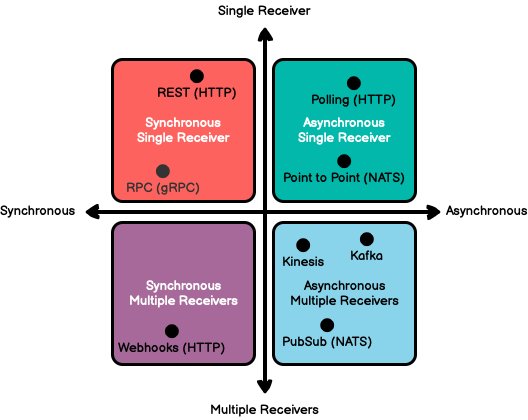

Following Microsoft’s guidance on communication in a microservice architecture, we can begin by classifying the available communication types along two axes. The first axis distinguishes synchronous from asynchronous communication. The second axis captures the number of receivers of a message.

You can use several different technologies and implementations to support synchronous and asynchronous communication to single or multiple receivers. Placing those technologies on our axes gives us a quick overview of the available communication types and when they would be used. As a first step to connecting services together, get familiar with the options at your disposal. The following diagram shows some common communication technologies and where they fit within the spectrum:

Service Independence

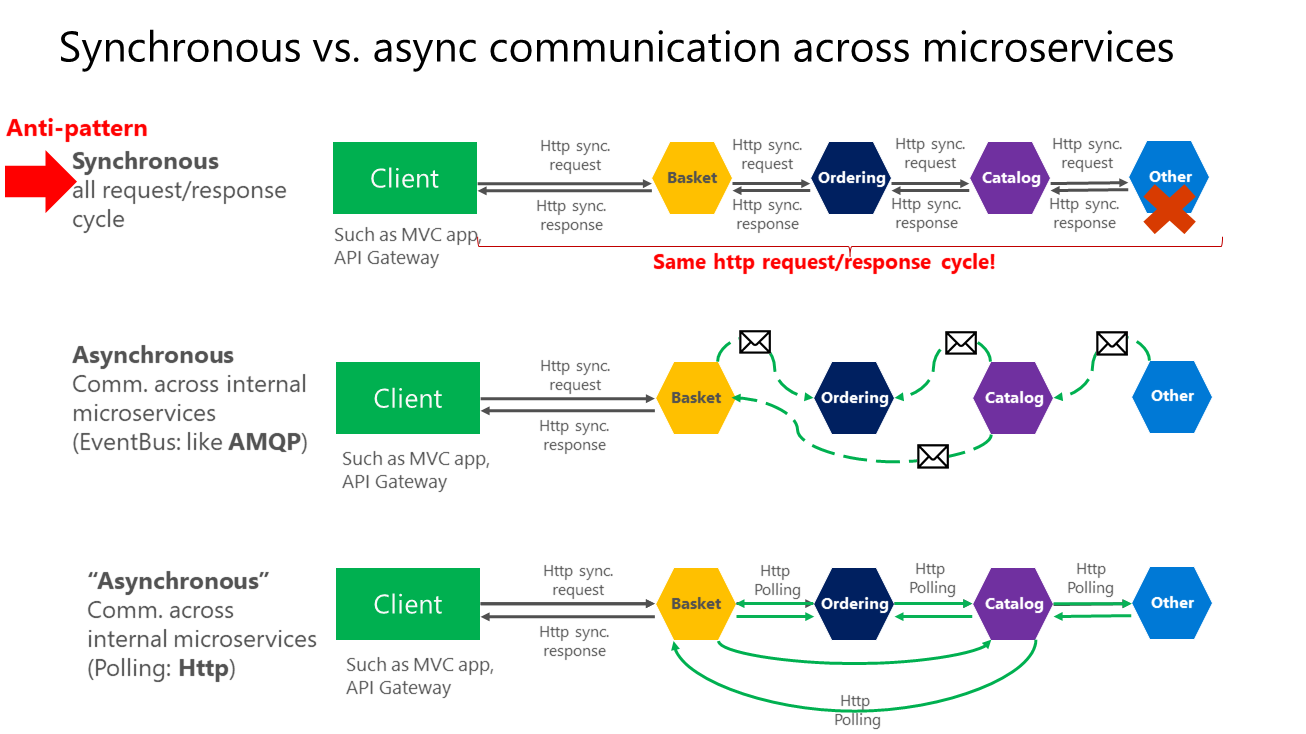

These axes are good to know so you have clarity on the possible communication types, but focusing too tightly on individual technologies risks missing the forest for the trees. The communication type may not be the most important concern when building microservices. What is important is being able to integrate while maintaining the independence of microservices. Rather than worrying about which communication type to use, strive to minimize both the breadth and depth of communication between microservices altogether. The fewer communications between microservices, the better. When you do need to communicate, the critical rule to follow is to avoid chaining multiple synchronous calls between microservices. That doesn’t mean that you have to use a specific protocol (for example, NATS or HTTP). It just means that the communication between microservices should be done by propagating data in parallel, preferably asynchronously, rather than in a synchronous series. Microsoft’s Architecture Guide for .NET classifies chaining synchronous API calls as an anti-pattern, as depicted in the following figure:

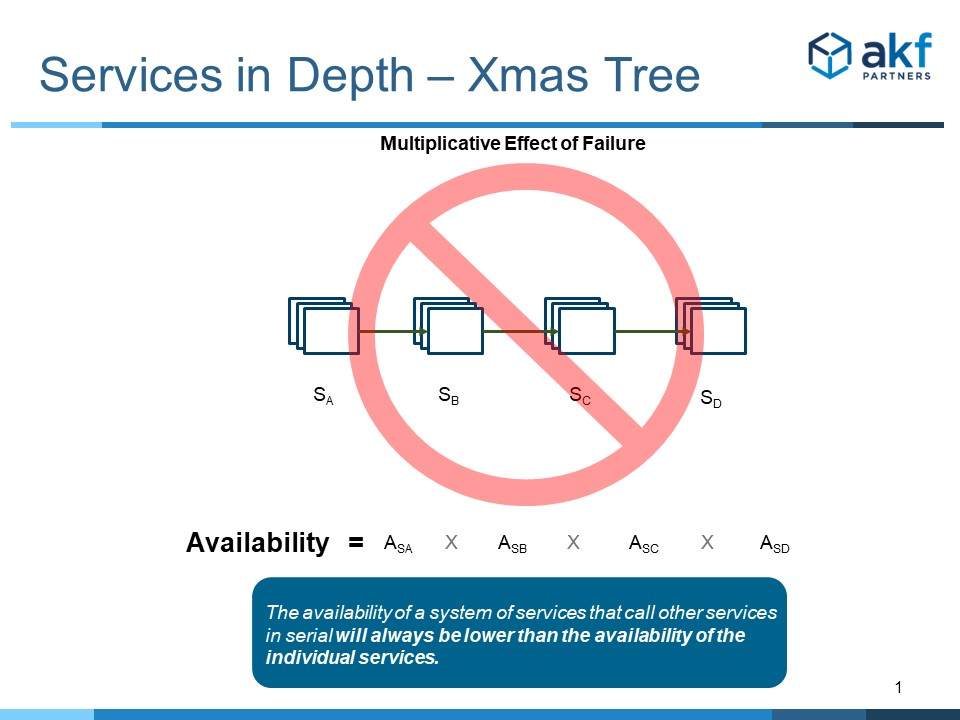

AKF Partners calls multiple connected synchronous calls the Christmas Tree Lights Anti-Pattern: if one bulb fails, the entire chain goes dark.
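To make the cost of a synchronous series concrete, here is a minimal sketch using Python's `asyncio`. The service names and the `call_service` helper are hypothetical stand-ins for remote calls, and the delays simulate network latency. It is a simplification (one caller contacting three services rather than a true A-calls-B-calls-C chain), but it shows the same effect: latencies add up in series, while independent calls issued in parallel cost roughly as much as the slowest one.

```python
import asyncio
import time


async def call_service(name: str, delay: float) -> str:
    """Hypothetical stand-in for a remote call; `delay` simulates latency."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def chained(services: dict[str, float]) -> list[str]:
    """Anti-pattern: each call waits for the previous one, so latencies add up."""
    results = []
    for name, delay in services.items():
        results.append(await call_service(name, delay))
    return results


async def fanned_out(services: dict[str, float]) -> list[str]:
    """Independent calls issued concurrently; results keep the input order."""
    return await asyncio.gather(
        *(call_service(name, delay) for name, delay in services.items())
    )


def timed(coroutine) -> tuple[list[str], float]:
    """Run a coroutine to completion and report how long it took."""
    start = time.perf_counter()
    result = asyncio.run(coroutine)
    return result, time.perf_counter() - start
```

Calling `timed(chained(...))` and `timed(fanned_out(...))` with the same three services of 0.1 seconds each should show the serial version taking about three times as long. Note that a failure in any one call still has to be handled in both versions; running calls in parallel reduces latency, not coupling.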

To address the challenges of API calls in series, there are a few patterns and guidelines that can help us.

Prefer Asynchronous Communication

RESTful HTTP and RPC tend to promote encapsulation by hiding the data structure of a service behind a well-defined API, eliminating the need for a large shared data structure between teams. Unfortunately, relying completely on RESTful HTTP or RPC means applications are still fairly tightly coupled together — the remote calls between services tend to tie the different systems into an ever-growing knot. These types of problems often arise because issues that aren’t significant within a single application become significant problems when integrating multiple applications together.

Asynchronous communication is fundamentally a pragmatic reaction to the problems of distributed systems. Sending a message does not require both systems to be up and available at the same time. Furthermore, thinking about the communication in an asynchronous manner forces developers to recognize that working with a remote application is slower and prone to failure, which encourages design of components with high cohesion (lots of work locally) and low adhesion (selective work remotely).

Enterprise Integration Patterns

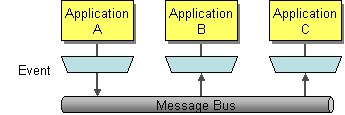

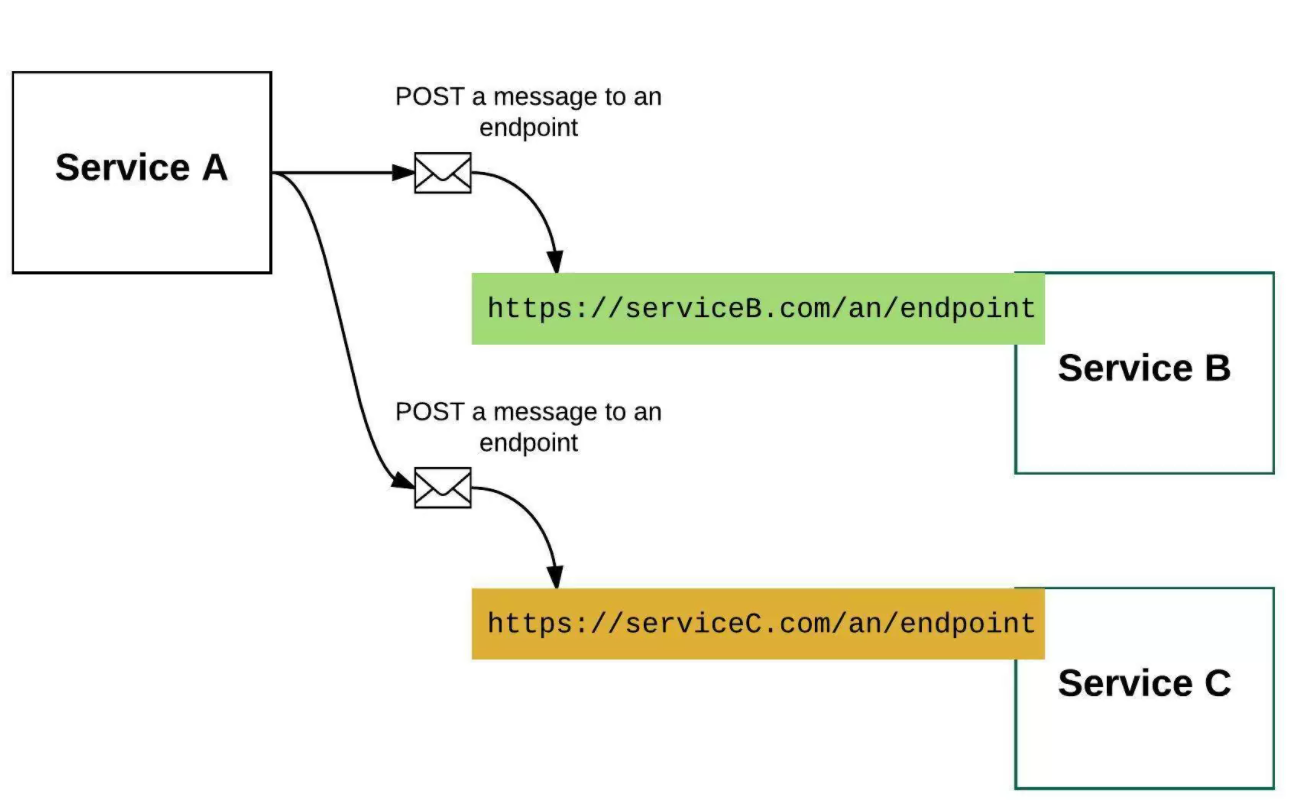

Interestingly, asynchronous communication can happen with or without a messaging system or message bus by leveraging webhooks or long-polling. For example, the following diagram from Enterprise Integration Patterns depicts a typical message bus facilitating communication between multiple services.

This system can be replicated using a technology typically associated with synchronous communication, like HTTP, by registering interested subscribers with a callback URL and using a POST request to publish data to subscribers directly, ignoring the response. This diagram from Why Messaging Queues Suck shows an example of connecting multiple services using webhooks.

In both cases, the key attribute of effective asynchronous communication is that the requesting service must not care whether it receives a response. This allows us to call multiple services in parallel, compose them, or orchestrate them without chaining them together in a long series. If your microservice needs to raise an additional action in another microservice, do it asynchronously if possible (using asynchronous messaging, integration events, queues, and so on). As much as possible, do not invoke the action synchronously as part of the original synchronous request and reply operation.
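The webhook approach described above can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not a production design: `WebhookPublisher`, `http_post`, the topic names, and the callback URLs are all hypothetical, and the publisher deliberately swallows delivery errors to reflect the rule that the sender must not depend on the response.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def http_post(url: str, payload: dict) -> None:
    """POST the payload as JSON and discard whatever the subscriber returns."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5):
        pass


class WebhookPublisher:
    """Hypothetical publisher: subscribers register a callback URL per topic."""

    def __init__(self, send: Callable[[str, dict], None] = http_post) -> None:
        self._send = send  # injectable so the sketch can run without a network
        self._subscribers: dict[str, list[str]] = {}
        self._pool = ThreadPoolExecutor(max_workers=4)

    def subscribe(self, topic: str, callback_url: str) -> None:
        self._subscribers.setdefault(topic, []).append(callback_url)

    def publish(self, topic: str, payload: dict) -> int:
        """Hand each delivery to a background thread; return the subscriber count."""
        urls = list(self._subscribers.get(topic, []))
        for url in urls:
            self._pool.submit(self._deliver, url, payload)
        return len(urls)

    def _deliver(self, url: str, payload: dict) -> None:
        try:
            self._send(url, payload)
        except Exception:
            # Assumption: failures are ignored here; a real system would
            # at least log them and probably retry.
            pass

    def close(self) -> None:
        """Wait for in-flight deliveries to finish."""
        self._pool.shutdown(wait=True)
```

Because `publish` returns as soon as the deliveries are scheduled, a slow or unavailable subscriber does not hold up the publisher or the other subscribers.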

You might use any protocol to communicate and propagate data asynchronously across microservices in order to have eventual consistency. It doesn’t matter. The important rule is to not create synchronous dependencies between your microservices.

Marrying Asynchronous Communication with a RESTful Platform

I mentioned at the beginning of this article that it is naïve to think that one communication style will satisfy all integration needs. A great example, and an important part of many companies’ strategy, is the RESTful HTTP API. REST over HTTP is one of the most widely used communication styles in the world, which makes it a key part of many organizations’ initiatives.

If we prefer asynchronous communication, but require RESTful HTTP, how can the two coexist? My suggestion is to leverage RESTful HTTP at the boundaries of your system using an API Gateway or Backends for Frontends pattern. With these patterns, RESTful HTTP-based APIs serve as the entry point and interaction point between logical boundaries within the system. These APIs should be well-defined, clearly documented, stable, and suitable for both internal and external use.

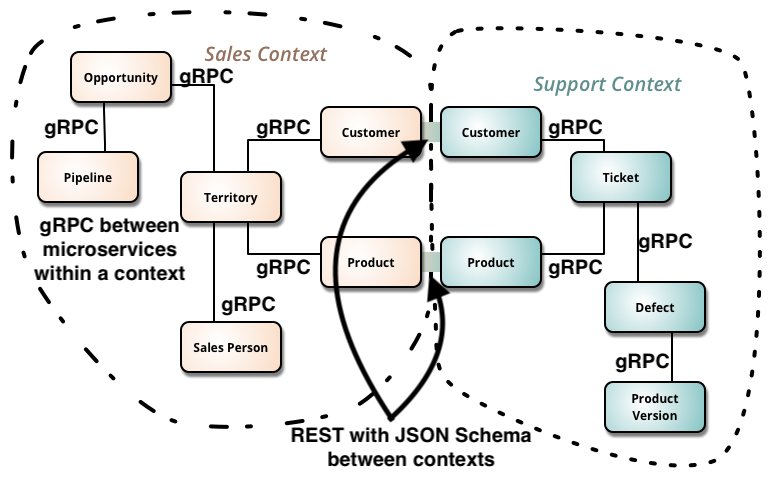

The following example, from APIs You Won’t Hate, shows how the boundaries between two different systems interact over a clear and stable RESTful API, while the individual microservices within each boundary leverage an RPC protocol.

Things within the context can treat their own APIs like “private classes” in programming languages, they can change whenever they want, spin up and down, delete, evolve, change, who cares. When going to another context … [we should] probably use things like REST (with Hypermedia and JSON Schema) to help those clients last longer without needing developer involvement for most changes.

A key point in the diagram is that both systems have services that represent the same concept (Customer and Product). The DRY purists among us might scoff at this or want to fix it. Resist this urge! Instead, treat these points of commonality as the integration points between two systems. Each system is free to modify its view of what a Customer or Product is without affecting the other system, and they can synchronize this view as needed through their respective APIs.

With this model, service communication internal to your system can use a flexible choice of patterns and protocols, but when you are starting to expose APIs to developers that you are not in close communication with (and that may also have other priorities), the additional level of abstraction of an HTTP-based API becomes a lot more useful.

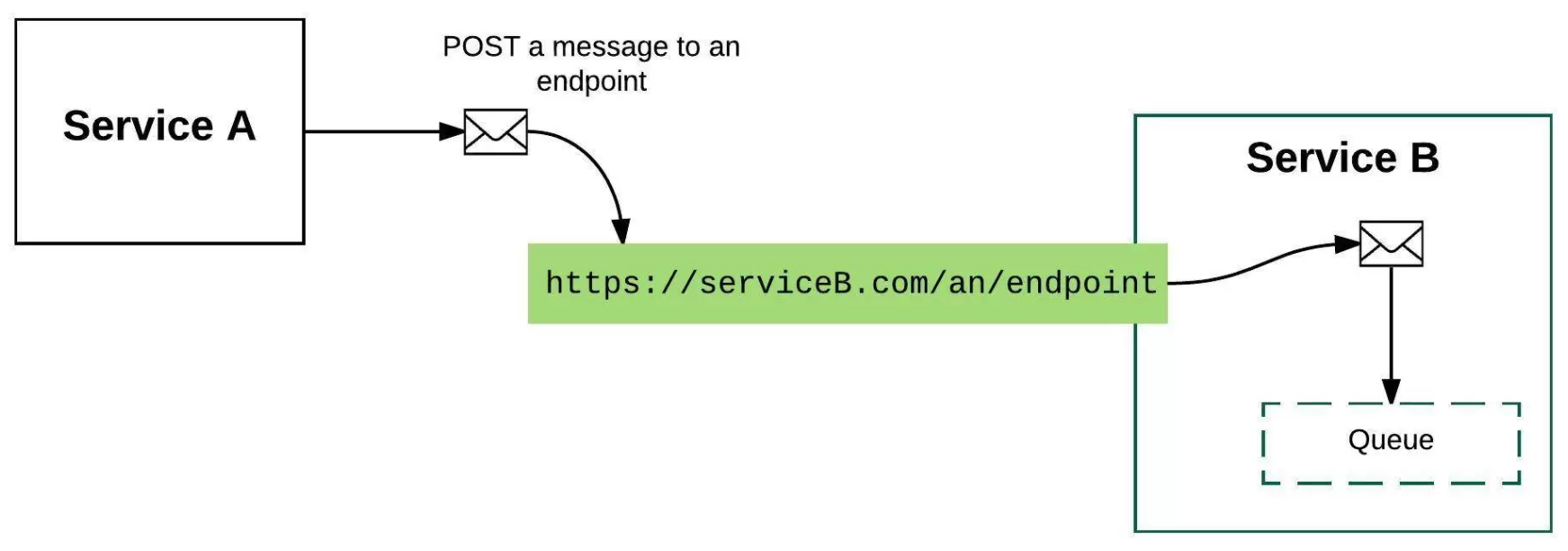

The Place for Queues is in the Consumer

Now what about queueing? Queues have many uses, but leveraging them as a global message bus might not be one of them. When integrating with services, you can treat your job as delivering information. It is the service’s job to consume it. Queues are a detail of the consumer, as depicted by this diagram from Why Messaging Queues Suck.

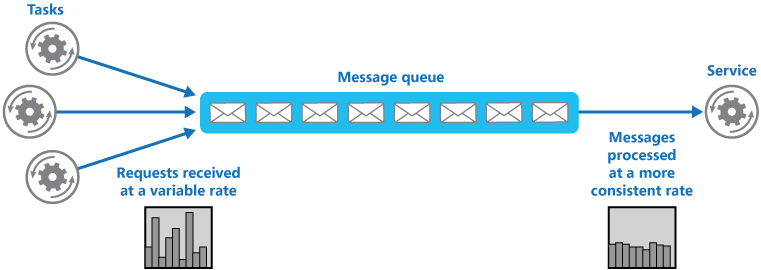

In this case, a queue acts as a buffer between the API that you expose and the service that processes the data. The queue helps to smooth intermittent heavy loads that can cause the service to fail or the task to time out. This can help to minimize the impact of peaks in demand on availability and responsiveness for both the API and the service. The queue decouples the tasks from the service, and the service can handle the messages at its own pace regardless of the volume of requests from concurrent tasks.

Using a queue to help with message consumption is a great example of asynchronous design that also helps a system scale to process multiple messages concurrently, optimizing throughput, improving scalability and availability, and balancing the workload. Microsoft calls this pattern Queue-Based Load Leveling.
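As a rough illustration of a queue living inside the consumer, the sketch below puts a bounded in-memory queue between an `accept` method (standing in for the exposed API) and a single worker thread (standing in for the service). The class name, the `max_pending` limit, and the choice to reject work when the buffer is full are assumptions made for the example; a real deployment would more likely use a durable queue.

```python
import queue
import threading
from typing import Any, Callable


class LoadLevelingBuffer:
    """Hypothetical consumer-side buffer between an API and its worker."""

    def __init__(self, handler: Callable[[Any], None], max_pending: int = 100) -> None:
        self._queue: queue.Queue = queue.Queue(maxsize=max_pending)
        self._handler = handler
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def accept(self, message: Any) -> bool:
        """API side: enqueue and return at once. False means the buffer is full."""
        try:
            self._queue.put_nowait(message)
            return True
        except queue.Full:
            return False

    def _drain(self) -> None:
        """Service side: process messages one at a time, at the worker's own pace."""
        while True:
            message = self._queue.get()
            try:
                self._handler(message)
            except Exception:
                # Assumption: failed messages are dropped; a real service
                # would log them or move them to a dead-letter queue.
                pass
            finally:
                self._queue.task_done()

    def wait_until_idle(self) -> None:
        """Block until every accepted message has been handled."""
        self._queue.join()
```

A burst of calls to `accept` returns almost immediately even when the handler is slow, which is the smoothing effect described above. Adding more worker threads that read from the same queue would turn this into competing consumers, at the cost of losing strict processing order.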

Events Go in an Event Stream

In an event-driven system, events are delivered in near real time, so consumers can respond immediately to events as they occur. Producers are decoupled from consumers — a producer doesn’t know which consumers are listening. Consumers are also decoupled from each other, and every consumer sees all of the events. This scenario differs slightly from queueing, where consumers pull messages from a queue and a message is processed by a single consumer.

Event-driven systems are best used in a few different scenarios:

- You have multiple consumers that each must process the same set of events.

- You have a need for real-time processing with minimum time lag.

- You have complex event processing, such as pattern matching or aggregation over time windows.

- You are building an event sourced application where events, rather than data, are the source of truth for application state.

The downside to event-driven systems is that they are typically more complex than simple CRUD applications; you need to decide if the extra complexity is worth the benefit.

Event-driven systems can be built over either a publish-subscribe model or an event stream model:

- Publish-subscribe messaging with guaranteed delivery: The messaging infrastructure keeps track of subscriptions. When an event is published, it sends the event to each subscriber. After an event is received, it cannot be replayed, and new subscribers do not see the event.

- Event streaming: Events are written to a log. Events are strictly ordered (within a partition) and durable. Clients don’t subscribe to the stream; instead, a client can read from any part of the stream. The client is responsible for advancing its position in the stream. That means a client can join at any time, and can replay events.
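The difference between the two models can be sketched with small in-memory stand-ins. The classes below (`PubSubTopic`, `EventLog`, `LogReader`) are hypothetical and leave out durability, partitions, and delivery guarantees entirely; they only illustrate who tracks what. In the publish-subscribe version the infrastructure pushes to current subscribers, while in the streaming version each client owns its offset.

```python
from typing import Callable


class PubSubTopic:
    """Publish-subscribe: each event is pushed to whoever is subscribed right now."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[str], None]] = []

    def subscribe(self, callback: Callable[[str], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, event: str) -> None:
        for callback in self._subscribers:
            callback(event)  # nothing is retained after delivery


class EventLog:
    """Event stream: an append-only, ordered log that clients read by offset."""

    def __init__(self) -> None:
        self._events: list[str] = []

    def append(self, event: str) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read(self, offset: int, limit: int = 10) -> list[str]:
        return self._events[offset:offset + limit]


class LogReader:
    """A client that is responsible for advancing its own position."""

    def __init__(self, log: EventLog, offset: int = 0) -> None:
        self.log = log
        self.offset = offset

    def poll(self) -> list[str]:
        events = self.log.read(self.offset)
        self.offset += len(events)
        return events
```

A subscriber added to `PubSubTopic` after an event was published never sees that event, whereas a `LogReader` created later can start at offset 0 and read the full history, or reset its offset to replay.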

For consumers of events, integration is done by subscribing to the pub/sub topic or event stream of interest, leading to another asynchronous communication pattern: listening to a stream of events.

Putting it All Together — A Short Example

Phew! If you are keeping track, we’ve covered synchronous communication using REST and RPC and asynchronous communication using a message bus, queueing, and event streams. We also talked about how, in practice, you need more than one of these communication types to build a full-featured service. So how do you choose? We’ve covered some guidelines already, but it is useful to look at a practical example of how you can structure your application to take advantage of the different communication options. This example begins as you should, developing your application as a single service and later creating multiple services as complexity increases.

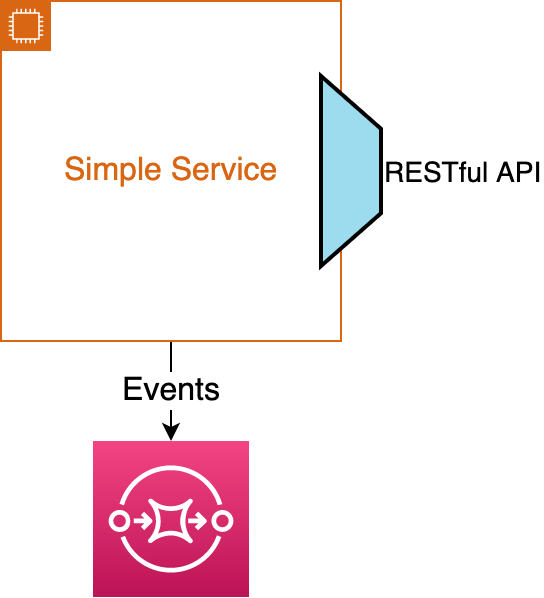

A Simple RESTful Service

The starting point for thinking about communication is a single service. In this case, the best path forward is to create a simple RESTful API that matches our external API standards and use that as the API for your service. Choosing a RESTful API, rather than an RPC framework, allows us to accelerate the creation of APIs that our third-party customers can leverage. Optionally, changes to your local application state can be output to an event stream to allow other teams to update their systems asynchronously.

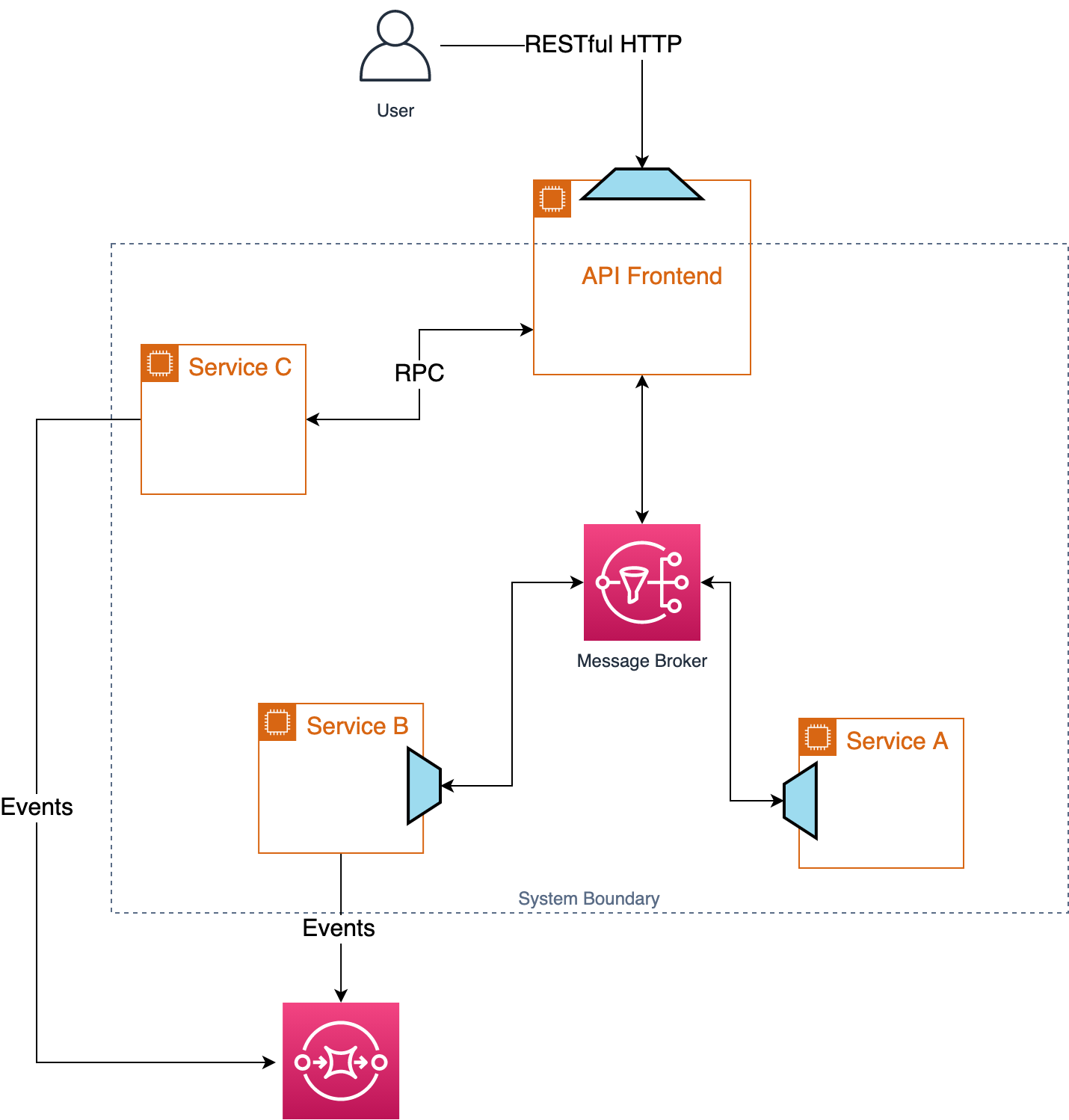

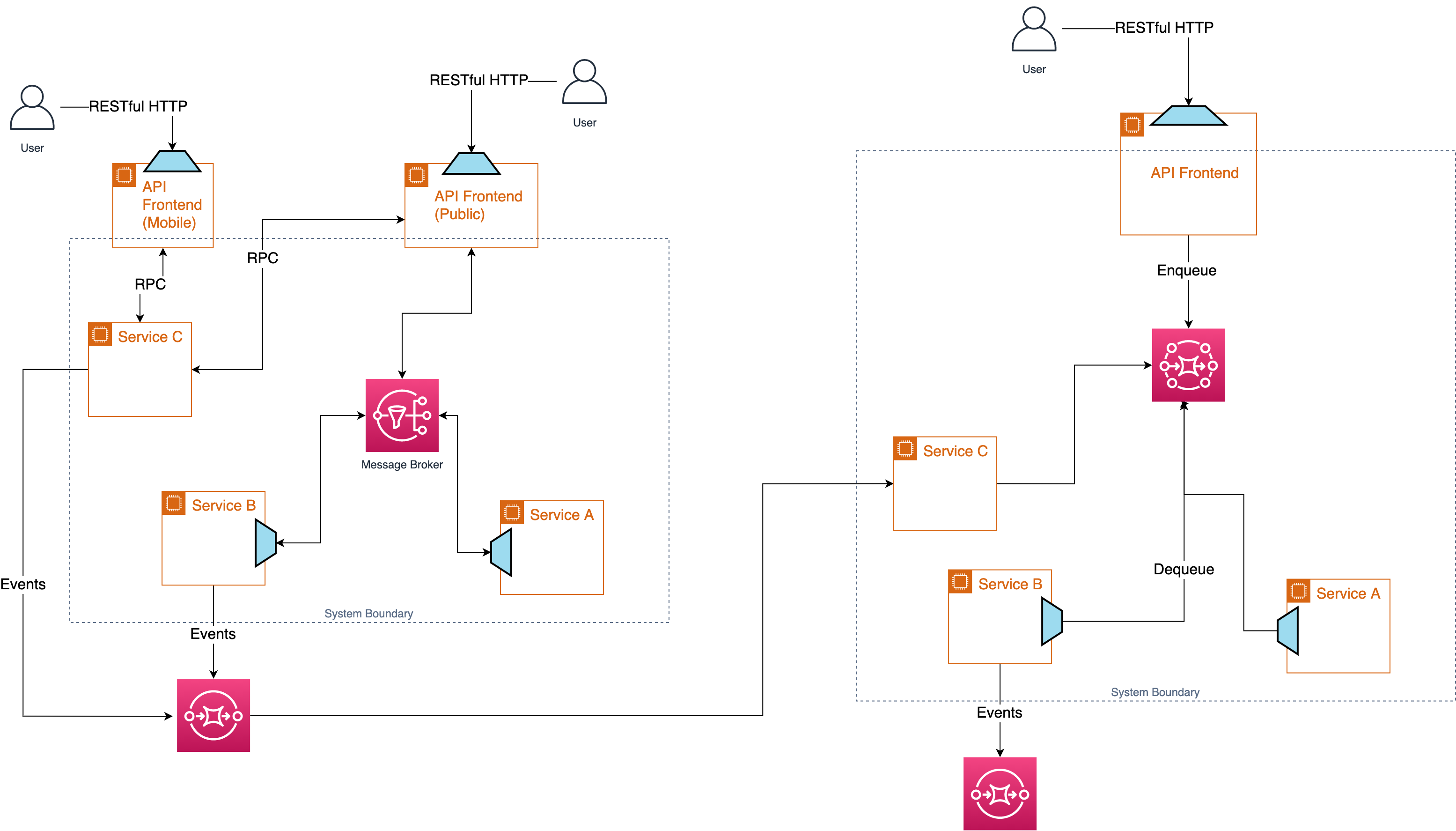

Multiple Services

As your service grows, you may choose to split your RESTful service into multiple microservices. Congratulations! You now have a distributed system! This means you have to prepare for an additional set of challenges and you should begin favouring asynchronous communication between services. Going fully asynchronous limits our ability to release third-party APIs, so the correct approach here is to keep the RESTful API you created in your single service and use that as a frontend into the boundary of your system. As far as developers outside of your squad are concerned, the only API to your system is your RESTful frontend and your event stream, exactly as though it were a single service.

Within your system boundary, you may choose to organize your microservices in ways that make sense to your team, including leveraging asynchronous communication via a message broker or an RPC framework like Frugal. The key thing to keep in mind is to maintain a stable frontend API into your system: strive to avoid leaking internal details as much as possible.

The event stream you have in place still gives other developers a way to asynchronously receive updates to any application state.

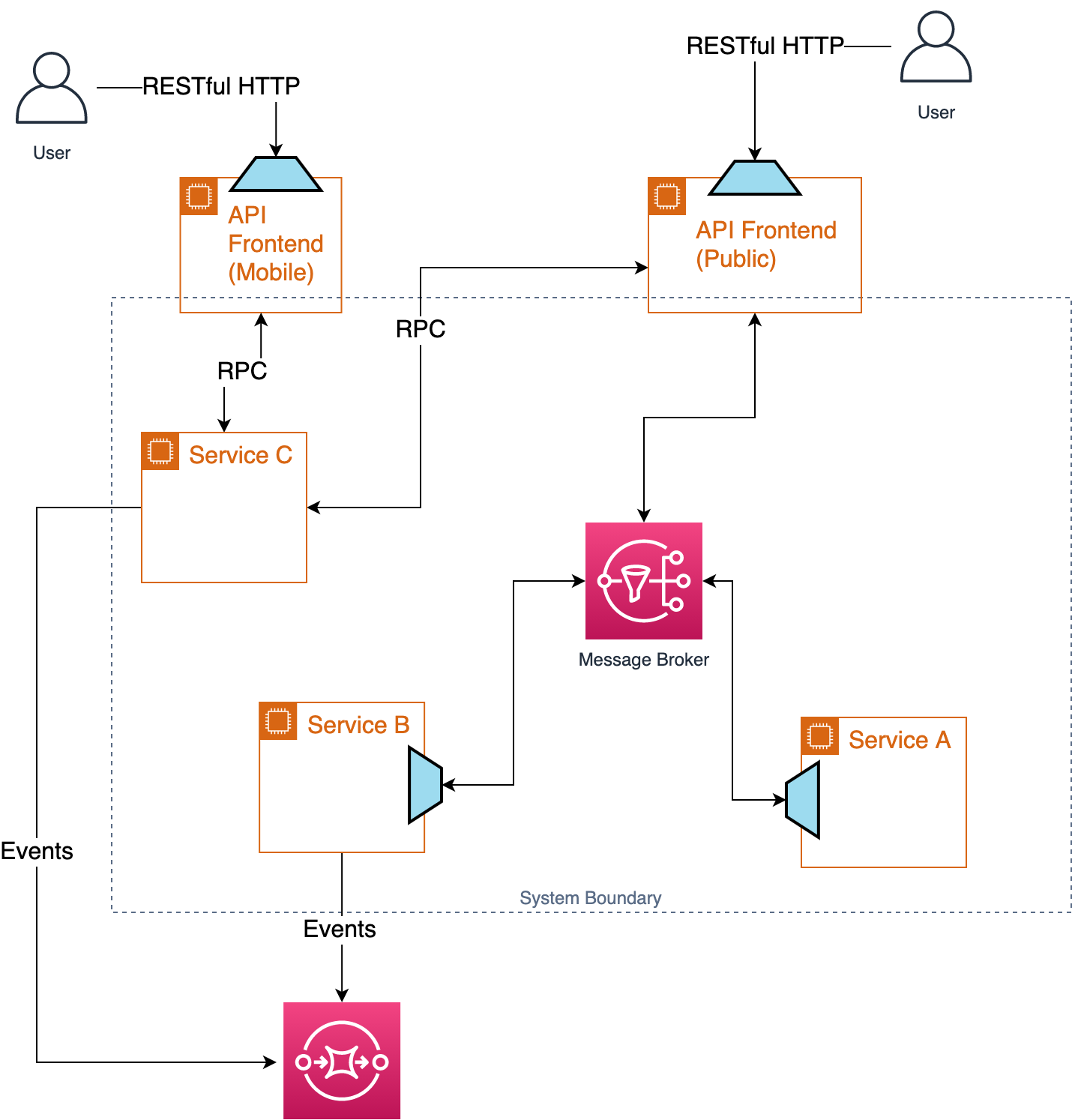

Multiple Consumers

Although we have a nice RESTful API, it is unlikely the same API will be useful to the browser, other backend services, third-party customers, and a mobile application all at the same time. If you are faced with multiple users with different integration needs, you can add API frontends that maintain the boundary between your system and external consumers using the Backends for Frontends pattern.
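As a small sketch of the idea, the two functions below act as separate frontends over the same internal data. Everything here is hypothetical (the `Product` fields, the catalog contents, and the two view functions); the point is only that each frontend shapes the response for its consumer and that internal details such as `warehouse_bin` stay inside the boundary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """Internal representation; free to change without notice."""
    sku: str
    name: str
    description: str
    price_cents: int
    warehouse_bin: str  # internal detail that should never leak


# Hypothetical internal data store.
CATALOG = {
    "sku-1": Product("sku-1", "Kettle", "A 1.7 litre electric kettle.", 2499, "B-14"),
}


def mobile_product_view(sku: str) -> dict:
    """Frontend for the mobile app: compact and display-ready."""
    product = CATALOG[sku]
    dollars, cents = divmod(product.price_cents, 100)
    return {"name": product.name, "price": f"${dollars}.{cents:02d}"}


def partner_product_view(sku: str) -> dict:
    """Frontend for third parties: a stable, explicit contract."""
    product = CATALOG[sku]
    return {
        "sku": product.sku,
        "name": product.name,
        "description": product.description,
        "price_cents": product.price_cents,
    }
```

Each view can evolve with its own consumer, while the internal `Product` can gain or lose fields as long as the frontends keep honouring their contracts.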

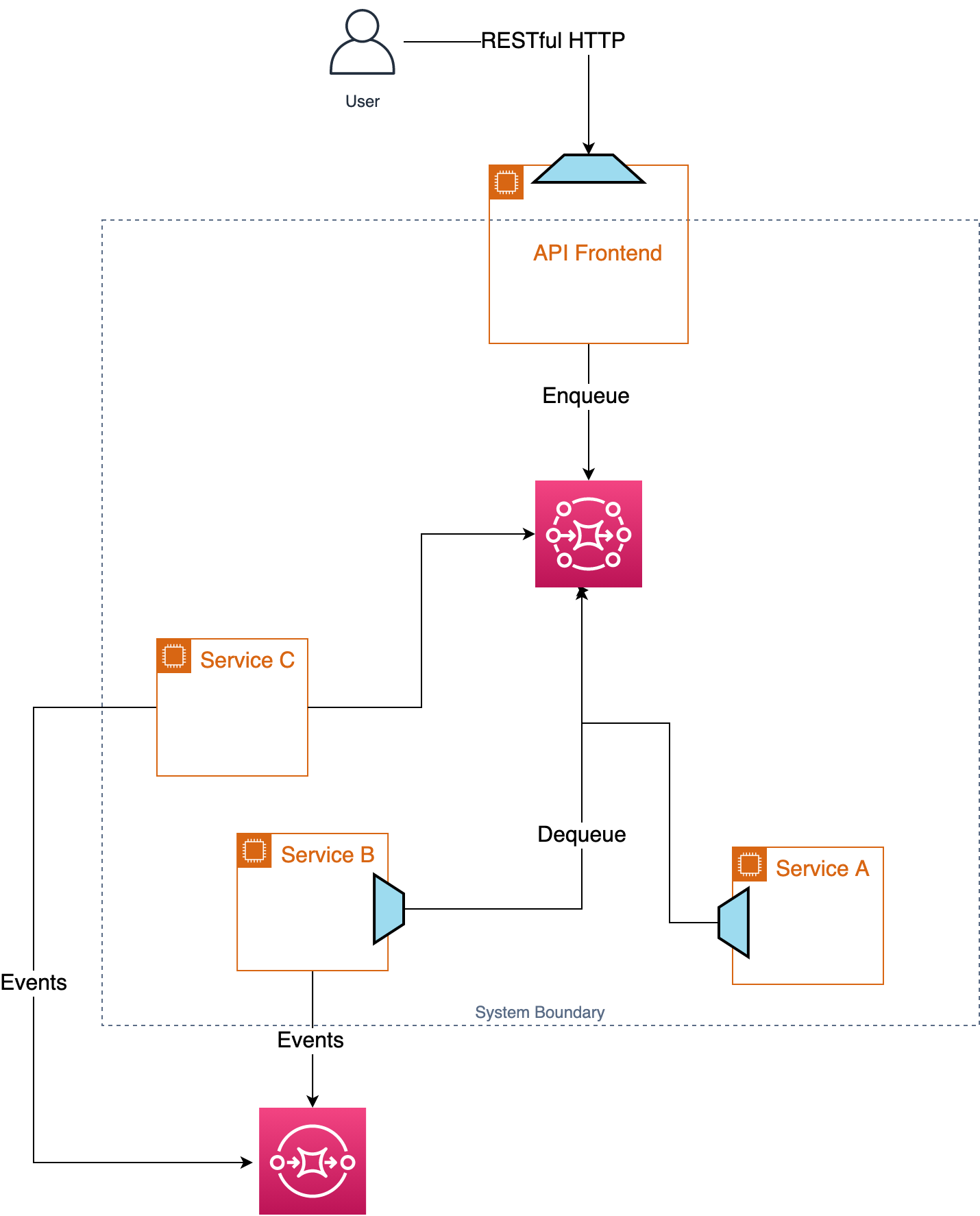

Balancing Load with a Queue

As your service continues to grow in usefulness and users, you may end up needing to balance load using a queue. Remembering that queues belong in the consumer, you can update your system to include a queue for queue-based load leveling, or increase throughput by adding queue consumers.

This type of change now makes your RESTful API asynchronous, which may require both you and your consumers to make some adjustments in how they interact with your service.
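One common way to handle this adjustment is to have the API acknowledge the request immediately and let the client check back for the result. The sketch below models that with plain functions that return an HTTP status code and a body instead of using a web framework. The endpoint names, the job store, and the order payload are all assumptions made for illustration.

```python
import queue
import uuid

# Hypothetical in-memory job store and work queue.
jobs: dict[str, dict] = {}
pending: queue.Queue = queue.Queue()


def post_order(body: dict) -> tuple[int, dict]:
    """Accept the request, queue the work, and reply 202 Accepted immediately."""
    job_id = str(uuid.uuid4())
    jobs[job_id] = {"status": "pending", "input": body, "result": None}
    pending.put(job_id)
    return 202, {"job_id": job_id, "status_url": f"/jobs/{job_id}"}


def get_job(job_id: str) -> tuple[int, dict]:
    """Let the client poll for progress and, eventually, the result."""
    job = jobs.get(job_id)
    if job is None:
        return 404, {"error": "unknown job"}
    return 200, {"status": job["status"], "result": job["result"]}


def process_next() -> bool:
    """Queue consumer: handle one job if any is waiting."""
    try:
        job_id = pending.get_nowait()
    except queue.Empty:
        return False
    job = jobs[job_id]
    total = sum(item["price_cents"] * item["qty"] for item in job["input"]["items"])
    job["result"] = {"total_cents": total}
    job["status"] = "done"
    return True
```

The consumer-facing change is that a successful `POST` no longer means the work is finished, only that it was accepted. Clients either poll the status URL or, as discussed earlier, register a callback to be notified when the job completes.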

Building a Composable Platform

I’ve framed our short example in the context of a team building up a set of services to address their specific problems by using a RESTful API frontend coupled with an asynchronous event stream as the interface to their system. This interface is independent of the number and configuration of individual microservices within the boundary of the system. As far as outside consumers are concerned, the system is a RESTful API and a series of events. Internal to the team, the choice to use an RPC framework, a queue, or an asynchronous message bus should be treated mostly as an implementation detail. The guidance suggested here is to favour asynchronous communication; where that is not possible, to favour RESTful APIs so that we are best positioned to meet the needs of third-party customers; and to treat queues as an implementation detail that helps address burstiness and scale, not as an API for others.

The following image shows how two systems can be composed leveraging their API frontends and events to integrate between them.

With each team addressing the APIs to their system in this way, we can start to build up a composable platform of RESTful APIs and event streams that follows a good set of architecture principles:

- No single point of failure. Queues and/or topics are localized to a bounded context instead of using a global messaging bus. This also allows for a scalable architecture using z-scaling without having to replicate our entire system.

- Isolate faults. By separating private details into your local system boundary, any faults are localized to the bounded context, limiting the blast radius for failure.

- Asynchronous design. This design supports an asynchronous and event-driven design.

- Stateless. API Frontends (also called Backends for Frontends or BFFs) are naturally stateless and scalable. Backends for Frontends can be combined with scalable, stateless workers in a Competing Consumers or Queue-Based Load Leveling pattern.

- Scale out, not up. BFFs and queue-based load levelling are naturally scalable. Splitting RPC traffic out of a global NATS allows our organization to scale out more effectively than relying on scaling up usage of NATS indefinitely.

- Think external first. BFFs at each edge of a Bounded Context support third-party APIs. APIs you expose to others are designed intentionally and support open standards.