Amazon’s Elastic Compute Cloud (EC2). EC2 is a web service that provides resizable, on-demand computing capacity — literally, servers in Amazon’s data centers — that you use to build and host your software.

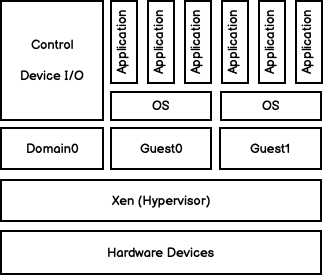

It’s important to understand that EC2 is a virtual computing environment. In a virtual environment, there is one physical server with all of the necessary hardware: CPU, memory, hard disk, network controller, and more. This single physical server can host multiple operating systems and applications through a hypervisor that runs directly on top of the physical machine. The following simplified diagram shows a hypervisor architecture based on the Xen project. Xen runs directly on the hardware and has a special control domain, domain 0 (dom0), for interfacing with it. Domain 0 is designed to access hardware directly and to manage devices and device drivers. It can also instruct the hypervisor to start and stop guest VMs.

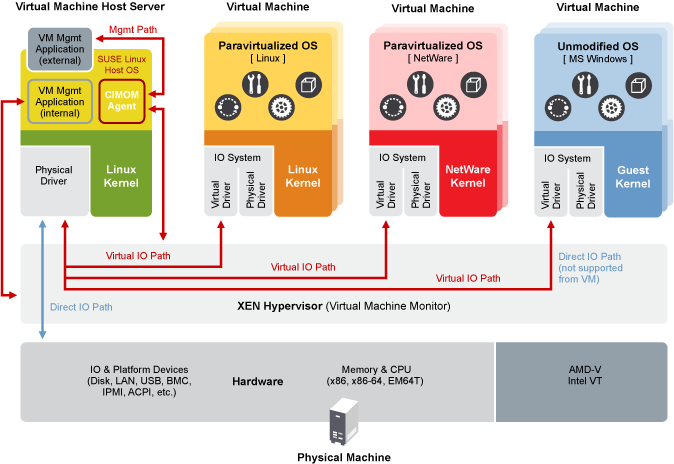

Once a guest domain is provisioned, it operates as an isolated environment, communicating with the physical hardware through domain 0 (dom0) via virtualized devices. From the point of view of an application in a guest domain, access to physical hardware is transparent: each virtual device behaves just like the real thing, and the hypervisor architecture takes care of passing I/O requests between physical and virtual devices. The following diagram, from SUSE’s documentation on virtualization, shows the interaction between virtual machine guests and the host system.

Because virtualization is such a core component of cloud computing, AWS and other cloud companies have spent significant resources making sure it is as performant as possible. One trend to increase performance is to leverage hardware-based virtualization. A guest domain virtualized through hardware is called a “hardware virtual machine” or HVM. HVMs allow guest domains to run with minimal software virtualization. The hardware components allow for the direct creation and use of isolated I/O for each guest domain using physical hardware.

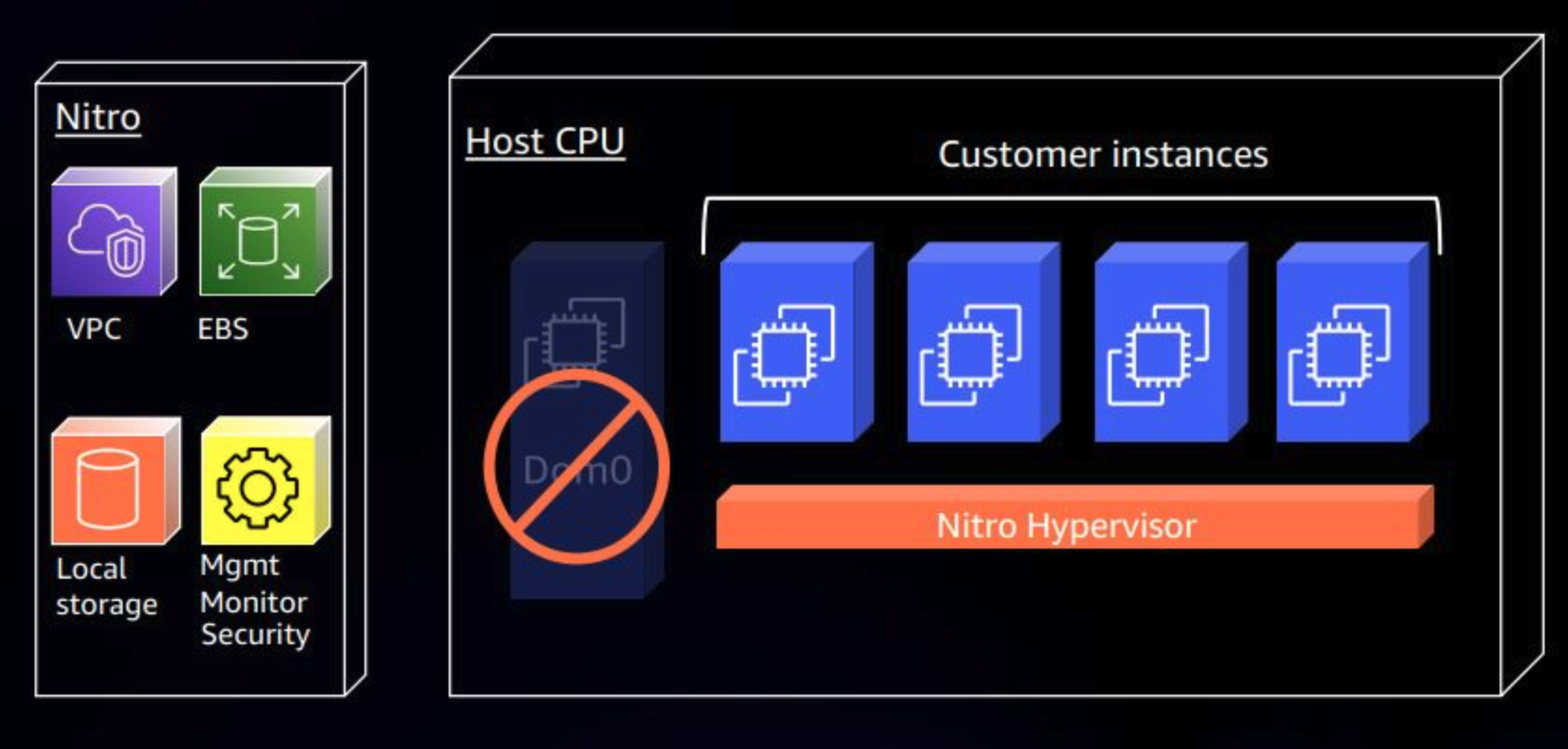

The latest generation of Amazon’s EC2 virtualization uses a hardware-based system that AWS calls Nitro. Nitro is a custom virtualization framework developed at AWS. It consists of a thin hypervisor that manages memory and CPU allocation for guest domains, alongside dedicated physical hardware that provides storage, networking, and a security chip.

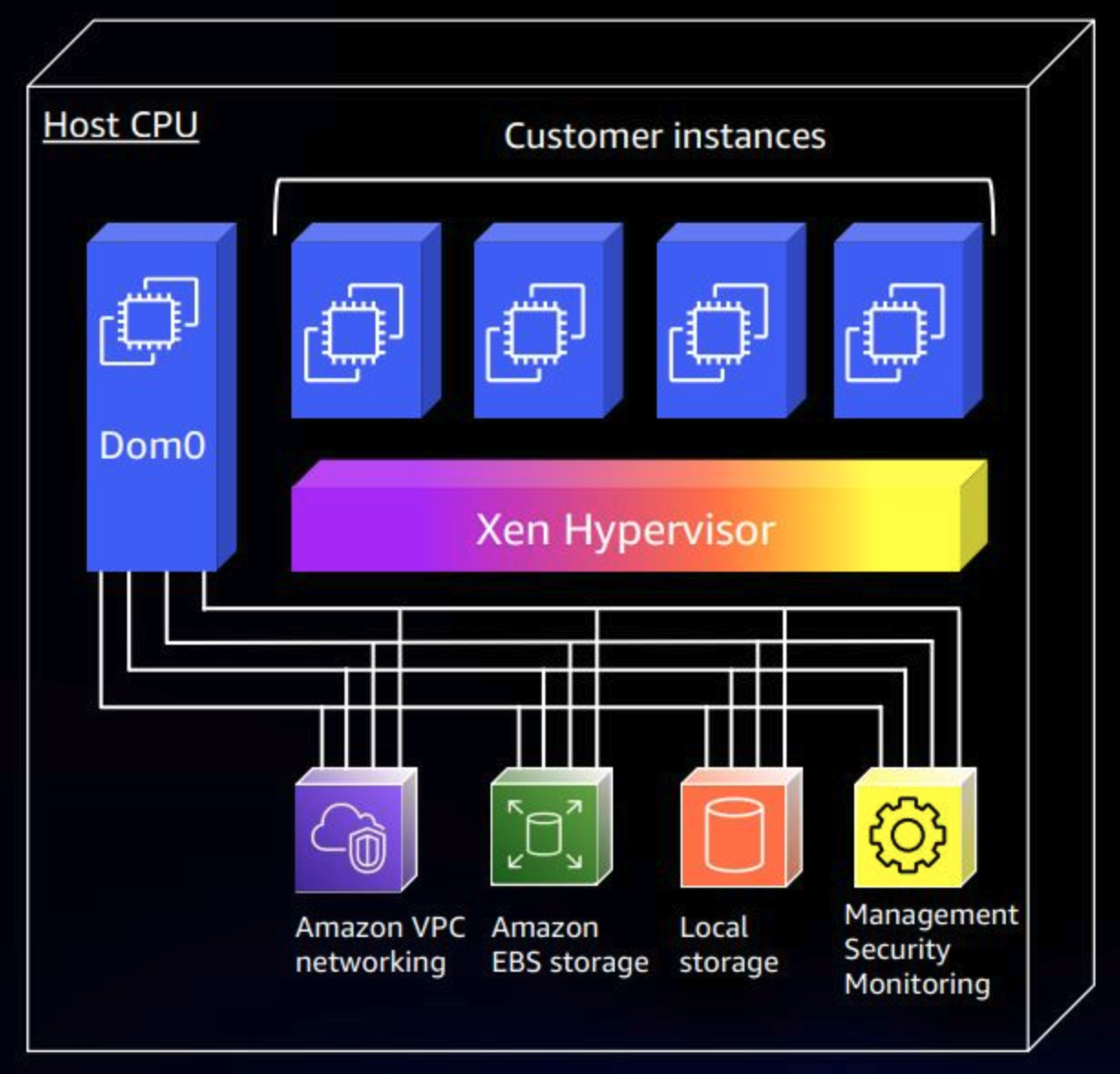

Prior to the Nitro system, AWS used the Xen hypervisor. With Xen, VPC networking, EBS storage, local storage, and all management functions happen at the dom0 level. Put more simply, the same CPU that is provisioned for guests to use as EC2 instances also has to handle networking, storage, and instance management, leaving less overall CPU for each guest.

With Nitro, AWS uses physical cards to handle VPC networking, EBS, local storage, and security and management. Because dedicated cards do this work, the host CPU no longer needs to be involved. The functions that previously ran in dom0 are offloaded, so the hypervisor can focus on provisioning CPU and memory for EC2 instances.
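To see which hypervisor backs a given instance type, one option is the EC2 `DescribeInstanceTypes` API, whose response includes a `Hypervisor` field for virtualized instance types. The following is a minimal sketch, assuming `boto3` is installed and AWS credentials are configured; the helper names `hypervisor_by_type` and `fetch_hypervisors` and the default region are illustrative choices, not part of any AWS library.

```python
from typing import Dict, List


def hypervisor_by_type(response: dict) -> Dict[str, str]:
    """Map each instance type in a DescribeInstanceTypes response to its hypervisor.

    Entries without a "Hypervisor" key (for example bare-metal types)
    are reported as "none reported".
    """
    return {
        item["InstanceType"]: item.get("Hypervisor", "none reported")
        for item in response.get("InstanceTypes", [])
    }


def fetch_hypervisors(instance_types: List[str], region: str = "us-east-1") -> Dict[str, str]:
    """Query EC2 for the given instance types (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the parsing helper works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.describe_instance_types(InstanceTypes=instance_types)
    return hypervisor_by_type(response)
```

With valid credentials, calling `fetch_hypervisors(["t2.micro", "t3.micro"])` returns a dictionary that labels each type as `xen` or `nitro`, which makes it easy to check whether an instance family has moved to the Nitro system.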

Moving storage, networking, and security functions to hardware means that the hypervisor can make just about all of the host’s CPU power and memory available to the guest operating systems since the hypervisor plays a greatly diminished role. This also means that the hypervisor needs to perform less work for each request, delivering higher overall performance. Brendan Gregg, former performance engineer at Netflix, calls hardware virtualization “near bare-metal”, with only between 0.1% and 1.5% overhead for typical workloads.
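To put those overhead figures in perspective, here is a rough back-of-the-envelope sketch. It treats the overhead as a flat fraction of CPU capacity, which is a simplification, and the 96-vCPU host size is a hypothetical number chosen only for illustration.

```python
def usable_vcpus(host_vcpus: int, overhead_pct: float) -> float:
    """Return the vCPU-equivalents left for guests after virtualization overhead."""
    if not 0 <= overhead_pct <= 100:
        raise ValueError("overhead_pct must be between 0 and 100")
    return host_vcpus * (1 - overhead_pct / 100)


HOST_VCPUS = 96  # hypothetical host size, for illustration only

# The two bounds quoted above for typical workloads.
for pct in (0.1, 1.5):
    remaining = usable_vcpus(HOST_VCPUS, pct)
    print(f"{pct}% overhead -> {remaining:.2f} of {HOST_VCPUS} vCPUs available to guests")
```

Under these assumptions, even the upper bound of 1.5% costs less than two vCPUs' worth of capacity on such a host.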