With so many people running microservice workloads, it is inevitable that organizations keep bumping into the same set of problems: state management, resiliency, event handling, and more. Dapr exists to codify the best practices for building microservice applications into building blocks that let you build portable applications with the language and framework of your choice. Each building block is completely independent, so you can use one, some, or all of them in your application to solve common microservice problems.

Building Blocks

Dapr packages best practices for building microservice applications as common capabilities. Dapr calls these capabilities building blocks: independent pieces of functionality that your application consumes through a standard API.

| Building Block | Description |
|---|---|
| Service Invocation | Resilient service-to-service invocation enables method calls, including retries, on remote services wherever they are located in the supported hosting environment. |
| State Management | With state management for storing key/value pairs, long-running, highly available, stateful services can be easily written alongside stateless services in your application. The state store is pluggable and can include Azure Cosmos DB, Azure SQL Server, PostgreSQL, AWS DynamoDB, or Redis, among others. |
| Publish and Subscribe Messaging | Publishing events and subscribing to topics between services enables event-driven architectures to simplify horizontal scalability and make them resilient to failure. |
| Resource Bindings | Resource bindings with triggers build further on event-driven architectures for scale and resiliency by receiving and sending events to and from any external source, such as databases, queues, and file systems. |
| Actors | A pattern for stateful and stateless objects that makes concurrency simple through method and state encapsulation. Dapr's actor runtime provides concurrency, state, life-cycle management for actor activation and deactivation, and timers and reminders to wake up actors. |
| Observability | Dapr emits metrics, logs, and traces to debug and monitor both Dapr and user applications. Dapr supports distributed tracing to diagnose and observe inter-service calls in production using the W3C Trace Context standard and OpenTelemetry, which can send data to different monitoring tools. |
| Secrets | Dapr provides secrets management and integrates with public cloud and local secret stores to retrieve secrets for use in application code. |

Each of these building blocks provides the API that your application will interact with. The building block APIs are implemented using components.
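To make "the API that your application will interact with" concrete: each building block is exposed by the sidecar as an HTTP endpoint under /v1.0/. The sketch below collects the URL shapes into small helper functions. The path formats follow the Dapr HTTP API reference for current releases (older releases such as 0.10 may differ, notably for pub/sub); the helper names are hypothetical, and the port 3500 is Dapr's default sidecar HTTP port, which an application would normally read from the DAPR_HTTP_PORT environment variable.

```python
"""URL shapes for the Dapr sidecar HTTP API (sketch; helper names are hypothetical)."""

DAPR_HTTP_PORT = 3500  # Dapr's default sidecar HTTP port; normally read from DAPR_HTTP_PORT


def base_url(port: int = DAPR_HTTP_PORT) -> str:
    # From the application's point of view, the sidecar always lives on localhost.
    return f"http://localhost:{port}/v1.0"


def invoke_url(app_id: str, method: str, port: int = DAPR_HTTP_PORT) -> str:
    # Service invocation: call `method` on the application registered as `app_id`.
    return f"{base_url(port)}/invoke/{app_id}/method/{method}"


def state_url(store: str, key: str = "", port: int = DAPR_HTTP_PORT) -> str:
    # State management: POST to the store URL to save, GET or DELETE a single key.
    url = f"{base_url(port)}/state/{store}"
    return f"{url}/{key}" if key else url


def publish_url(pubsub: str, topic: str, port: int = DAPR_HTTP_PORT) -> str:
    # Publish and subscribe: POST an event to `topic` on the named pub/sub component.
    return f"{base_url(port)}/publish/{pubsub}/{topic}"


def binding_url(name: str, port: int = DAPR_HTTP_PORT) -> str:
    # Resource bindings: POST a payload to the named output binding.
    return f"{base_url(port)}/bindings/{name}"


def secret_url(store: str, name: str, port: int = DAPR_HTTP_PORT) -> str:
    # Secrets: GET a named secret from a secret store component.
    return f"{base_url(port)}/secrets/{store}/{name}"


def actor_method_url(actor_type: str, actor_id: str, method: str,
                     port: int = DAPR_HTTP_PORT) -> str:
    # Actors: invoke a method on a single actor instance.
    return f"{base_url(port)}/actors/{actor_type}/{actor_id}/method/{method}"


if __name__ == "__main__":
    print(state_url("statestore", "order"))
    print(invoke_url("pyserver", "order"))
```

Because every building block shares the same base URL, an application only needs to know the sidecar port and the component names it was configured with.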

Components

Components deliver a consistent implementation for building blocks. A component has a defined interface that can be implemented by a variety of backend technologies. For example, the state component provides a common way to interact with data storage systems. Any storage system can be used (DynamoDB, Redis, SQL Server) as long as the component interface is implemented. As of this writing, a compliant state store needs to implement one or more interfaces: Store and TransactionalStore.

The interface for Store:

```go
type Store interface {
	Init(metadata Metadata) error
	Delete(req *DeleteRequest) error
	BulkDelete(req []DeleteRequest) error
	Get(req *GetRequest) (*GetResponse, error)
	Set(req *SetRequest) error
	BulkSet(req []SetRequest) error
}
```

The interface for TransactionalStore:

```go
type TransactionalStore interface {
	Init(metadata Metadata) error
	Multi(reqs []TransactionalRequest) error
}
```

So, for example, by implementing these interfaces with DynamoDB as the backing storage system, you allow Dapr to use a DynamoDB-backed State component to fulfill the State Management building block.
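To illustrate what "implementing the interface" buys you, here is a Python analogue of the two Go interfaces, written with typing.Protocol, plus an in-memory backend that satisfies both. This is illustration only: real Dapr components are written in Go against the actual interfaces, and the simplified signatures here (plain keys and values, and ("upsert" | "delete", key, value) tuples for transactions, mirroring the operation names of Dapr's transaction API) are assumptions rather than Dapr's SetRequest or TransactionalRequest types.

```python
from typing import Any, Dict, List, Optional, Protocol, Tuple


class Store(Protocol):
    """Simplified, hypothetical analogue of Dapr's Go `Store` interface."""

    def init(self, metadata: Dict[str, str]) -> None: ...
    def get(self, key: str) -> Optional[Any]: ...
    def set(self, key: str, value: Any) -> None: ...
    def delete(self, key: str) -> None: ...
    def bulk_set(self, items: List[Tuple[str, Any]]) -> None: ...
    def bulk_delete(self, keys: List[str]) -> None: ...


class TransactionalStore(Protocol):
    """Simplified analogue of `TransactionalStore`: apply a batch atomically."""

    def init(self, metadata: Dict[str, str]) -> None: ...
    def multi(self, ops: List[Tuple[str, str, Any]]) -> None: ...


class InMemoryStore:
    """Toy backend satisfying both protocols; a real one would wrap Redis, DynamoDB, etc."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}

    def init(self, metadata: Dict[str, str]) -> None:
        # A real component would open connections using metadata such as redisHost.
        self._data.clear()

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)

    def bulk_set(self, items: List[Tuple[str, Any]]) -> None:
        for key, value in items:
            self.set(key, value)

    def bulk_delete(self, keys: List[str]) -> None:
        for key in keys:
            self.delete(key)

    def multi(self, ops: List[Tuple[str, str, Any]]) -> None:
        staged = dict(self._data)  # work on a copy so a failure leaves the store untouched
        for op, key, value in ops:
            if op == "upsert":
                staged[key] = value
            elif op == "delete":
                staged.pop(key, None)
            else:
                raise ValueError(f"unsupported operation: {op}")
        self._data = staged  # commit all changes at once


def save_order(store: Store, order: Dict[str, Any]) -> None:
    # Application-side code depends only on the protocol, never on the backend.
    store.set("order", order)
```

Swapping InMemoryStore for a Redis- or DynamoDB-backed class would leave save_order unchanged, which is the same property Dapr provides at the component level.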

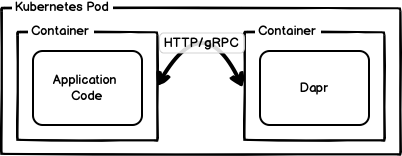

Sidecar Architecture

Dapr achieves its claim of being a portable runtime through a sidecar architecture, which allows Dapr to be deployed alongside application code without any changes to the application itself. Sidecars are typically associated with running containers in Kubernetes, and this arrangement is depicted in the following figure. In this architecture, your application running in one container communicates with a separate container running Dapr over an HTTP or gRPC API. Because your application code and Dapr run in the same Kubernetes Pod, and containers in a Pod share a network namespace, the HTTP or gRPC request goes to localhost and is resolved on the same host.
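From the application's side, then, reaching the sidecar is just an HTTP call to localhost. The sketch below reads the sidecar port from the DAPR_HTTP_PORT environment variable (falling back to 3500, Dapr's default) and polls the sidecar's /v1.0/healthz endpoint, which in current Dapr releases answers 204 No Content when the sidecar is healthy. The function names, retry count, and delay are our own assumptions, not part of any Dapr SDK.

```python
import os
import time
import urllib.request


def sidecar_base_url() -> str:
    # The injector sets DAPR_HTTP_PORT; 3500 is Dapr's default sidecar HTTP port.
    port = os.getenv("DAPR_HTTP_PORT", "3500")
    # Containers in a Pod share a network namespace, so localhost reaches the sidecar.
    return f"http://localhost:{port}"


def wait_for_sidecar(base_url: str, attempts: int = 10, delay: float = 0.5) -> bool:
    """Poll GET /v1.0/healthz until the sidecar answers 204 or the attempts run out."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(f"{base_url}/v1.0/healthz", timeout=2) as resp:
                if resp.status == 204:
                    return True
        except OSError:
            # URLError, HTTPError, and socket timeouts all derive from OSError:
            # the sidecar is not reachable or not healthy yet.
            pass
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("sidecar ready:", wait_for_sidecar(sidecar_base_url()))
```

Waiting like this at startup avoids failed requests in the short window where the application container is up but the sidecar is not.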

Installing Dapr in Kubernetes

To apply what we’ve covered so far, let’s build a basic application using Dapr and running on Kubernetes. This example should show more clearly how Building Blocks, Components, and the Dapr sidecar API interact in practice. I’ll use the built-in Kubernetes support in Docker Desktop, but if you already have a Kubernetes cluster set up for development, go ahead and use that instead. From here on, I will assume that you have kubectl installed and configured to communicate with a running Kubernetes cluster (either locally or elsewhere). If you need help with that step, follow the kubectl documentation for installing the Kubernetes CLI, and the minikube documentation for installing a local Kubernetes test cluster.

To deploy Dapr to Kubernetes, Dapr requires two extra services: the dapr-sidecar-injector, which adds the Dapr sidecar to Pods in Kubernetes, and the dapr-operator, which handles updates to components provisioned within the cluster. In addition, two other services provide additional functionality if required: dapr-sentry provides mutual TLS (mTLS) between Dapr sidecar instances, and dapr-placement places actors on Pods if your application uses the actor model.

The simplest way to deploy Dapr to Kubernetes is the Dapr CLI init command with the -k flag. This command reads your local kubectl configuration and installs Dapr into the cluster that configuration points to. We can run the following commands to install the CLI (here with Homebrew on macOS) and then use the CLI to install Dapr:

```shell
$ brew install dapr/tap/dapr-cli
$ dapr init -k
```

You can verify your installation with the dapr status command:

```shell
$ dapr status -k
  NAME                   NAMESPACE    HEALTHY  STATUS   VERSION  AGE  CREATED
  dapr-sentry            dapr-system  True     Running  0.10.0   16s  2020-09-02 13:31.33
  dapr-operator          dapr-system  True     Running  0.10.0   16s  2020-09-02 13:31.33
  dapr-placement         dapr-system  True     Running  0.10.0   16s  2020-09-02 13:31.33
  dapr-sidecar-injector  dapr-system  True     Running  0.10.0   16s  2020-09-02 13:31.33
```

An alternative way to verify your installation is to use kubectl to list the running Pods in the dapr-system namespace (the -w flag keeps watching for changes; press Ctrl+C to exit):

```shell
$ kubectl get pods -n dapr-system -w
NAME                                     READY   STATUS    RESTARTS   AGE
dapr-dashboard-6c6c45df7b-8xxtc          1/1     Running   0          7m30s
dapr-operator-d7fb8dc96-75t9k            1/1     Running   0          7m30s
dapr-placement-59cb877cc5-5qn9g          1/1     Running   0          7m30s
dapr-sentry-d65788756-x494n              1/1     Running   0          7m30s
dapr-sidecar-injector-6df4dcd945-bhljw   1/1     Running   0          7m30s
```

After installation, any new Pod deployed to Kubernetes with the dapr.io/enabled: "true" annotation will have a Dapr sidecar attached to it by the dapr-sidecar-injector. The sidecar injector is an implementation of a Kubernetes admission controller (specifically, a mutating admission webhook) that intercepts requests to the Kubernetes API server and mutates them before the objects are stored. In our case, the mutation adds the Dapr sidecar container to the Pod.
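The injector's job can be pictured as a function from an incoming Pod spec to a JSON Patch (RFC 6902) that appends a sidecar container. The sketch below is a deliberately simplified, hypothetical model: the real dapr-sidecar-injector is written in Go, receives an AdmissionReview from the API server, and sets many more fields (ports, environment variables such as DAPR_HTTP_PORT, probes, certificates). The dapr.io/* annotations are the ones used in the Deployment later in this walkthrough; the daprd container name, the daprio/daprd image, and the --app-id and --app-port arguments follow Dapr's naming but are assumptions here.

```python
import json
from typing import Any, Dict, List


def sidecar_patch(pod: Dict[str, Any], image: str = "daprio/daprd:latest") -> List[Dict[str, Any]]:
    """Return a JSON Patch that appends a Dapr sidecar, or [] if the Pod did not opt in."""
    annotations = pod.get("metadata", {}).get("annotations", {})
    if annotations.get("dapr.io/enabled") != "true":
        return []  # only Pods that opt in via the annotation get a sidecar
    app_id = annotations.get("dapr.io/app-id", pod["metadata"].get("name", ""))
    args = ["--app-id", app_id]
    if "dapr.io/app-port" in annotations:
        # Tells the sidecar which port the application container listens on.
        args += ["--app-port", annotations["dapr.io/app-port"]]
    sidecar = {"name": "daprd", "image": image, "args": args}
    # In JSON Patch, "/spec/containers/-" means "append to the containers array".
    return [{"op": "add", "path": "/spec/containers/-", "value": sidecar}]


if __name__ == "__main__":
    pod = {
        "metadata": {
            "name": "pyserver",
            "annotations": {
                "dapr.io/enabled": "true",
                "dapr.io/app-id": "pyserver",
                "dapr.io/app-port": "8080",
            },
        },
        "spec": {"containers": [{"name": "pyserver", "image": "localhost:5000/pyserver:0.1"}]},
    }
    print(json.dumps(sidecar_patch(pod), indent=2))
```

Returning a patch rather than a modified Pod mirrors how mutating webhooks respond: the API server applies the patch before persisting the object.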

Deploying a Dapr Component

With Dapr installed in Kubernetes, we can begin building out our application. For this example, we will use a Dapr state store component implemented using Redis. The easiest way to deploy Redis to Kubernetes is with Helm. The chart for Redis is maintained by Bitnami, so we add the Bitnami chart repository to Helm and then install Redis using the following commands on macOS:

```shell
$ brew install helm  # If you do not have Helm installed
$ helm repo add bitnami https://charts.bitnami.com/bitnami
$ helm repo update
$ helm install redis bitnami/redis
```

Or the following on Linux:

```shell
$ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3
$ chmod 700 get_helm.sh
$ ./get_helm.sh
$ helm repo add bitnami https://charts.bitnami.com/bitnami
$ helm install redis bitnami/redis
```

To make the Redis implementation of the State Store Component available to Dapr applications, we define a YAML file with kind: Component. Note that the metadata field sets name: statestore; this is the name applications use to address the state store through the state API (for example, /v1.0/state/statestore). The spec field holds the Redis-specific information. Its metadata corresponds to the default configuration applied by the Redis Helm chart: the host defaults to redis-master.default.svc.cluster.local on port 6379, and the password is stored in a Kubernetes secret named redis under the key redis-password, which you can inspect with the kubectl describe secret redis command.

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: statestore
spec:
  type: state.redis
  metadata:
  - name: redisHost
    value: redis-master.default.svc.cluster.local:6379
  - name: redisPassword
    secretKeyRef:
      name: redis
      key: redis-password
```

We can save this file as redis.yaml and apply the Redis component to Kubernetes using kubectl. This triggers the dapr-operator to register the component as available to applications using Dapr.

```shell
$ kubectl apply -f redis.yaml
component.dapr.io/statestore created
```

You can view the component you just deployed in the Dapr dashboard by navigating to the Components section:

```shell
$ dapr dashboard -k
Dapr dashboard found in namespace: dapr-system
Dapr dashboard available at: http://localhost:8080
```

Or by using the Dapr CLI to list the available components:

```shell
$ dapr components -k
  NAME        TYPE         AGE  CREATED
  statestore  state.redis  17h  2020-09-02 15:56.27
```
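A running application can also ask its own sidecar which components it has loaded, which is a quick way to confirm that the operator delivered the component to the Pod. The sketch below assumes the GET /v1.0/metadata endpoint available in recent Dapr releases (the 0.10 release shown above may not include it) and assumes its JSON response carries a "components" list of objects with "name" and "type" fields; the function name is hypothetical.

```python
import json
import os
import urllib.request
from typing import List, Tuple


def registered_components(base_url: str) -> List[Tuple[str, str]]:
    """Return (name, type) pairs for the components the local sidecar has loaded."""
    with urllib.request.urlopen(f"{base_url}/v1.0/metadata", timeout=5) as resp:
        body = json.load(resp)
    return [(c["name"], c["type"]) for c in body.get("components", [])]


if __name__ == "__main__":
    # Inside a Dapr-enabled Pod, the sidecar port comes from DAPR_HTTP_PORT (default 3500).
    url = f"http://localhost:{os.getenv('DAPR_HTTP_PORT', '3500')}"
    for name, kind in registered_components(url):
        print(f"{name}\t{kind}")
```

Run inside the pyserver Pod, this would be expected to list the statestore component with type state.redis.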

Deploying a Python Application with the Dapr sidecar

Now that we have a State Store component, we can deploy a Python Dapr application that uses the State Building Block API.

The following example code shows a simple Flask application with a single endpoint, /order. An HTTP POST request to this endpoint creates an order, while a GET request returns the previously created order. The order data is stored in the Redis state store we deployed earlier.

```python
import os

import flask
import requests
from flask import request

app = flask.Flask(__name__)

# The Dapr sidecar injector sets these environment variables in the app container;
# the fallbacks are Dapr's default HTTP and gRPC ports.
dapr_port = os.getenv("DAPR_HTTP_PORT", "3500")
dapr_grpc_port = os.getenv("DAPR_GRPC_PORT", "50001")

# Must match metadata.name of the state store Component.
state_store_name = "statestore"
state_url = "http://localhost:{}/v1.0/state/{}".format(dapr_port, state_store_name)

# Port this HTTP server listens on (matches dapr.io/app-port in the Deployment).
port = 8080


@app.route("/order", methods=["GET"])
def get_orders():
    # Read the value stored under the key "order" through the sidecar.
    r = requests.get(state_url + "/order")
    if not r.ok:
        return r.text, r.status_code
    if r.status_code == 204:
        # Dapr answers 204 No Content when the key does not exist.
        return {}
    return flask.jsonify(r.json())


@app.route("/order", methods=["POST"])
def new_order():
    content = request.get_json()
    # The state API expects a list of key/value pairs.
    state = [{"key": "order", "value": content}]
    r = requests.post(state_url, json=state)
    # Pass Dapr's response through (204 on success, an error body otherwise).
    return r.text, r.status_code


@app.route("/")
def ports():
    return {
        "DAPR_HTTP_PORT": dapr_port,
        "DAPR_GRPC_PORT": dapr_grpc_port,
    }


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=port)
```

To deploy this application to Kubernetes, we require a Dockerfile to package everything up.

```dockerfile
FROM python:3.8-slim-buster
COPY . /app
WORKDIR /app
RUN pip install --upgrade pip
RUN pip install flask requests
ENTRYPOINT ["python"]
EXPOSE 8080
CMD ["app.py"]
```

Then build the image and push it to a local registry (this assumes a Docker registry is listening on localhost:5000):

```shell
$ docker build -t localhost:5000/pyserver:0.1 .
$ docker push localhost:5000/pyserver:0.1
```

Finally, deploy your application to Kubernetes using the following configuration:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: pyserver
  labels:
    app: pyserver
spec:
  selector:
    app: pyserver
  ports:
  - port: 8080
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: pyserver
  labels:
    app: pyserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pyserver
  template:
    metadata:
      labels:
        app: pyserver
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "pyserver"
        dapr.io/app-port: "8080"
    spec:
      containers:
      - name: pyserver
        image: localhost:5000/pyserver:0.1
        ports:
        - containerPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pyserver-ingress
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: pyserver
            port:
              number: 8080
```

Save this configuration as server.yaml and apply it with kubectl:

```shell
$ kubectl apply -f server.yaml
```

You can test your application by making POST and GET requests to the /order endpoint.
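One way to exercise the endpoint is a small client script. The sketch below assumes the Service is reachable at http://localhost:8080, for example through an ingress controller or after running kubectl port-forward service/pyserver 8080:8080; the base URL, the sample order, and the function names are placeholders. It sets Content-Type: application/json because Flask only parses a request body as JSON when the request declares that content type.

```python
import json
import urllib.request
from typing import Any, Dict


def post_order(base_url: str, order: Dict[str, Any]) -> int:
    """POST an order to /order and return the HTTP status code."""
    req = urllib.request.Request(
        f"{base_url}/order",
        data=json.dumps(order).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},  # required for Flask's JSON parsing
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


def get_order(base_url: str) -> Any:
    """GET /order and decode the JSON the application returns (None if the body is empty)."""
    with urllib.request.urlopen(f"{base_url}/order", timeout=5) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else None


if __name__ == "__main__":
    base = "http://localhost:8080"  # placeholder: port-forwarded Service or ingress address
    print(post_order(base, {"item": "coffee", "quantity": 2}))
    print(get_order(base))
```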

What’s actually happening here?

In the implementation of the HTTP POST handler, we use the requests library to make a POST request to the state store API. This API is a generic key-value interface for storing state. What is important here is that, as far as the application is concerned, the state store API stays the same. In this example, we backed the state store API with the Redis datastore. If we like, we can switch to one of the many other state store implementations by changing only the Component definition, without changing the application code.
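Stripped of Flask, the application's interaction with the state building block comes down to plain HTTP calls against the sidecar: a POST of a JSON array of {"key", "value"} objects to /v1.0/state/&lt;store&gt;, a GET of /v1.0/state/&lt;store&gt;/&lt;key&gt;, and a DELETE of the same URL. The sketch below wraps those calls in a small, hypothetical StateClient class (not the Dapr Python SDK) using only the standard library. Nothing in it mentions Redis, which is why the same code keeps working if the statestore component is re-pointed at another backend.

```python
import json
import urllib.request
from typing import Any, Optional


class StateClient:
    """Minimal client for the Dapr state HTTP API (hypothetical helper, not the Dapr SDK)."""

    def __init__(self, store: str, port: int = 3500, host: str = "localhost") -> None:
        self.url = f"http://{host}:{port}/v1.0/state/{store}"

    def save(self, key: str, value: Any) -> None:
        # The save endpoint takes a list, so several keys could be written in one call.
        body = json.dumps([{"key": key, "value": value}]).encode()
        req = urllib.request.Request(self.url, data=body, method="POST",
                                     headers={"Content-Type": "application/json"})
        urllib.request.urlopen(req, timeout=5).close()  # raises HTTPError on 4xx/5xx

    def get(self, key: str) -> Optional[Any]:
        with urllib.request.urlopen(f"{self.url}/{key}", timeout=5) as resp:
            raw = resp.read()
        # The sidecar answers 204 No Content with an empty body when the key is missing.
        return json.loads(raw) if raw else None

    def delete(self, key: str) -> None:
        req = urllib.request.Request(f"{self.url}/{key}", method="DELETE")
        urllib.request.urlopen(req, timeout=5).close()
```

Inside the pyserver Pod, StateClient("statestore").save("order", {...}) would issue the same request as the Flask POST handler.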

Dapr runs as a sidecar, meaning that the POST request made by the Flask application is actually directed to the attached sidecar for this Kubernetes Pod. This separation of concerns allows application developers to iterate on their app, while infrastructure or platform operators can focus on the underlying implementation.