Title and Author of Paper

Concurrency Control Performance Modeling: Alternatives and Implications. R. Agrawal et al.

Summary

This paper takes an in-depth look at the performance implications of varying concurrency control algorithms. Specifically, it examines the performance of three concurrency methods: blocking, immediate-restart, and optimistic. In the blocking algorithm, all transactions set locks on objects that are read or written; whenever a lock request is denied, the requesting transaction is placed in a waiting queue until it can proceed (on deadlock, the youngest transaction is restarted). With immediate-restart transactions again acquire locks on objects. In this case, however, if the transaction is blocked it is immediately restarted (with some delay). For the optimistic case, all transactions are allowed to proceed as if no conflicts occur; only if a conflict is detected at commit time is a transaction restarted.

The simulation model

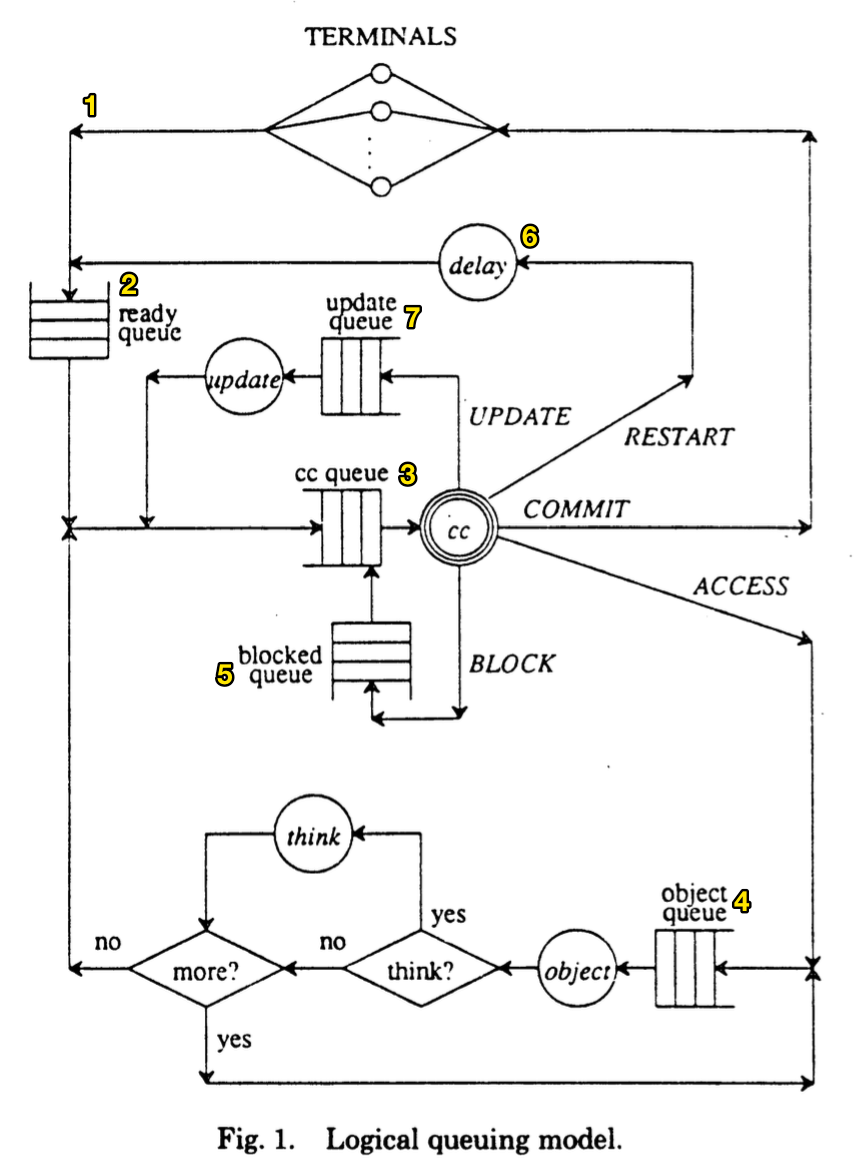

The paper develops a simulation model that encompasses these three concurrency algorithms as reproduced in Figure 1.

In this figure, incoming transactions (1) are placed into a ready queue (2). Once in the queue, transactions are selected for processing and enter the concurrency control queue (3) where it requests access to concurrent resources. If the request is granted, the transaction acquires database data from the object queue (4), where it may acquire one or more objects. in succession (including some processing time). If the access request determines the transaction must block, it is placed in the blocked queue (5). If the transaction requires a restart, it is placed back in the ready queue (2) after some delay (6).

Eventually, the transaction will proceed without conflict and will updates will from that transaction will be committed and written to the database through an update queue (7).

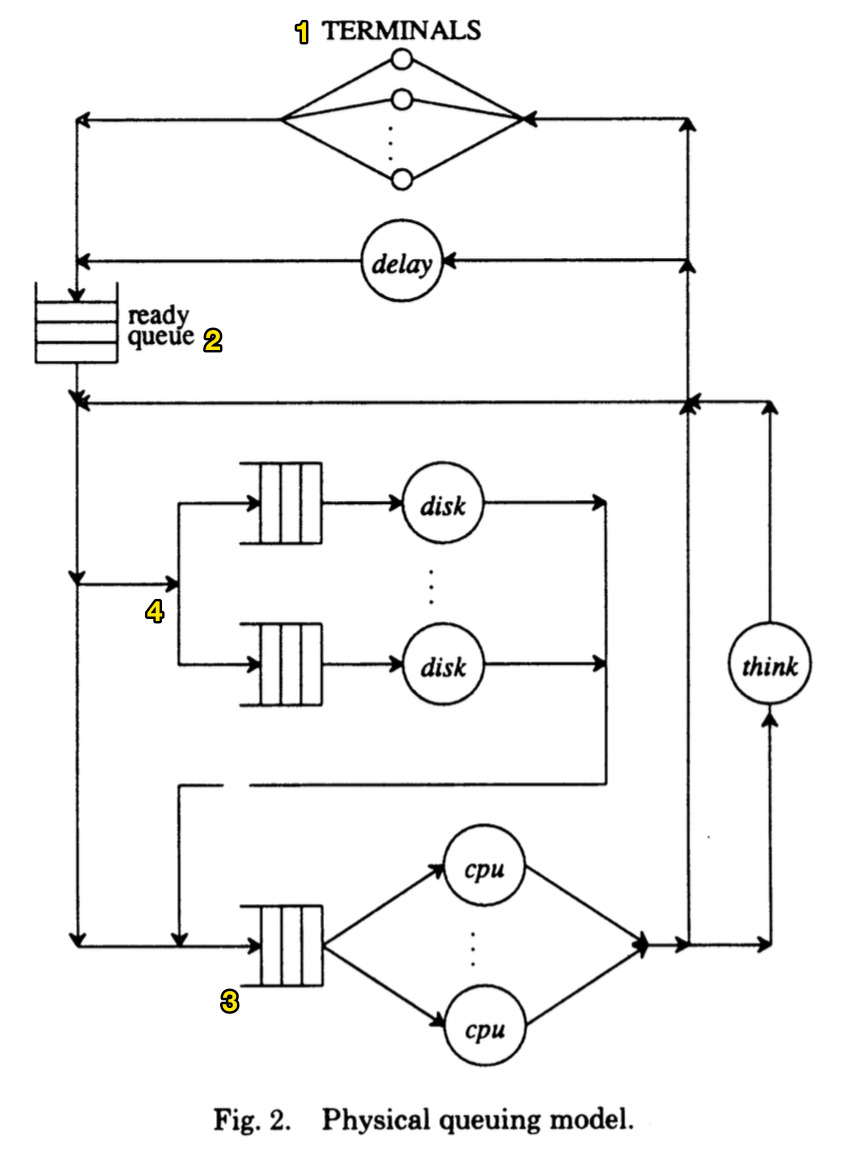

In addition to the logical model of transactions, the paper develops a physical model of CPU and disk resources reproduced in Figure 2.

The database is physically modelled as a collection of terminals (1) where transactions are entered. Each transaction is placed in a ready queue (2). If a transaction requires CPU access it is assigned to any free CPU (3). Otherwise, it waits for a CPU to become free. The CPU queue can be viewed as a global queue representing all CPU resources. I/O is modelled using a queue for each disk. When a transaction requires I/O, it chooses a disk at random and waits in a queue associated with the chosen disk (4).

Simulation results

Using the model developed, a simulation of each concurrency control algorithm using varying numbers of resources is performed at different concurrency (or multi-programming) levels.

It is shown that, at infinite resource levels, the optimistic method outperforms blocking, while with scarce resources blocking outperforms optimistic. That is, if you expect high resource utilization of your hardware, blocking is a better choice, if you expect low resource utilization, optimistic concurrency is a better choice. Ultimately, there is no one-size-fits-all solution and different concurrency models are better for different workloads and environments.