AWS provides four different storage options for your Kubernetes cluster: EBS, EFS, FSx for Lustre, and Amazon File Cache. Each of these CSI drivers has different performance characteristics, depending on your workload. This post quantifies those performance differences using fio, the Flexible I/O Tester.

Note: For an overview of the different CSI options available on AWS, see Picking the right AWS CSI driver for your Kubernetes application.

Before we start, please note that these results come with multiple caveats, and you should absolutely test performance on your own workloads before making any final decisions. For example, a workload with many random reads is typically latency sensitive and benefits from higher IOPS, whereas a workload consisting mostly of streaming reads is typically throughput sensitive and benefits from higher bandwidth at large I/O sizes, even with relatively lower IOPS.
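To make the IOPS versus bandwidth distinction concrete: throughput is roughly IOPS multiplied by the I/O size, so a device can post high IOPS yet modest bandwidth when the blocks are small. The sketch below is only an illustration. The helper name `bw_mib_s` and the sample numbers are assumptions, not measurements from this post.

```shell
#!/usr/bin/env sh
# Approximate bandwidth in MiB/s from IOPS and block size in KiB.
# bw_mib_s is a hypothetical helper, not part of fio.
bw_mib_s() {
  awk -v iops="$1" -v bs_kib="$2" 'BEGIN { printf "%.1f\n", iops * bs_kib / 1024 }'
}

bw_mib_s 10000 4      # many small random I/Os: 10000 x 4 KiB
bw_mib_s 1000 1024    # fewer large streaming I/Os: 1000 x 1 MiB
```

The second call moves far more data per second despite a tenth of the IOPS, which is why streaming workloads care more about bandwidth than operation count.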

With that said, let’s get on with the test!

Cluster Setup

To run this test, I created an EKS cluster using the eksctl tool. Make sure to set up IAM roles for service accounts. This capability sets up an admission controller in EKS that injects AWS session credentials into Pods so that they can access AWS services. I used this to grant the CSI drivers access to the correct AWS services during the test.

After provisioning an EKS cluster, I installed each of the CSI drivers according to the documentation provided. Mostly this involved creating the correct service accounts and installing the driver using either a Helm chart or Kubernetes manifest.

Because Amazon File Cache uses Lustre under the hood, I did not test it separately and compared the remaining drivers: EBS (with gp3 and io2 volumes), EFS, and FSx for Lustre.

Test Setup

I set a storage class for each CSI driver to specify the storage type to provision for the test.

EBS Storage Class

I created two EBS storage classes: one for provisioning io2 volumes and another for provisioning gp3 volumes. Both storage classes were configured to use 50 IOPS per GB of storage.
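As a quick piece of arithmetic, the IOPS requested for a volume is the per-GB rate multiplied by the volume size. The sketch below assumes the 60Gi claim used later in this post and a hypothetical helper name, `provisioned_iops`. It does not model the per-volume-type minimums and maximums that EBS enforces.

```shell
#!/usr/bin/env sh
# Requested IOPS = iopsPerGB x volume size in GiB.
# provisioned_iops is a hypothetical helper; EBS limits are not modeled.
provisioned_iops() {
  echo $(( $1 * $2 ))
}

provisioned_iops 50 60   # iopsPerGB from the storage class, size from the PVC
```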

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: aws-csi-driver-benchmark-ebs-io2-sc # can change io2 to gp3
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: io2 # can change io2 to gp3
  iopsPerGB: "50"
  encrypted: "true"

EFS Storage Class

For EFS, I manually created an Elastic File System and recorded the file system identifier.

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: aws-csi-driver-benchmark-efs-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: <your-file-system-id-here>
  directoryPerms: "700"
  basePath: "/dynamic_provisioner" # optional
  subPathPattern: "${.PVC.namespace}/${.PVC.name}" # optional

FSx Storage Class

Lastly, for FSx for Lustre, I used the SCRATCH_1 deployment type with SSD storage.

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: aws-csi-driver-benchmark-fsx-sc
provisioner: fsx.csi.aws.com
parameters:
  subnetId: <lustre-subnet-id>
  securityGroupIds: <lustre-security-groups>
  deploymentType: SCRATCH_1
  storageType: SSD

Then, I configured a Kubernetes Job that mounts a PersistentVolumeClaim, changing the claim's storageClassName to test each storage class in turn.

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: aws-csi-driver-benchmark-pvc
spec:
  storageClassName: aws-csi-driver-benchmark-efs-sc
  # storageClassName: aws-csi-driver-benchmark-ebs-gp3-sc
  # storageClassName: aws-csi-driver-benchmark-ebs-io2-sc
  # storageClassName: aws-csi-driver-benchmark-fsx-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 60Gi
---
apiVersion: batch/v1
kind: Job
metadata:
  name: aws-csi-driver-benchmark-job
spec:
  template:
    spec:
      containers:
        - name: aws-csi-driver-benchmark
          image: <aws-account>.dkr.ecr.<aws-region>.amazonaws.com/sookocheff/benchmark:main
          imagePullPolicy: Always
          env:
            - name: MOUNTPOINT
              value: /data
          volumeMounts:
            - name: aws-csi-driver-benchmark-pv
              mountPath: /data
      restartPolicy: Never
      volumes:
        - name: aws-csi-driver-benchmark-pv
          persistentVolumeClaim:
            claimName: aws-csi-driver-benchmark-pvc
  backoffLimit: 4
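Once the Job completes, the numbers of interest sit at the end of the Pod's log, where the benchmark script prints a summary banner. As a rough sketch, the hypothetical helper below (`extract_summary` is an illustrative name, not part of kubectl or DBench) keeps only the lines from that banner onward. It assumes the banner line contains the text `Benchmark Summary`, and the sample log lines are stand-ins rather than real output.

```shell
#!/usr/bin/env sh
# Print everything from the "Benchmark Summary" banner to the end of the log.
# extract_summary is a hypothetical helper that reads log text on stdin.
extract_summary() {
  sed -n '/Benchmark Summary/,$p'
}

# Example with a stand-in log; real input would come from the Job's Pod.
printf '%s\n' 'Testing Read IOPS...' '= Benchmark Summary =' 'Sequential Read/Write: 1 / 2' | extract_summary
```

With a cluster available, the same filter could be applied to the output of `kubectl logs job/aws-csi-driver-benchmark-job` after `kubectl wait --for=condition=complete job/aws-csi-driver-benchmark-job` returns.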

The container image was adapted from the DBench project. Its entrypoint runs the following script when the Pod starts.

#!/usr/bin/env sh
set -e

# Fall back to defaults for any setting that is unset or empty.
MOUNTPOINT="${MOUNTPOINT:-/tmp}"
FIO_SIZE="${FIO_SIZE:-2G}"
FIO_OFFSET_INCREMENT="${FIO_OFFSET_INCREMENT:-500M}"
FIO_DIRECT="${FIO_DIRECT:-0}"

echo "Working dir: $MOUNTPOINT"
echo

# Random 4K reads at queue depth 64.
echo "Testing Read IOPS..."
READ_IOPS=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=read_iops --filename="$MOUNTPOINT/fiotest" --bs=4K --iodepth=64 --size="$FIO_SIZE" --readwrite=randread --time_based --ramp_time=2s --runtime=15s)
echo "$READ_IOPS"
READ_IOPS_VAL=$(echo "$READ_IOPS"|grep -E 'read ?:'|grep -Eoi 'IOPS=[0-9k.]+'|cut -d'=' -f2)
echo
echo

# Random 4K writes at queue depth 64.
echo "Testing Write IOPS..."
WRITE_IOPS=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=write_iops --filename="$MOUNTPOINT/fiotest" --bs=4K --iodepth=64 --size="$FIO_SIZE" --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s)
echo "$WRITE_IOPS"
WRITE_IOPS_VAL=$(echo "$WRITE_IOPS"|grep -E 'write:'|grep -Eoi 'IOPS=[0-9k.]+'|cut -d'=' -f2)
echo
echo

# Random 128K reads at queue depth 64.
echo "Testing Read Bandwidth..."
READ_BW=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=read_bw --filename="$MOUNTPOINT/fiotest" --bs=128K --iodepth=64 --size="$FIO_SIZE" --readwrite=randread --time_based --ramp_time=2s --runtime=15s)
echo "$READ_BW"
READ_BW_VAL=$(echo "$READ_BW"|grep -E 'read ?:'|grep -Eoi 'BW=[0-9GMKiBs/.]+'|cut -d'=' -f2)
echo
echo

# Random 128K writes at queue depth 64.
echo "Testing Write Bandwidth..."
WRITE_BW=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=write_bw --filename="$MOUNTPOINT/fiotest" --bs=128K --iodepth=64 --size="$FIO_SIZE" --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s)
echo "$WRITE_BW"
WRITE_BW_VAL=$(echo "$WRITE_BW"|grep -E 'write:'|grep -Eoi 'BW=[0-9GMKiBs/.]+'|cut -d'=' -f2)
echo
echo

# Random 4K reads at queue depth 4; the average latency is extracted.
echo "Testing Read Latency..."
READ_LATENCY=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --name=read_latency --filename="$MOUNTPOINT/fiotest" --bs=4K --iodepth=4 --size="$FIO_SIZE" --readwrite=randread --time_based --ramp_time=2s --runtime=15s)
echo "$READ_LATENCY"
READ_LATENCY_VAL=$(echo "$READ_LATENCY"|grep ' lat.*avg'|grep -Eoi 'avg=[0-9.]+'|cut -d'=' -f2)
echo
echo

# Random 4K writes at queue depth 4; the average latency is extracted.
echo "Testing Write Latency..."
WRITE_LATENCY=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --name=write_latency --filename="$MOUNTPOINT/fiotest" --bs=4K --iodepth=4 --size="$FIO_SIZE" --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s)
echo "$WRITE_LATENCY"
WRITE_LATENCY_VAL=$(echo "$WRITE_LATENCY"|grep ' lat.*avg'|grep -Eoi 'avg=[0-9.]+'|cut -d'=' -f2)
echo
echo

# Sequential 1M reads from four threads, each starting at its own offset.
echo "Testing Read Sequential Speed..."
READ_SEQ=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=read_seq --filename="$MOUNTPOINT/fiotest" --bs=1M --iodepth=16 --size="$FIO_SIZE" --readwrite=read --time_based --ramp_time=2s --runtime=15s --thread --numjobs=4 --offset_increment="$FIO_OFFSET_INCREMENT")
echo "$READ_SEQ"
READ_SEQ_VAL=$(echo "$READ_SEQ"|grep -E 'READ:'|grep -Eoi '(aggrb|bw)=[0-9GMKiBs/.]+'|cut -d'=' -f2)
echo
echo

# Sequential 1M writes from four threads, each starting at its own offset.
echo "Testing Write Sequential Speed..."
WRITE_SEQ=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=write_seq --filename="$MOUNTPOINT/fiotest" --bs=1M --iodepth=16 --size="$FIO_SIZE" --readwrite=write --time_based --ramp_time=2s --runtime=15s --thread --numjobs=4 --offset_increment="$FIO_OFFSET_INCREMENT")
echo "$WRITE_SEQ"
WRITE_SEQ_VAL=$(echo "$WRITE_SEQ"|grep -E 'WRITE:'|grep -Eoi '(aggrb|bw)=[0-9GMKiBs/.]+'|cut -d'=' -f2)
echo
echo

# Mixed random 4K I/O, 75% reads and 25% writes.
echo "Testing Read/Write Mixed..."
RW_MIX=$(fio --randrepeat=0 --verify=0 --ioengine=libaio --direct="$FIO_DIRECT" --gtod_reduce=1 --name=rw_mix --filename="$MOUNTPOINT/fiotest" --bs=4k --iodepth=64 --size="$FIO_SIZE" --readwrite=randrw --rwmixread=75 --time_based --ramp_time=2s --runtime=15s)
echo "$RW_MIX"
RW_MIX_R_IOPS=$(echo "$RW_MIX"|grep -E 'read ?:'|grep -Eoi 'IOPS=[0-9k.]+'|cut -d'=' -f2)
RW_MIX_W_IOPS=$(echo "$RW_MIX"|grep -E 'write:'|grep -Eoi 'IOPS=[0-9k.]+'|cut -d'=' -f2)
echo
echo

echo "All tests complete."
echo
echo "=================="
echo "= Benchmark Summary ="
echo "=================="
echo "Random Read/Write IOPS: $READ_IOPS_VAL/$WRITE_IOPS_VAL. BW: $READ_BW_VAL / $WRITE_BW_VAL"
echo "Average Latency (usec) Read/Write: $READ_LATENCY_VAL/$WRITE_LATENCY_VAL"
echo "Sequential Read/Write: $READ_SEQ_VAL / $WRITE_SEQ_VAL"
echo "Mixed Random Read/Write IOPS: $RW_MIX_R_IOPS/$RW_MIX_W_IOPS"

# Remove the test file so it does not linger on the volume.
rm "$MOUNTPOINT/fiotest"
exit 0
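The script's `grep` patterns capture IOPS values exactly as fio prints them, which can include a `k` suffix (for example `16.4k`). When comparing runs numerically, it can help to normalize these to plain integers. The helper below is a sketch with a hypothetical name, `normalize_iops`; it is not part of fio or DBench and only handles the `k` suffix.

```shell
#!/usr/bin/env sh
# Convert an fio IOPS value such as "16.4k" or "1317" to a plain integer.
# normalize_iops is a hypothetical helper, not part of fio or DBench.
normalize_iops() {
  echo "$1" | awk '{
    v = $1
    if (v ~ /k$/) { sub(/k$/, "", v); v = v * 1000 }
    printf "%d\n", v + 0.5   # round to the nearest whole operation
  }'
}

normalize_iops 16.4k
normalize_iops 1317
```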

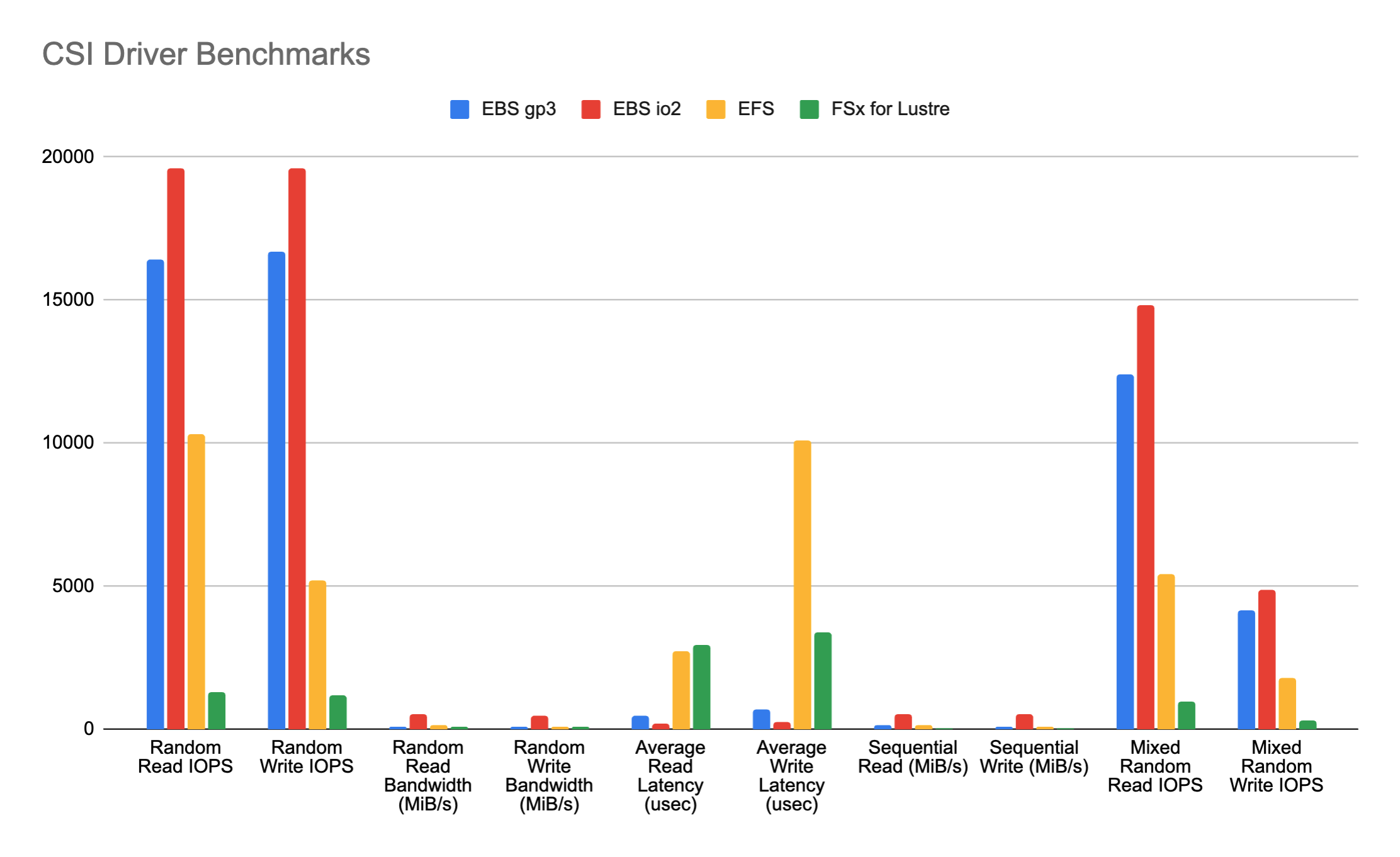

Results

As expected, EBS volumes offer the highest performance, with io2 offering the best bandwidth, the lowest latency, and the highest IOPS.

More interesting is the difference between AWS FSx for Lustre and Elastic File System (EFS). EFS bandwidth matched or exceeded that of FSx in these tests. EFS also has better IOPS performance, but much higher write latency than FSx, while read latency is similar.

The results appear to show that, depending on your workload, you will get similar or better performance using EFS than using FSx for Lustre.

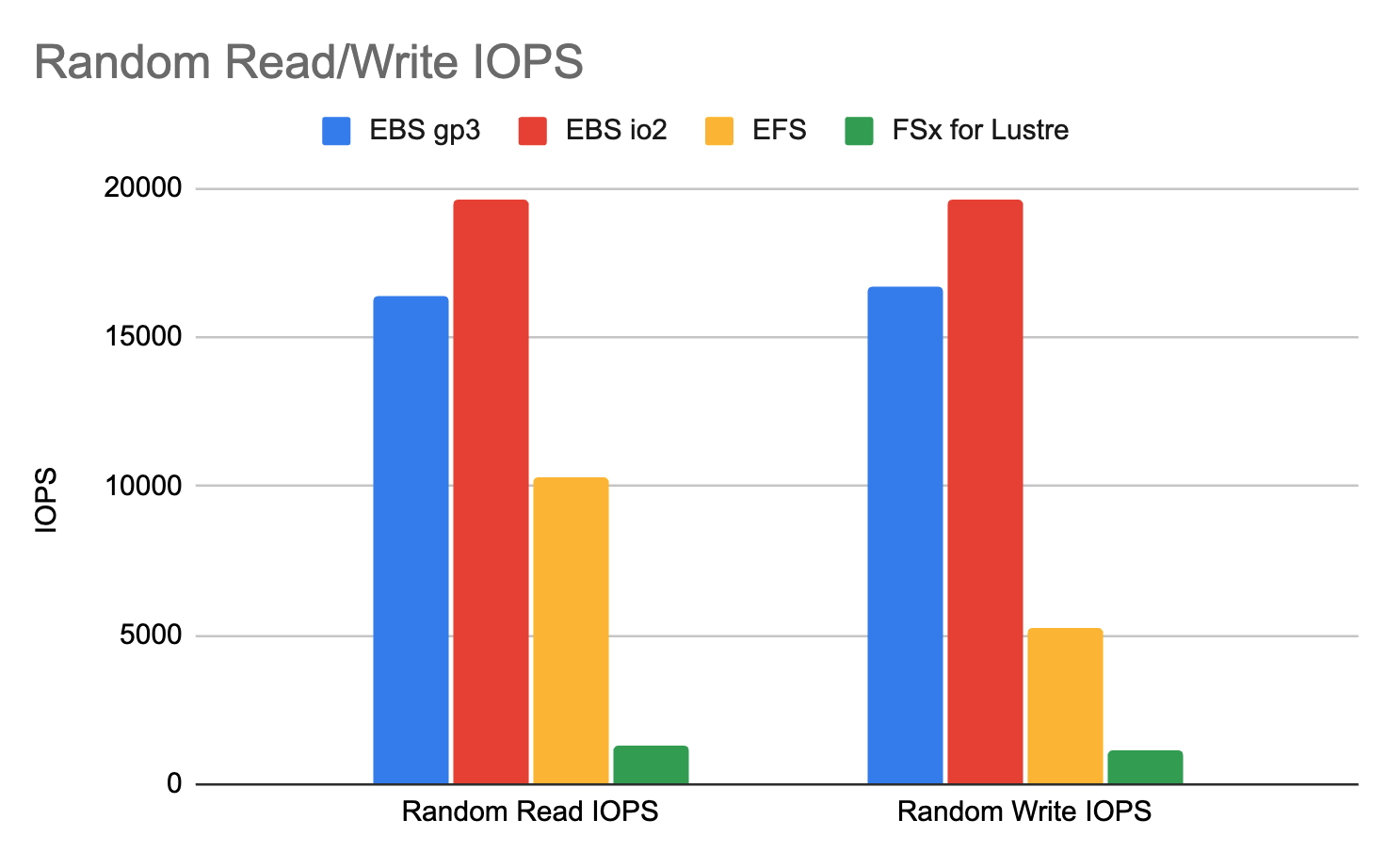

Random Read/Write IOPS

Random read IOPS was tested using the following configuration:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=read_iops --filename=$MOUNTPOINT/fiotest --bs=4K --iodepth=64 --size=$FIO_SIZE --readwrite=randread --time_based --ramp_time=2s --runtime=15s

Random write IOPS was tested with the following:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=write_iops --filename=$MOUNTPOINT/fiotest --bs=4K --iodepth=64 --size=$FIO_SIZE --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s

EBS consistently has the highest IOPS performance, followed by EFS and FSx.

| Driver | Random Read (IOPS) | Random Write (IOPS) |
|---|---|---|
| EBS gp3 | 16400 | 16700 |
| EBS io2 | 19600 | 19600 |
| EFS | 10300 | 5220 |
| FSx for Lustre | 1317 | 1179 |

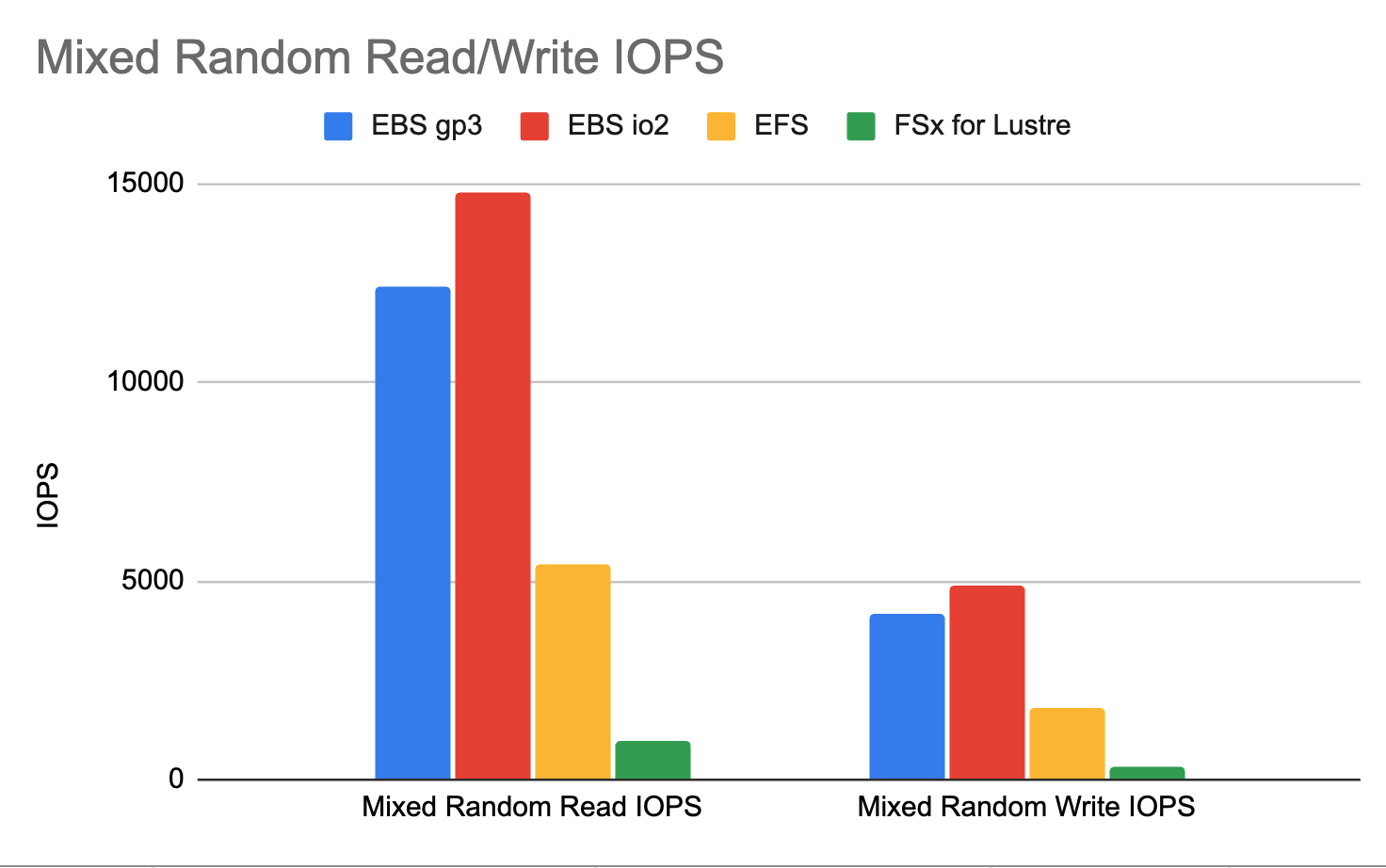

Mixed Random Read/Write IOPS

Mixed random read/write IOPS was measured with:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=rw_mix --filename=$MOUNTPOINT/fiotest --bs=4k --iodepth=64 --size=$FIO_SIZE --readwrite=randrw --rwmixread=75 --time_based --ramp_time=2s --runtime=15s

| Driver | Mixed Random Read (IOPS) | Mixed Random Write (IOPS) |
|---|---|---|
| EBS gp3 | 12400 | 4175 |
| EBS io2 | 14800 | 4885 |
| EFS | 5419 | 1785 |
| FSx for Lustre | 951 | 325 |
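Because the test ran with `--rwmixread=75`, roughly three quarters of the operations in each row should be reads. A quick sanity check on the table can be sketched as follows; `read_share` is a hypothetical helper name, and the inputs are the read and write IOPS from the rows above.

```shell
#!/usr/bin/env sh
# Percentage of operations that were reads, given read and write IOPS.
# read_share is a hypothetical helper used only for this sanity check.
read_share() {
  awk -v r="$1" -v w="$2" 'BEGIN { printf "%.1f\n", 100 * r / (r + w) }'
}

read_share 12400 4175   # EBS gp3 row
read_share 5419 1785    # EFS row
```

Both rows land close to 75%, which suggests the measured mix matches the requested one.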

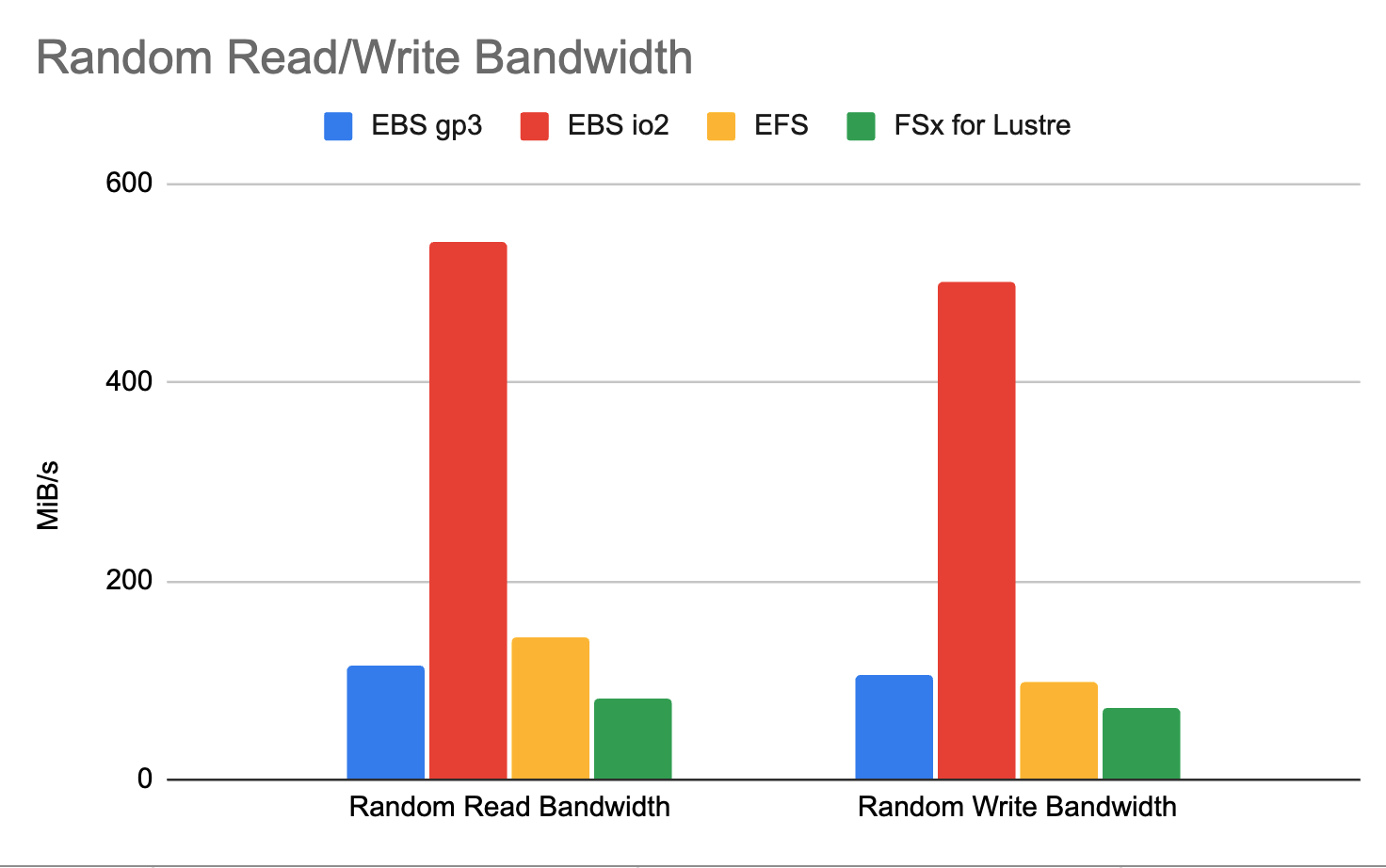

Random Read/Write Bandwidth

Random read/write bandwidth was tested using the following configuration:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=read_bw --filename=$MOUNTPOINT/fiotest --bs=128K --iodepth=64 --size=$FIO_SIZE --readwrite=randread --time_based --ramp_time=2s --runtime=15s

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=write_bw --filename=$MOUNTPOINT/fiotest --bs=128K --iodepth=64 --size=$FIO_SIZE --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s

EFS and EBS with gp3 volumes perform similarly, with EBS io2 volumes significantly faster.

| Driver | Random Read (MiB/s) | Random Write (MiB/s) |
|---|---|---|
| EBS gp3 | 114 | 106 |
| EBS io2 | 542 | 501 |
| EFS | 144 | 99.3 |
| FSx for Lustre | 81.817 | 71.647 |

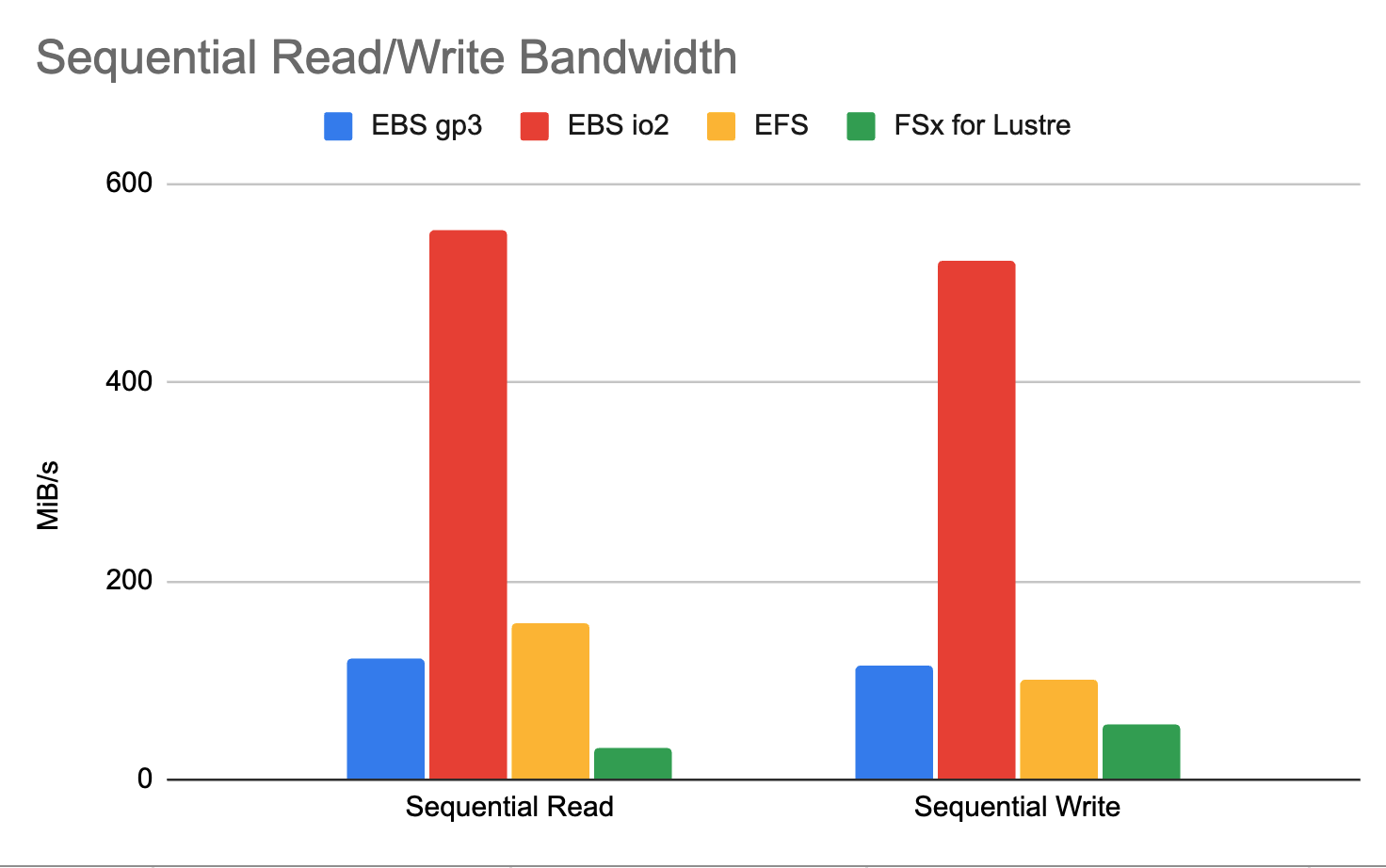

Sequential Read/Write Bandwidth

Sequential read was tested with the following configuration.

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=read_seq --filename=$MOUNTPOINT/fiotest --bs=1M --iodepth=16 --size=$FIO_SIZE --readwrite=read --time_based --ramp_time=2s --runtime=15s --thread --numjobs=4 --offset_increment=$FIO_OFFSET_INCREMENT

And sequential write with:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=write_seq --filename=$MOUNTPOINT/fiotest --bs=1M --iodepth=16 --size=$FIO_SIZE --readwrite=write --time_based --ramp_time=2s --runtime=15s --thread --numjobs=4 --offset_increment=$FIO_OFFSET_INCREMENT

The results show that EBS io2 volumes have substantially higher bandwidth than other options.

| Driver | Sequential Read (MiB/s) | Sequential Write (MiB/s) |
|---|---|---|
| EBS gp3 | 121 | 115 |
| EBS io2 | 554 | 523 |
| EFS | 157 | 101 |
| FSx for Lustre | 30.919 | 55.0742 |
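The sequential tests run four threads against the same file. Going by fio's documented behavior for `offset_increment` (each job starts at its job index multiplied by the increment), the sketch below prints where each thread would begin, assuming the script's default increment of 500M. The helper name `job_offsets` is hypothetical.

```shell
#!/usr/bin/env sh
# Print the starting offset of each fio job for a given job count and
# offset increment in MiB. job_offsets is a hypothetical helper.
job_offsets() {
  i=0
  while [ "$i" -lt "$1" ]; do
    echo "job $i starts at $(( i * $2 ))M"
    i=$(( i + 1 ))
  done
}

job_offsets 4 500   # numjobs=4, offset_increment=500M
```

Spreading the starting points this way keeps the four threads from reading or writing the same region of the file at the same time.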

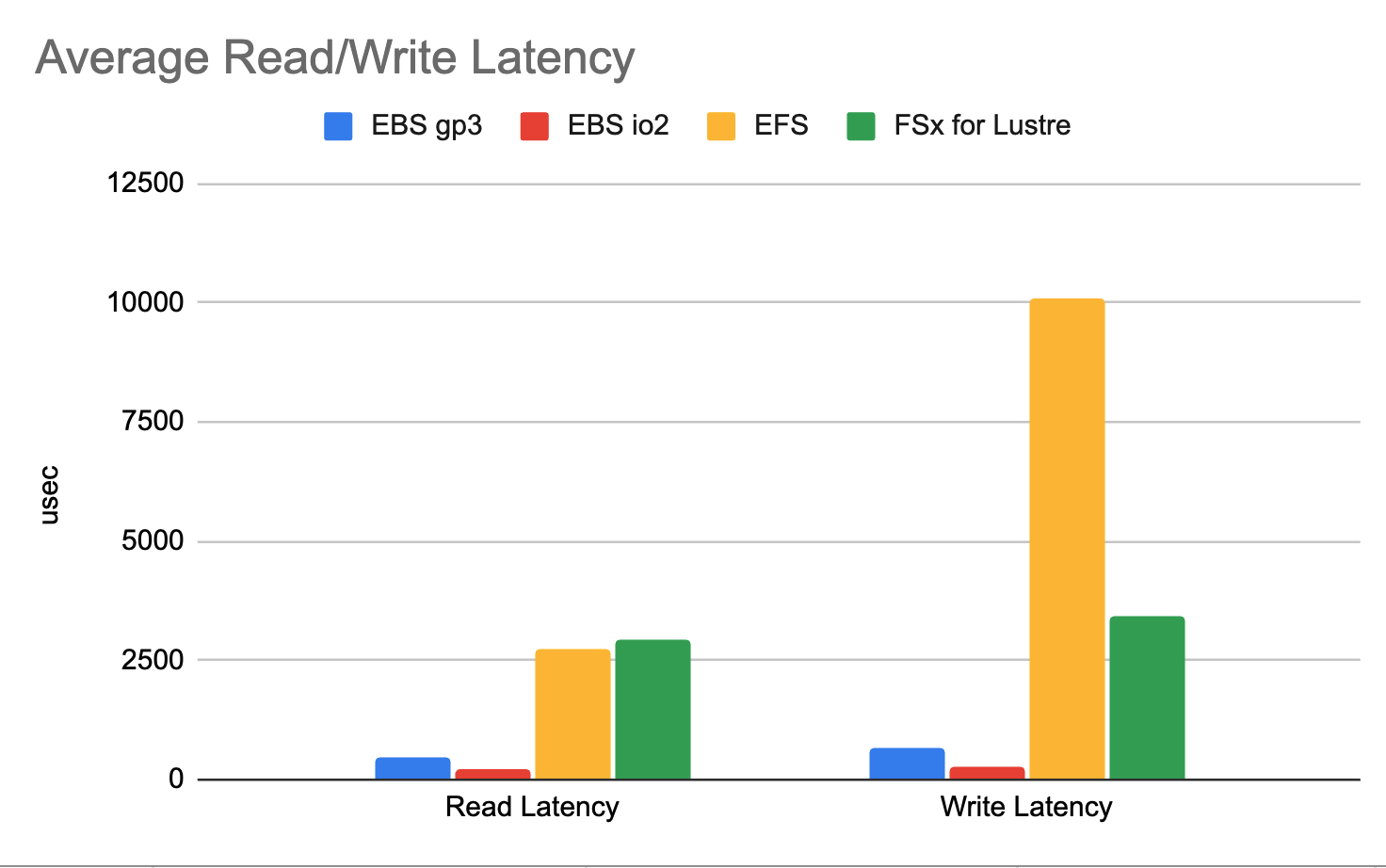

Read/Write Latency

Read latency was tested with:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --name=read_latency --filename=$MOUNTPOINT/fiotest --bs=4K --iodepth=4 --size=$FIO_SIZE --readwrite=randread --time_based --ramp_time=2s --runtime=15s

And write latency with:

fio --randrepeat=0 --verify=0 --ioengine=libaio --direct=1 --name=write_latency --filename=$MOUNTPOINT/fiotest --bs=4K --iodepth=4 --size=$FIO_SIZE --readwrite=randwrite --time_based --ramp_time=2s --runtime=15s

EBS has significantly lower latency than both EFS and FSx, which makes sense because EFS and FSx both require more network hops to access data.

| Driver | Average Read Latency (usec) | Average Write Latency (usec) |
|---|---|---|
| EBS gp3 | 478.42 | 680.48 |
| EBS io2 | 207.72 | 261.23 |
| EFS | 2736.43 | 10118.31 |
| FSx for Lustre | 2945.06 | 3412.69 |
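Latency and IOPS are linked through queue depth. By Little's law, sustained throughput is roughly the number of outstanding I/Os divided by the average latency. The sketch below applies that to the latency test's `--iodepth=4`. It is an approximation, assuming the queue stays full and the reported average covers the whole I/O; `implied_iops` is a hypothetical helper name.

```shell
#!/usr/bin/env sh
# Little's law: throughput = outstanding I/Os / average latency.
# implied_iops is a hypothetical helper; arguments are the queue depth
# and the average latency in microseconds.
implied_iops() {
  awk -v depth="$1" -v lat_usec="$2" 'BEGIN { printf "%.0f\n", depth * 1000000 / lat_usec }'
}

implied_iops 4 478.42     # EBS gp3 read latency from the table
implied_iops 4 10118.31   # EFS write latency from the table
```

These figures describe what a queue depth of 4 could sustain at the measured latency. They are not directly comparable to the IOPS tests, which ran at a queue depth of 64.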