Note: To make this easier to read (and write), h1 may be used in place of HTTP/1, and h2 may be used in place of HTTP/2.

HTTP/1 has a long and storied history. Originally documented as a sixty-page specification in RFC 1945, it was designed to handle text-based pages that use hypermedia to link documents to each other. Typical web pages contained kilobytes of data; the first web page, for example, was a simple text file with links to other text documents. Today, the web is made up of media-rich sites containing images, scripts, stylesheets, fonts, and more. The size of a typical web page is measured in megabytes rather than kilobytes, and assembling a full page can take over one hundred requests. The way web pages are built today no longer matches what HTTP/1 was designed to support.

Problems with HTTP/1

The web was built on h1, but h1 is not well-suited to today's web, where pages contain more bytes, more objects, and more complexity, with no end in sight. Pushing h1 to the edges of its design has given us significant performance and usability problems. Unfortunately, some flaws in h1 cannot be worked around, and they continue to plague web development today.

Head of line blocking

In h1, the protocol allows a client to send a request while it is still waiting for the response to a previous request. Called “pipelining”, this would allow browsers to request a web page, along with any CSS or JavaScript required to render the page, all at once. The server can begin processing these requests all at once. However, HTTP/1’s request/response workflow requires it to send the responses one at a time, in the order the requests were sent, so one slow response blocks every response queued behind it. This is called “head of line blocking”. One workaround is to open more than one TCP connection to the server, which is why browsers today typically allow six connections to the same domain. This workaround addresses the symptom, but does not solve the problem.
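To make the ordering constraint concrete, here is a small, hypothetical model (the function names are illustrative, not from any real client). Each pipelined response becomes ready on the server at some time. Under h1 pipelining, a response can only be delivered after every earlier response has been delivered. The model deliberately ignores transmission time.

```python
from itertools import accumulate


def pipelined_delivery_times(ready_times):
    """Model h1 pipelining: response i cannot be delivered before response i-1.

    ready_times[i] is when the server finishes producing response i (in
    request order). A response's delivery time is the latest ready time
    among itself and all earlier responses.
    """
    return list(accumulate(ready_times, max))


def multiplexed_delivery_times(ready_times):
    """Model an ideal multiplexed connection: each response goes out when ready."""
    return list(ready_times)


# Hypothetical timings in ms: a slow HTML page (300 ms), then CSS and
# JavaScript that are ready after 20 ms and 40 ms.
ready = [300, 20, 40]
print(pipelined_delivery_times(ready))    # [300, 300, 300]
print(multiplexed_delivery_times(ready))  # [300, 20, 40]
```

The CSS and JavaScript are ready almost immediately, yet pipelining holds them behind the slow first response.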

Inefficient use of TCP/IP

TCP is designed to be reliable over poor network connections. Part of this design is the TCP “congestion window”, which limits how many packets can be in flight before they are acknowledged as received. For example, if the congestion window is one packet, the sender sends one packet, waits for its acknowledgment, and only then sends the next. To increase throughput, TCP grows the window by one packet for every acknowledged packet, roughly doubling it each round trip, until it hits a threshold or detects packet loss. This ramp-up is called “slow start”, and every new TCP connection must go through it before operating at peak performance. If we try to scale HTTP to handle the many resources of a typical web page by opening more TCP connections, each connection suffers through slow start separately. This problem is exacerbated by distributing web pages over multiple domains, each of which needs its own TCP connections. With many web pages needing over 100 HTTP requests to render, this inefficiency becomes significant at web scale.
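The cost of slow start can be estimated with a deliberately idealized model. This is a sketch under stated assumptions, not how any real TCP stack behaves: there is no packet loss, no slow-start threshold, and no receiver-window limit. Each acknowledged segment grows the window by one segment. The function name `slow_start_round_trips` is hypothetical.

```python
def slow_start_round_trips(total_segments, initial_window=1):
    """Count round trips needed to send total_segments under idealized slow start.

    Assumptions: no loss, no slow-start threshold, no receiver-window limit.
    Every acknowledged segment grows the window by one segment, so the
    window doubles each round trip.
    """
    cwnd = initial_window
    sent = 0
    round_trips = 0
    while sent < total_segments:
        burst = min(cwnd, total_segments - sent)  # up to cwnd unacknowledged segments
        sent += burst
        cwnd += burst  # one extra segment of window per ACK received
        round_trips += 1
    return round_trips


print(slow_start_round_trips(100))                     # 7
print(slow_start_round_trips(100, initial_window=10))  # 4
```

Every fresh connection starts this ramp from the beginning. That is why spreading a page over many new connections wastes round trips compared with one connection that has already warmed up.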

Header data duplication

Each HTTP request includes a set of HTTP headers. These headers are plain-text strings and can include cookies (which can be quite large). Because HTTP is stateless, each request must carry the entire set of HTTP headers, even when they are identical to those of the previous request. Over the course of a user’s session on a site, this results in a lot of duplicated data. Workarounds include serving images from a cookie-less domain, which increases the complexity of development and deployment.

The HTTP/2 Solution

HTTP/2 is designed to address these deficiencies of HTTP/1. It multiplexes many HTTP requests over a single TCP/IP connection at the same time, which solves head of line blocking at the HTTP level. TCP itself still delivers bytes in order, so a single lost packet can briefly stall every stream on the connection. Reusing one TCP/IP connection for many requests also limits the overhead of creating and destroying many connections. HTTP/2 uses header compression both to limit the amount of duplicate data sent in HTTP headers and to compress the header data that is sent. Together, these design choices provide significant performance benefits. They also make life easier for developers by reducing the number of workarounds and kludges needed to build high-performance websites.

An Overview of the HTTP/2 Protocol

At the core of all performance enhancements of HTTP/2 is the new binary framing layer, which dictates how the HTTP messages are encapsulated and transferred between the client and server.

Whereas h1 is text delimited, h2 is framed. This means that a message is divided into a number of discrete frames, and the size of each frame is encoded in the frame itself. The following diagram, from Introduction to HTTP/2, shows where h2 sits on the networking stack, and shows how an h1 request is related to an h2 HEADERS frame and DATA frame.

h2 is an application-level protocol that typically operates over a TLS connection, which is in turn built on top of the TCP/IP networking stack. With h1, a TCP/IP connection handles one request/response exchange at a time, so browsers open several connections to fetch resources in parallel. In contrast, h2 uses the same TCP/IP connection for multiple requests. These requests can also be multiplexed, so that a single TCP/IP connection processes many separate HTTP requests concurrently. In the following figure, from Introduction to HTTP/2, we see how a single TCP/IP connection is divided into multiple streams, where each stream contains an HTTP request message and an HTTP response message. These messages can contain one or more frames.

In summary, h2 is made up of the following terms:

- Stream: A bidirectional flow of bytes within a TCP/IP connection. A stream may carry one or more messages.

- Message: A sequence of frames that create an HTTP request or response.

- Frame: The actual data being sent, along with the stream it belongs to.

The rest of this article will map these terms to the h2 implementation.

Establishing an HTTP/2 Connection

HTTP/2 (h2), like HTTP/1, is an application-layer protocol that runs on top of a TCP connection. It uses the same “http://” and “https://” URI schemes as HTTP/1. Because h2 uses the same schemes as h1, it is easy for applications to start using h2 with minimal changes. It also means that clients and servers must negotiate which protocol to use before sending h2 data over the wire.

The h2 specification allows HTTP/2 to be used over an unsecured “http://” scheme, but browsers have not implemented this (and most do not plan to). I will therefore focus on negotiating HTTP/2 over a secure TLS connection. Conveniently, TLS already provides a mechanism for negotiating the protocol used by a connection: the Application-Layer Protocol Negotiation (ALPN) extension. ALPN is a TLS extension built specifically for negotiating the application-layer protocol over a TLS connection.

ALPN allows the application layer to negotiate which protocol should be performed over a secure connection in a manner that avoids additional round trips and which is independent of the application layer protocols.

— Application-Layer Protocol Negotiation, Wikipedia

ALPN carries out protocol negotiation within the TLS handshake itself. The first step of establishing a TLS connection is to exchange what are called “hello messages”, which allow the client and server to “agree on algorithms, exchange random values, and check for session resumption”. With ALPN, the client includes a list of supported protocols in its hello message. The server selects a protocol from this list and returns it in its own hello message. The canonical source for ALPN is RFC 7301.
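The server’s choice can be modeled in a few lines. RFC 7301 has the server pick its most preferred protocol that the client also advertised. The helper `select_alpn_protocol` below is a hypothetical, simplified model of that rule, not part of any TLS library. `make_h2_client_context` shows how a Python client offers h2 first, using the standard library’s real `ssl.SSLContext.set_alpn_protocols` method.

```python
import ssl


def select_alpn_protocol(client_protocols, server_preference):
    """Simplified model of ALPN selection (RFC 7301).

    The server walks its own preference list and picks the first protocol the
    client also advertised. None means there is no overlap; RFC 7301 then has
    the server abort the handshake with a no_application_protocol alert.
    """
    for protocol in server_preference:
        if protocol in client_protocols:
            return protocol
    return None


def make_h2_client_context():
    """Build a client TLS context that offers h2 first, then HTTP/1.1."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    return context
```

For a real connection, you would wrap a socket with `context.wrap_socket(sock, server_hostname=host)`. After the handshake, `selected_alpn_protocol()` on the wrapped socket returns `"h2"` if the server agreed to HTTP/2.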

In addition to agreeing that h2 is the protocol for the TLS connection, the client and the server must each send a pre-defined “connection preface”. The preface serves as a final confirmation of the protocol and establishes any initial settings for the h2 connection. The client begins by sending the string PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n as the first data of the TLS connection, and must follow this string with an h2 SETTINGS frame. The server responds with a SETTINGS frame of its own. From this point forward, a valid HTTP/2 connection is established. It’s worth noting that the client can send data immediately after its connection preface, without waiting for the server, to avoid latency. This data may turn out to be invalid if the client and server cannot complete the connection.

In summary, establishing an HTTP/2 connection requires:

- Establishing a TLS connection

- Negotiating h2 as the protocol for the TLS connection using ALPN

- The client sending (and server receiving) an h2 connection preface and SETTINGS frame

- The server sending (and client receiving) an h2 SETTINGS frame.
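The bytes exchanged in the steps above can be sketched as follows, assuming the frame layout from RFC 7540. The 24-byte preface string is fixed by the specification. A SETTINGS frame is type 0x4 on stream 0, and each setting in it is a 16-bit identifier followed by a 32-bit value. The spec also has each side acknowledge the other’s SETTINGS with an empty SETTINGS frame carrying the ACK flag. The helper name `settings_frame` and the example values are hypothetical.

```python
import struct

# The fixed 24-byte client connection preface (RFC 7540, Section 3.5).
CLIENT_PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

SETTINGS_TYPE = 0x4
ACK_FLAG = 0x1
SETTINGS_MAX_CONCURRENT_STREAMS = 0x3
SETTINGS_INITIAL_WINDOW_SIZE = 0x4


def settings_frame(settings=None, ack=False):
    """Encode a SETTINGS frame: a 9-byte frame header plus 6 bytes per setting.

    SETTINGS always travels on stream 0 because it applies to the whole
    connection. An ACK frame must have an empty payload.
    """
    payload = b"".join(
        struct.pack("!HI", identifier, value)  # 16-bit id, 32-bit value
        for identifier, value in (settings or {}).items()
    )
    flags = ACK_FLAG if ack else 0
    length = len(payload)
    # 24-bit length (split into 8 + 16 bits), type, flags, 32-bit stream id 0.
    header = struct.pack("!BHBBI", length >> 16, length & 0xFFFF, SETTINGS_TYPE, flags, 0)
    return header + payload


# What a client might write first on a fresh TLS connection (values are examples).
client_hello = CLIENT_PREFACE + settings_frame({
    SETTINGS_MAX_CONCURRENT_STREAMS: 100,
    SETTINGS_INITIAL_WINDOW_SIZE: 65_535,
})
```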

HTTP/2 Frames

Whereas h1 is text delimited, h2 is framed. One of the most important features of framing is that it tells the receiver ahead of time how much content to expect. At first glance this may seem like a small improvement, but it vastly simplifies implementations. For example, one big advantage of knowing the frame length is the ability to interleave and multiplex requests and responses. With h1, you must complete each request and response cycle individually, because you don’t know how much additional data is coming for the same request. With h2, knowing the size of each frame lifts this restriction.

An h2 frame has the following format (provided directly from RFC 7540). The number alongside each portion of the frame is the number of bits dedicated to that field.

    +-----------------------------------------------+
    |                 Length (24)                   |
    +---------------+---------------+---------------+
    |   Type (8)    |   Flags (8)   |
    +-+-------------+---------------+-------------------------------+
    |R|                 Stream Identifier (31)                      |
    +-+-------------------------------------------------------------+
    |                   Frame Payload (0...)                      ...
    +---------------------------------------------------------------+

- Length: 24 bits storing the length of the frame payload

- Type: 8 bits storing the type of this frame. There are different frame types that serve different purposes. Encoding the type as part of the frame allows the client and server to understand the semantics of the incoming payload and parse it appropriately.

- Flags: 8 bits storing boolean modifiers that apply to this frame. The available flags are specific to the frame type. For example, END_HEADERS marks the last frame of a header block, and END_STREAM marks the last frame a sender will send on a stream.

- R: 1 bit reserved for future use.

- Stream Identifier: 31 bits identifying the stream this frame belongs to. The value 0 is reserved for frames that apply to the connection as a whole, such as SETTINGS.

- Frame Payload: A variable length field containing the actual payload for this frame. The structure and content of the payload is dependent entirely on the frame type.
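The 9-byte frame header described above can be encoded and decoded with Python’s `struct` module. This is a minimal sketch: the names `FrameHeader`, `encode_frame_header`, `decode_frame_header`, and `split_frames` are hypothetical. Because the 24-bit length has no native struct code, it is packed as one byte followed by a 16-bit word. `split_frames` shows why the length prefix matters: a receiver can cut frames out of a byte stream without understanding their payloads.

```python
import struct
from typing import NamedTuple

FRAME_HEADER_SIZE = 9


class FrameHeader(NamedTuple):
    length: int      # 24-bit payload length
    frame_type: int  # 8-bit type, e.g. 0x0 DATA, 0x1 HEADERS, 0x4 SETTINGS
    flags: int       # 8-bit type-specific flags
    stream_id: int   # 31-bit stream identifier (0 = whole connection)


def encode_frame_header(header: FrameHeader) -> bytes:
    if not 0 <= header.length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= header.stream_id < 2**31:
        raise ValueError("stream identifier must fit in 31 bits")
    # The reserved R bit is left as 0 when sending.
    return struct.pack("!BHBBI", header.length >> 16, header.length & 0xFFFF,
                       header.frame_type, header.flags, header.stream_id)


def decode_frame_header(data: bytes) -> FrameHeader:
    high, low, frame_type, flags, word = struct.unpack("!BHBBI", data[:FRAME_HEADER_SIZE])
    # Mask off the reserved R bit, which receivers must ignore.
    return FrameHeader((high << 16) | low, frame_type, flags, word & 0x7FFFFFFF)


def split_frames(buffer: bytes):
    """Yield (header, payload) pairs for every complete frame in buffer."""
    offset = 0
    while offset + FRAME_HEADER_SIZE <= len(buffer):
        header = decode_frame_header(buffer[offset:offset + FRAME_HEADER_SIZE])
        start = offset + FRAME_HEADER_SIZE
        end = start + header.length
        if end > len(buffer):
            break  # incomplete frame: wait for more bytes
        yield header, buffer[start:end]
        offset = end
```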

Frame Types

There are several different frame types that each serve a specific purpose. This section lists the frame types necessary for an HTTP request/response interaction. The full list of frame types is listed in RFC 7540.

SETTINGS

The SETTINGS frame is used by both clients and servers to specify connection-level parameters that apply to all streams that make up the connection. A common use case for SETTINGS is advertising flow control requirements.

HEADERS

The HEADERS frame is used to open a stream for upcoming data, and serves the additional purpose of carrying HTTP headers. Each request and each response begins with a HEADERS frame, and the headers it carries apply to that message for the lifetime of the stream. Unlike h1, h2 does not need to resend repeated header values in full on every request. Header compression (described below) lets later requests refer back to values already sent on the connection.

DATA

DATA frames encode the application payload data. With h2, DATA frames are used to transmit the HTTP request and response payloads.

Streams

One of the key benefits of h2 is the ability to multiplex multiple requests and responses over the same TCP/IP connection. This is facilitated with streams. Each stream is an independent and bidirectional sequence of frames exchanged between client and server. You can think of a stream as a series of frames that together create an HTTP request/response pair. For each new request made by the client, we initiate a new stream and give it a unique identifier. The server will respond to the stream using the same identifier.
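RFC 7540 (Section 5.1.1) adds a rule for choosing these identifiers. Clients open streams with odd numbers, servers use even numbers, stream 0 is reserved for the connection itself, and identifiers only ever increase on a connection. A minimal allocator following that rule might look like this; the class name `StreamIdAllocator` is hypothetical.

```python
class StreamIdAllocator:
    """Hand out stream identifiers following RFC 7540, Section 5.1.1.

    Clients use odd numbers (1, 3, 5, ...), servers use even numbers
    (2, 4, 6, ...), and stream 0 is reserved for the connection itself.
    Identifiers are never reused on a connection.
    """

    MAX_STREAM_ID = 2**31 - 1  # the identifier is a 31-bit field

    def __init__(self, is_client: bool):
        self._next = 1 if is_client else 2

    def next_id(self) -> int:
        if self._next > self.MAX_STREAM_ID:
            raise RuntimeError("stream identifiers exhausted; open a new connection")
        stream_id = self._next
        self._next += 2
        return stream_id


client_ids = StreamIdAllocator(is_client=True)
print([client_ids.next_id() for _ in range(3)])  # [1, 3, 5]
```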

A new stream is initiated by sending a HEADERS frame, and each subsequent stream is started with a new HEADERS frame. If you have a lot of headers, you can send additional ones using CONTINUATION frames. The end of the headers is signified by setting the END_HEADERS bit in the Flags field of the frame. This bit signals that no more headers will arrive and that the stream is now “open” for DATA frames.
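Splitting a large header block across HEADERS and CONTINUATION frames can be sketched as follows. The sketch assumes the header block has already been compressed, so it is treated as opaque bytes, and it uses the default maximum frame size of 16,384 bytes. Only the final frame carries END_HEADERS. If the message has no body (such as a plain GET), END_STREAM goes on the HEADERS frame. The function names are hypothetical.

```python
import struct

HEADERS, CONTINUATION = 0x1, 0x9
END_STREAM, END_HEADERS = 0x1, 0x4


def frame(frame_type, flags, stream_id, payload):
    """Encode one frame: 9-byte header (24-bit length, type, flags, stream id) plus payload."""
    length = len(payload)
    return struct.pack("!BHBBI", length >> 16, length & 0xFFFF, frame_type, flags, stream_id) + payload


def header_block_frames(stream_id, header_block, max_frame_size=16384, end_stream=False):
    """Split an already-compressed header block into HEADERS + CONTINUATION frames."""
    chunks = [header_block[i:i + max_frame_size]
              for i in range(0, len(header_block), max_frame_size)] or [b""]
    frames = []
    for index, chunk in enumerate(chunks):
        frame_type = HEADERS if index == 0 else CONTINUATION
        flags = 0
        if index == 0 and end_stream:
            flags |= END_STREAM  # END_STREAM is only valid on the HEADERS frame
        if index == len(chunks) - 1:
            flags |= END_HEADERS  # the last frame closes the header block
        frames.append(frame(frame_type, flags, stream_id, chunk))
    return frames
```

For example, a 40,000-byte header block becomes one HEADERS frame and two CONTINUATION frames, with END_HEADERS set only on the third.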

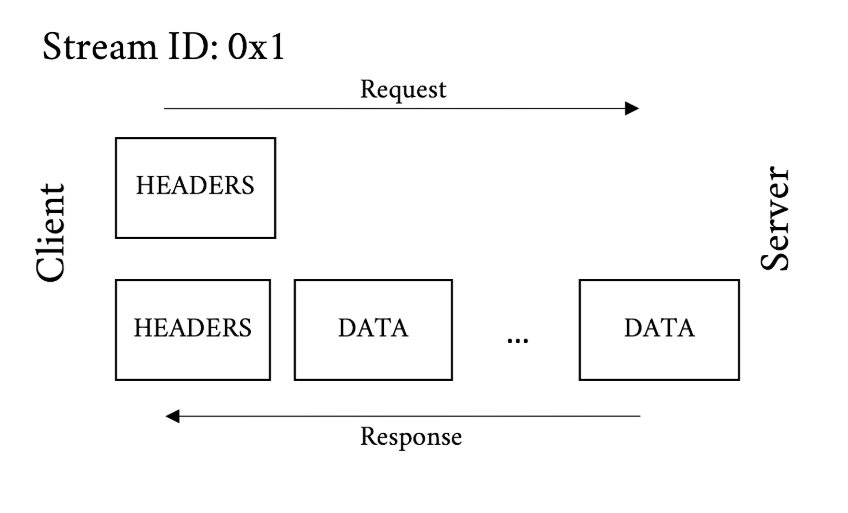

The following figure, from Learning HTTP/2, shows an h2 GET request. The request is part of a stream with identifier 0x1. The first message is an HTTP client request, consisting only of a HEADERS frame. The server receives this frame and replies with a response message, consisting of a HEADERS frame followed by DATA frames that make up the response payload.

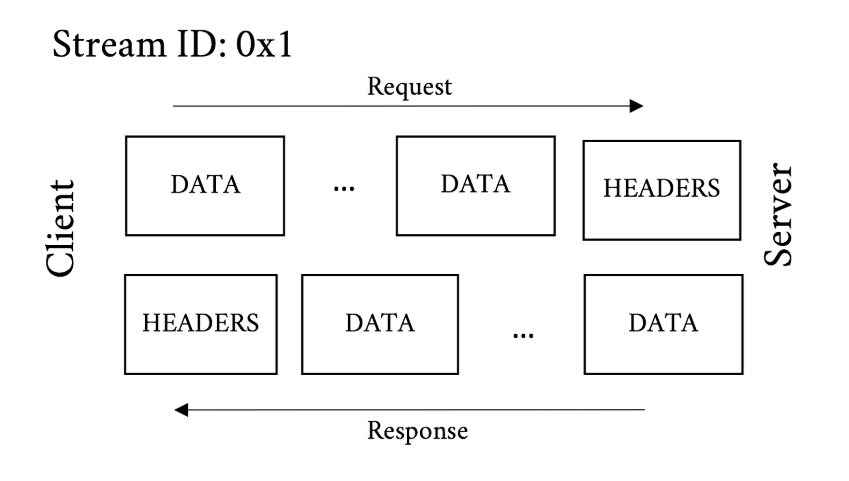

A POST request is similar. In the following figure, also from Learning HTTP/2, the initial request from the client to the server is composed of a HEADERS frame followed by several DATA frames that make up the POST request body. The response from the server is a HEADERS frame, followed by multiple DATA frames for the response payload.

Header Compression (HPACK)

Headers in h2 follow the same semantics as h1: a key with one or more values. In h1, each request is stateless, and a client must send the full set of HTTP headers with each request, even if that data has already been sent on previous requests. With h2, headers are sent once at the start of each stream rather than repeated within it. In addition, h2 introduces header compression, so that header values already sent on the connection do not have to be retransmitted in full.

The HPACK compression algorithm, developed alongside HTTP/2, securely compresses headers using a lookup table. For example, a client can send a HEADERS frame saying that the cookie header should have the value foo. Before sending the frame, the client adds this information to its local lookup table and records the index of that entry. Subsequent requests to the same server that include this header value can send the index into the lookup table instead of the entire header. As the server receives HEADERS frames, it builds up a copy of the same lookup table that exists on the client. When the server later receives a HEADERS frame containing an index, it looks up the correct header value in its own copy of the table.

This explanation of header compression is simplified; full details on HPACK are found in RFC 7541.
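The shared-table idea can be illustrated with a toy model. This is explicitly not the real HPACK wire format; `HeaderTable`, `toy_encode`, and `toy_decode` are hypothetical names. Like HPACK’s dynamic table, new entries go to the front, so the most recent entry has index 1. Real HPACK differs in several ways: it places a 61-entry static table before the dynamic one (so dynamic indices start at 62), evicts entries by size, and can Huffman-code literals.

```python
class HeaderTable:
    """A toy, HPACK-inspired lookup table shared by convention, never sent."""

    def __init__(self):
        self.entries = []  # (name, value) pairs, newest first

    def add(self, name, value):
        self.entries.insert(0, (name, value))

    def find(self, name, value):
        try:
            return self.entries.index((name, value)) + 1  # 1-based index
        except ValueError:
            return None

    def get(self, index):
        return self.entries[index - 1]


def toy_encode(headers, table):
    """Emit ('indexed', i) for known pairs, else ('literal', name, value) and remember it."""
    out = []
    for name, value in headers:
        index = table.find(name, value)
        if index is not None:
            out.append(("indexed", index))
        else:
            out.append(("literal", name, value))
            table.add(name, value)
    return out


def toy_decode(instructions, table):
    """Rebuild headers, updating the decoder's table exactly as the encoder did."""
    headers = []
    for instruction in instructions:
        if instruction[0] == "indexed":
            headers.append(table.get(instruction[1]))
        else:
            _, name, value = instruction
            headers.append((name, value))
            table.add(name, value)
    return headers


client_table, server_table = HeaderTable(), HeaderTable()
first = toy_encode([("cookie", "foo"), (":path", "/")], client_table)
toy_decode(first, server_table)
second = toy_encode([("cookie", "foo"), (":path", "/style.css")], client_table)
print(second)  # [('indexed', 2), ('literal', ':path', '/style.css')]
```

On the second request, the cookie travels as a single index. This works only because both sides applied the same table updates in the same order.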

Example: A full HTTP request/response

To illustrate how h2 is used in the real world, we can work through the example of sending an HTTP request and receiving an HTTP response. First, a client and server initiate a TLS connection over TCP/IP and negotiate the use of the h2 application protocol. Once this connection is established, HTTP messages (requests or responses) can be sent. An HTTP message (request or response) consists of:

- one HEADERS frame (followed by zero or more CONTINUATION frames) containing the message headers,

- zero or more DATA frames containing the payload body, and

- optionally, one HEADERS frame, followed by zero or more CONTINUATION frames, containing the trailer-part, if present.
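The message structure listed above is a small grammar, so it can be checked with a regular expression over one letter per frame. This is a simplified sketch: it considers only HEADERS, CONTINUATION, and DATA frames, and ignores flags such as END_HEADERS and END_STREAM. The names `FRAME_LETTERS` and `is_valid_message` are hypothetical.

```python
import re

# One letter per frame type; the pattern mirrors the list above:
# HEADERS CONTINUATION* DATA* (HEADERS CONTINUATION*)?
FRAME_LETTERS = {"HEADERS": "H", "CONTINUATION": "C", "DATA": "D"}
MESSAGE_PATTERN = re.compile(r"HC*D*(?:HC*)?")


def is_valid_message(frame_types):
    """Return True if the sequence of frame type names forms one HTTP message."""
    try:
        letters = "".join(FRAME_LETTERS[t] for t in frame_types)
    except KeyError:
        return False  # this sketch only models HEADERS, CONTINUATION and DATA
    return MESSAGE_PATTERN.fullmatch(letters) is not None


print(is_valid_message(["HEADERS"]))                   # True: a GET request
print(is_valid_message(["HEADERS", "DATA", "DATA"]))   # True: a POST or a response
print(is_valid_message(["DATA", "HEADERS"]))           # False: must start with HEADERS
```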

An HTTP request/response exchange fully consumes a single stream. An HTTP response is complete after the server sends – or the client receives – a frame with the END_STREAM flag set. This closes the stream, and any additional HTTP request/response exchanges take place on a new stream. This new stream shares the same TCP/IP connection.

Additional Features of HTTP/2

Flow control

Once you have multiple streams of data being sent over the same TCP/IP connection, you introduce the potential for contention. h2’s flow control policies are used to make sure that multiple streams on the same connection do not interfere with one another. Flow control is useful for congestion control, limited bandwidth connections, or making data chunks a manageable size. It can also be used by proxies to negotiate the best throughput given the current load on the proxy.

In h2, the receiver of data advertises how much data it is prepared to accept. Each stream, and the connection as a whole, starts with a flow control window of 65,535 bytes; the initial stream window can be changed with a SETTINGS frame. The receiver then sends WINDOW_UPDATE frames, either on a specific stream or on the connection, to grant the sender additional bytes. RFC 7540 specifies that senders MUST respect the flow control limits set by the data receiver. In this manner, clients, servers, proxies, and intermediaries each set their own flow control window based on the data they are ready to receive. Each of these components respects the limits imposed by the others whenever it sends data.
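The sender’s side of this bookkeeping can be sketched as follows. The model assumes that only DATA payloads count against the windows, as RFC 7540 specifies, and that a DATA frame is limited by both its stream’s window and the connection window. The class name `FlowControlledSender` is hypothetical, and `initial_window` stands in for the peer’s SETTINGS_INITIAL_WINDOW_SIZE. That setting changes only stream windows; the connection window always starts at 65,535 bytes.

```python
DEFAULT_INITIAL_WINDOW = 65_535  # RFC 7540 default for stream and connection windows


class FlowControlledSender:
    """Track the send windows a peer has granted (simplified model)."""

    def __init__(self, initial_window=DEFAULT_INITIAL_WINDOW):
        self.initial_window = initial_window  # applies to stream windows only
        self.connection_window = DEFAULT_INITIAL_WINDOW
        self.stream_windows = {}

    def sendable(self, stream_id):
        """Bytes of DATA that may be sent on stream_id right now."""
        stream_window = self.stream_windows.setdefault(stream_id, self.initial_window)
        return max(0, min(stream_window, self.connection_window))

    def send_data(self, stream_id, size):
        """Send as much as the windows allow; return the bytes actually sent."""
        allowed = min(size, self.sendable(stream_id))
        self.stream_windows[stream_id] -= allowed
        self.connection_window -= allowed
        return allowed  # the remainder waits for a WINDOW_UPDATE

    def window_update(self, stream_id, increment):
        """Apply a received WINDOW_UPDATE; stream 0 means the connection window."""
        if stream_id == 0:
            self.connection_window += increment
        else:
            self.stream_windows[stream_id] = (
                self.stream_windows.get(stream_id, self.initial_window) + increment
            )


sender = FlowControlledSender()
print(sender.send_data(1, 100_000))  # 65535: the rest must wait
print(sender.sendable(3))            # 0: the connection window is exhausted too
```

Keeping a separate connection-level window is what stops one busy stream from consuming the receiver’s entire buffer budget.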

Priority

Not all streams necessarily have the same priority. For example, when a browser opens a web page, it opens a stream to request the HTML, and then separate streams to request CSS, JavaScript, images, or anything else required to render the page. Some pages require 100 or more requests, and without further information the server will not know how to prioritize them. In h2, clients can specify explicit stream dependencies and weights that express the relative importance of individual streams. The server can use this information when deciding what to send first, although RFC 7540 treats it as advice rather than a guarantee of any particular order.

For example, an HTML page that depends on critical CSS and JavaScript can express those relationships as stream dependencies, using the HEADERS and PRIORITY frames to communicate them to the server. Upon receipt of this priority information, the server should send data to the client in the correct order.
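The weight arithmetic in RFC 7540’s priority scheme can be sketched as follows. Sibling streams split their parent’s share in proportion to their weights (1 to 256, default 16). The RFC’s own example has weights 4 and 12, giving one quarter and three quarters of the available resources. The RFC only gives a dependent stream resources when the stream it depends on is closed or cannot make progress. This sketch assumes every parent is in that state, so only streams without dependents receive bandwidth. The function name and tree representation are hypothetical.

```python
def bandwidth_shares(children, weights, parent=0, share=1.0):
    """Split bandwidth down an h2 priority tree (simplified).

    children maps a stream id to the streams that depend on it; stream 0 is
    the root. Assumes every stream with dependents is finished or blocked, so
    its share flows to its children in proportion to their weights.
    """
    kids = children.get(parent, [])
    if not kids:
        return {parent: share}
    total = sum(weights.get(kid, 16) for kid in kids)  # 16 is the default weight
    result = {}
    for kid in kids:
        kid_share = share * weights.get(kid, 16) / total
        result.update(bandwidth_shares(children, weights, kid, kid_share))
    return result


print(bandwidth_shares({0: [1, 3]}, {1: 4, 3: 12}))  # {1: 0.25, 3: 0.75}
```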

Server Push

In addition to prioritizing streams, h2 allows a server to push data to a client that the client hasn’t asked for yet. Returning to our example of loading a web page: if the server receives a request from a client for index.html, it can assume that the client will also request the CSS required to render the page. Rather than waiting for that explicit request, the server can choose to start sending the data. In an ideal scenario, when the client makes the request for the CSS, the response will already be available in the client’s cache. Server push requires that the promised requests MUST be cacheable (and safe, such as a GET).

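To push an object, the server sends a PUSH_PROMISE frame on the stream of the client’s original request. The frame carries the identifier of a new stream reserved for the pushed response (the promised stream ID), along with request header data similar to what would exist in a HEADERS frame. After the PUSH_PROMISE frame is sent, the server sends the pushed response on the promised stream, as a HEADERS frame followed by DATA frames.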
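Encoding an unpadded PUSH_PROMISE frame (type 0x5, RFC 7540 Section 6.6) can be sketched as follows. The payload begins with a reserved bit and the 31-bit promised stream ID, followed by the already-compressed promised request headers, which the sketch treats as opaque bytes. The function name and the validation rule are assumptions for illustration. Promises ride on a client-initiated (odd) stream and reserve a new server-initiated (even, non-zero) stream.

```python
import struct

PUSH_PROMISE = 0x5
END_HEADERS = 0x4


def push_promise_frame(associated_stream_id, promised_stream_id, header_block):
    """Encode an unpadded PUSH_PROMISE frame carrying a complete header block."""
    if associated_stream_id % 2 == 0 or promised_stream_id % 2 != 0 or promised_stream_id == 0:
        raise ValueError("promise on a client (odd) stream, reserving a new even stream")
    # Reserved bit (0) + 31-bit promised stream id, then the header block fragment.
    payload = struct.pack("!I", promised_stream_id & 0x7FFFFFFF) + header_block
    length = len(payload)
    # The frame itself travels on the stream of the original client request.
    header = struct.pack("!BHBBI", length >> 16, length & 0xFFFF,
                         PUSH_PROMISE, END_HEADERS, associated_stream_id)
    return header + payload
```

After sending this frame on stream 1, a server would send the pushed response’s HEADERS and DATA frames on stream 2.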

More References

This article provides an introduction to HTTP/2, and should provide a good overview for someone wanting to learn more about the protocol. If you want to go deeper, there are several great resources to choose from: