The transmission control protocol (TCP) does one job very well: it creates an abstraction that makes an unreliable channel look like a reliable network. For applications built over an unreliable network like the Internet, TCP is a godsend that hides a lot of the inherent complexity in building networked applications. A laundry list of TCP features that application developers rely on every day includes: retransmission of lost data, in-order data delivery, data integrity, and congestion control. This article provides an introduction to TCP, describing the structure of TCP segments, how TCP connections are established, and the algorithms that govern the flow of data between senders and receivers.

TCP Segments

TCP divides a stream of data into chunks, and then adds a TCP header to each chunk to create a TCP segment. A TCP segment consists of a header and a data section. The TCP header contains 10 mandatory fields, and an optional extension field. The payload data follows the header and contains the data for the application. The following figure from RFC 793 shows the format of a TCP segment, where each - represents one bit.

     0                   1                   2                   3
     0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |          Source Port          |       Destination Port        |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |                        Sequence Number                        |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |                    Acknowledgement Number                     |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |  Data |           |U|A|P|R|S|F|                               |
    | Offset| Reserved  |R|C|S|S|Y|I|            Window             |
    |       |           |G|K|H|T|N|N|                               |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |           Checksum            |         Urgent Pointer        |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |                    Options                    |    Padding    |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
    |                             Data                              |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

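- Source Port (16 bits): The port number of the host sending the segment (the client's port on segments sent by the client).

- Destination Port (16 bits): The port number of the host receiving the segment (the server's port on segments sent by the client).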

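- Sequence Number (32 bits): The sequence number of the first data byte in this segment, used to guarantee that data is delivered in order.

- Acknowledgement Number (32 bits): The next sequence number that the sender of this segment expects to receive, which tells the other side which TCP segments have arrived.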

- Data Offset (4 bits): The size of the TCP header in 32-bit words (i.e. where the data begins).

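- Reserved (3 bits): Set to zero. Reserved for future use.

- Flags (9 bits): Control bits that signal connection state and alter how the connection behaves. For example, the SYN flag is used to synchronize on sequence numbers, and the FIN flag is used to close the connection. The figure shows the six flags defined in RFC 793 (URG, ACK, PSH, RST, SYN, FIN) next to six reserved bits; RFC 3168 and RFC 3540 later took three of those reserved bits for additional flags related to explicit congestion notification. The full list of flags is available on Wikipedia.

- Window (16 bits): The receive window. The number of bytes that the sender of this segment is willing to receive. A key feature of TCP flow control.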

- Checksum (16 bits): A 16-bit checksum used for error-checking.

- Urgent Pointer (16 bits): If the sender sets the URG flag, then this 16-bit field is an offset from the sequence number indicating the last urgent data byte. Data received with the URG flag set is typically placed in a separate incoming buffer to be delivered to the application, and it is up to the application to use this data correctly. For example, FTP and Telnet use the URG flag when sending user commands so that the server can prioritize user commands over an in-progress data transfer.

- Options (Variable, 0–320 bits): Options are, as the name would suggest, optional. One example option is the Maximum Segment Size. By setting this option, a sender advertises the maximum size of segments that it can process. The host receiving this option must honour this value when sending segments.

- Padding (Variable): Zeroes. Padding ensures that the TCP header ends and data begins on a 32-bit boundary, so options and padding together always occupy a multiple of 32 bits.

- Data (Variable length): The application specific payload data for this TCP segment.
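To make the field layout concrete, here is a minimal Python sketch that unpacks the fixed 20-byte part of a header. The names `TcpHeader`, `parse_tcp_header`, `flag_names`, and `tcp_payload` are hypothetical helpers invented for this illustration, and the sketch assumes the 9-bit flag layout described in the list above. It does not verify the checksum or decode options.

```python
import struct
from typing import NamedTuple


class TcpHeader(NamedTuple):
    src_port: int
    dst_port: int
    seq: int
    ack: int
    data_offset: int     # header length in 32-bit words
    flags: int           # low 9 bits of the offset/flags word
    window: int
    checksum: int
    urgent_pointer: int


# Bit masks for the six flags shown in the RFC 793 figure.
FLAG_BITS = {"FIN": 0x001, "SYN": 0x002, "RST": 0x004,
             "PSH": 0x008, "ACK": 0x010, "URG": 0x020}


def parse_tcp_header(segment: bytes) -> TcpHeader:
    """Unpack the 10 mandatory fields from the first 20 bytes."""
    if len(segment) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    # "!" selects network (big-endian) byte order.
    src, dst, seq, ack, off_flags, window, checksum, urgent = struct.unpack(
        "!HHIIHHHH", segment[:20])
    return TcpHeader(src, dst, seq, ack,
                     off_flags >> 12,      # top 4 bits: data offset
                     off_flags & 0x1FF,    # bottom 9 bits: flags
                     window, checksum, urgent)


def flag_names(flags: int) -> list:
    """Return the names of the flags that are set."""
    return [name for name, bit in FLAG_BITS.items() if flags & bit]


def tcp_payload(segment: bytes) -> bytes:
    """Return the data section, which starts data_offset * 4 bytes in."""
    return segment[parse_tcp_header(segment).data_offset * 4:]
```

For example, a header packed with a data offset of 5 and only the SYN bit set parses back to `data_offset == 5` and `flag_names(...) == ["SYN"]`.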

Establishing a TCP Connection — The Three-Way Handshake

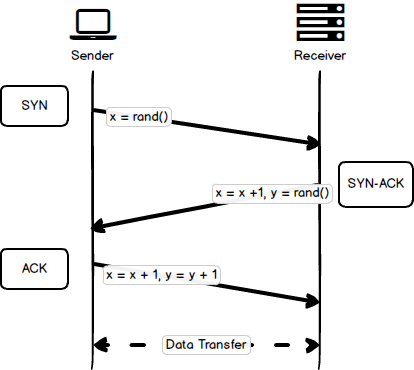

All TCP connections begin with a connection algorithm called the three-way handshake (because it requires three segments to be exchanged before the connection is established). During this handshake, the client and the server each choose a starting sequence number and acknowledge the other side's choice; these numbers are then used to guarantee the ordering of future packets. In point form, the handshake looks like this:

- SYN

  - The client picks a random sequence number \(x\).

  - The client sends a packet with the SYN flag set and the Sequence Number field set to \(x\).

- SYN-ACK

  - The server increments \(x\) by one to form its acknowledgement number.

  - The server picks a random sequence number \(y\).

  - The server sends a packet with the SYN and ACK flags set, the Sequence Number field set to \(y\), and the Acknowledgement Number field set to \(x + 1\).

- ACK

  - The client increments \(x\) and \(y\) by one.

  - The client sends a packet with the ACK flag set, the Sequence Number field set to \(x + 1\), and the Acknowledgement Number field set to \(y + 1\).

After the handshake is complete, a client can start sending data packets immediately.
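The numbers exchanged during the handshake can be sketched in a few lines of Python. This is an illustration only, assuming that a SYN consumes one sequence number, so each side acknowledges the peer's initial number plus one. The function `three_way_handshake` and its dictionary layout are hypothetical, not part of any real TCP stack.

```python
import random

SEQ_MODULUS = 2 ** 32  # sequence numbers are 32 bits wide and wrap around


def three_way_handshake(client_isn=None, server_isn=None):
    """Return the SYN, SYN-ACK, and ACK segments as simple dictionaries."""
    # Each side picks a random initial sequence number unless one is given.
    x = random.randrange(SEQ_MODULUS) if client_isn is None else client_isn
    y = random.randrange(SEQ_MODULUS) if server_isn is None else server_isn

    # The first SYN carries no acknowledgement, so "ack" is None here.
    syn = {"flags": {"SYN"}, "seq": x, "ack": None}
    syn_ack = {"flags": {"SYN", "ACK"}, "seq": y,
               "ack": (x + 1) % SEQ_MODULUS}
    ack = {"flags": {"ACK"}, "seq": (x + 1) % SEQ_MODULUS,
           "ack": (y + 1) % SEQ_MODULUS}
    return [syn, syn_ack, ack]
```

Calling `three_way_handshake(100, 300)` yields a SYN with sequence number 100, a SYN-ACK with sequence number 300 acknowledging 101, and a final ACK with sequence number 101 acknowledging 301.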

The following figure shows a successful three-way handshake. At the end of the handshake, the client begins sending application data.

Sending Data

After a TCP connection is established using the three-way handshake, TCP segments can be exchanged between the client and server. To guard against the failures introduced by the unreliable network, TCP uses sequence numbers to verify the correct delivery and ordering of TCP segments. The host on either side of a TCP session maintains a 32-bit sequence number it uses to keep track of how much data it has sent. This sequence number is included on each transmitted packet, and acknowledged by the opposite host as an acknowledgement number to inform the sending host that the transmitted data was received successfully.

A fundamental notion in the design is that every octet of data sent over a TCP connection has a sequence number. Since every octet is sequenced, each of them can be acknowledged.

More precisely, when a client sends a TCP segment with an acknowledgement number \(x\), it means that the client has correctly received all data up to and including sequence number \(x-1\). TCP uses this mechanism to perform straightforward duplicate detection of retransmitted segments. To do so, both sides of the connection keep track of a few variables:

- SND.UNA: oldest unacknowledged sequence number

- SND.NXT: next sequence number to be sent

- RCV.NXT: next sequence number expected on an incoming segment

- SEG.ACK: next sequence number expected by the receiving host

- SEG.SEQ: first sequence number of a segment

- SEG.LEN: the number of octets of data in the segment

- SEG.SEQ+SEG.LEN-1: last sequence number of a segment

When the sender creates a segment and transmits it, the sender advances SND.NXT. When the receiver accepts a segment it advances RCV.NXT and sends an acknowledgement. When the sender receives an acknowledgement it advances SND.UNA. The extent to which the values of these variables differ is a measure of the delay in the communication. If the data flow is momentarily idle and all data sent has been acknowledged then the three variables will be equal.
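The bookkeeping described above can be modelled with two small classes. This is a simplified sketch: the class and method names are hypothetical, only in-order segments are accepted, and 32-bit wraparound of sequence numbers is ignored.

```python
class Sender:
    def __init__(self, initial_seq: int):
        self.snd_una = initial_seq  # SND.UNA: oldest unacknowledged number
        self.snd_nxt = initial_seq  # SND.NXT: next number to be sent

    def send(self, data: bytes) -> dict:
        """Create a segment and advance SND.NXT by its length."""
        segment = {"seq": self.snd_nxt, "data": data}
        self.snd_nxt += len(data)
        return segment

    def on_ack(self, ack: int) -> None:
        """Advance SND.UNA when the ack covers new, already-sent data."""
        if self.snd_una < ack <= self.snd_nxt:
            self.snd_una = ack

    def bytes_in_flight(self) -> int:
        return self.snd_nxt - self.snd_una


class Receiver:
    def __init__(self, initial_seq: int):
        self.rcv_nxt = initial_seq  # RCV.NXT: next number expected

    def on_segment(self, segment: dict) -> int:
        """Accept an in-order segment and return the acknowledgement number."""
        if segment["seq"] == self.rcv_nxt:
            self.rcv_nxt += len(segment["data"])
        return self.rcv_nxt
```

After one segment has been sent, accepted, and acknowledged, `snd_una`, `snd_nxt`, and `rcv_nxt` all hold the same value, which matches the idle state described in the paragraph above.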

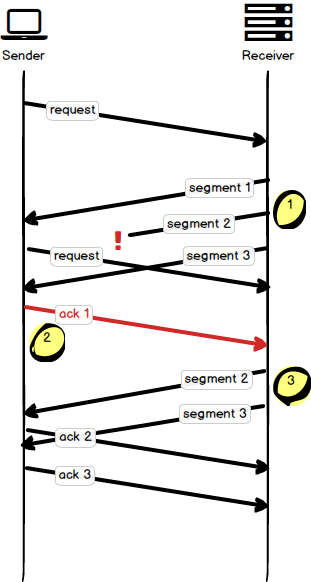

The following figure provides a visual example of data exchange between a client and server.

The client makes a request to the server for data, and the server creates a response that is divided into three TCP segments. Segment one is returned to the client without issue. Segment two (1) is dropped somewhere by the network, and segment three arrives without issue. The client, after receiving segment one, issues an acknowledgement for that segment. The client then receives segment three out of order, and sends a duplicate acknowledgement (2) for segment one to signal that it has not received data for segment two yet. The server responds to this duplicate acknowledgement (3) by resending both segments two and three, which are acknowledged by the client.

With this algorithm, the server has to send segment three twice, even though it was already delivered successfully. RFC 2018 improves the efficiency of TCP by allowing a client to report, as part of an acknowledgement, which data it has already received. The server uses this information to resend only the missing data.
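The difference between plain cumulative acknowledgements and selective acknowledgements can be sketched as follows. Both functions are hypothetical illustrations that assume equally sized segments identified by their starting sequence number, and selective-acknowledgement blocks given as `(left, right)` byte ranges with an exclusive right edge.

```python
def cumulative_acks(arrivals, segment_size, first_seq=0):
    """Return the acknowledgement number sent after each arriving segment."""
    expected = first_seq
    buffered = set()   # out-of-order segments waiting for a gap to fill
    acks = []
    for seq in arrivals:
        if seq >= expected:
            buffered.add(seq)
        # Deliver every buffered segment that is now in order.
        while expected in buffered:
            buffered.remove(expected)
            expected += segment_size
        acks.append(expected)
    return acks


def segments_to_resend(sent, cumulative_ack, sack_blocks=()):
    """Return the segments the sender still has to retransmit."""
    missing = []
    for seq in sent:
        if seq < cumulative_ack:
            continue  # covered by the cumulative acknowledgement
        if any(left <= seq < right for left, right in sack_blocks):
            continue  # reported as received out of order
        missing.append(seq)
    return missing
```

With three 100-byte segments starting at 0, 100, and 200, the arrival order 0, 200, 100 produces the acknowledgements 100, 100, 300: the repeated 100 is the duplicate acknowledgement. Without selective acknowledgements the sender resends segments 100 and 200; with the block `(200, 300)` reported, it resends only 100.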

Closing a Connection

To close a TCP connection, a sender transmits a packet with the FIN flag set, indicating that the sender has no more data it wishes to send. After receipt of a FIN segment the receiver should refuse any additional data from the host that sent the FIN. Closing a TCP connection is a one-way operation: both sides of the connection must choose to close independently. The side that closes first must continue to receive data until the other side also decides to close the connection.
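The one-way nature of closing is visible through the sockets API. The sketch below is an illustration that assumes a loopback interface is available: the hypothetical `half_close_demo` function starts a throwaway server on 127.0.0.1, and the client closes only its sending direction with `shutdown(SHUT_WR)` before reading the reply.

```python
import socket
import threading


def half_close_demo(payload: bytes) -> bytes:
    """Send payload, half-close, and return the server's upper-cased reply."""
    server = socket.create_server(("127.0.0.1", 0))  # port 0: any free port
    port = server.getsockname()[1]

    def serve():
        conn, _ = server.accept()
        with conn:
            received = bytearray()
            # recv() returns b"" once the client's FIN has arrived.
            while chunk := conn.recv(4096):
                received.extend(chunk)
            # The server's own sending direction is still open.
            conn.sendall(bytes(received).upper())

    thread = threading.Thread(target=serve)
    thread.start()

    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(payload)
        # Close only the sending direction; the client can still receive.
        client.shutdown(socket.SHUT_WR)
        reply = bytearray()
        while chunk := client.recv(4096):
            reply.extend(chunk)

    thread.join()
    server.close()
    return bytes(reply)
```

The client still receives the full reply after it has finished sending, because each direction of the connection is closed separately.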

Congestion Avoidance and Control

The basic TCP protocol as described was originally codified in 1981. In 1984, John Nagle of Ford Aerospace found some problems using TCP over wide-area networks and documented those issues in RFC 896 (later incorporated into RFC 1122 and RFC 6633).

In heavily loaded pure datagram networks with end to end retransmission, as switching nodes become congested, the round trip time through the net increases and the count of datagrams in transit within the net also increases. This is normal behavior under load. As long as there is only one copy of each datagram in transit, congestion is under control. Once retransmission of datagrams not yet delivered begins, there is potential for serious trouble.

— John Nagle, RFC 896

In other words, if the round-trip time between two nodes exceeds the maximum retransmission interval for a host, that host will resend the packet because it assumes that the original was lost. The effect is that more and more copies of the same data are sent into the network. Eventually the network's routers and switches are flooded with duplicate packets and drop most of the data being sent into the network. This problem was called “congestion collapse”, and is addressed by introducing flow control, congestion control, and congestion avoidance.

Flow Control

The Window field in a TCP segment is the number of bytes that the sender of this segment is willing to receive in a response. Each side of a TCP connection can control how much data it is willing to receive by setting this receive window. If a host is under heavy load, it can set the window to a low value to decrease pressure on itself. Alternatively, if the host is under light load and can process more information, it can advertise a high receive window indicating that it is ready to receive and process more information. A host can also advertise a receive window of zero, indicating that it cannot keep up and needs time to clear the data in its buffer. The receive window size is transmitted as part of every ACK packet, allowing both sides of a connection to adjust the amount of data they receive to match their processing capacity.

In the original TCP specification, the receive window was given 16 bits, which implies a maximum window size of \(2^{16} - 1\), or 65,535 bytes. This upper bound, though large at the time, doesn’t allow high bandwidth networks to reach optimal performance. RFC 1323 addresses this problem by introducing an option allowing clients and servers to scale the window size, reaching maximum window sizes of up to a gigabyte. During the three-way handshake, each node can set the window scaling option, which gives the number of bits by which to left-shift the 16-bit window value in subsequent segments.
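The arithmetic behind window scaling is a single left shift. The sketch below uses a hypothetical helper, `effective_window`, and assumes the maximum shift count of 14 that RFC 1323 permits.

```python
MAX_WINDOW_FIELD = 0xFFFF  # largest value the 16-bit Window field can hold
MAX_SHIFT = 14             # largest shift count allowed by RFC 1323


def effective_window(window_field: int, shift: int) -> int:
    """Return the receive window in bytes after applying the scale factor."""
    if not 0 <= window_field <= MAX_WINDOW_FIELD:
        raise ValueError("the Window field must fit in 16 bits")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("the shift count must be between 0 and 14")
    return window_field << shift
```

Without scaling, `effective_window(65535, 0)` is 65,535 bytes. With the maximum shift, `effective_window(65535, 14)` is 1,073,725,440 bytes, just under one gibibyte.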

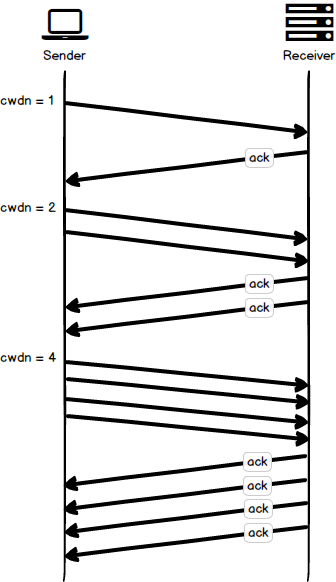

Slow Start

Flow control helps senders and receivers match bandwidth rates to avoid overloading one another, but there is still a possibility of overloading the underlying network that transmits packets between senders and receivers. Slow start, described in RFC 2581, forces TCP senders to set a congestion window variable limiting the amount of data a sender can push into the network before receiving an acknowledgement from a receiver. Slow start also prescribes an algorithm for senders to carefully probe the network to see how much data they should be sending.

At the beginning of a new network connection, there is no way for a sender to know the available bandwidth of the network. TCP Slow Start is conservative: it allows a sender to transmit only a small initial window, four TCP segments in this example, before receiving an acknowledgement. Once those segments are acknowledged, the sender can transmit eight TCP segments. This pattern continues so that for every acknowledged packet, two new packets can be sent, up to the receive window limit specified by the receiver. From this, we can derive a new rule for TCP senders: the maximum amount of unacknowledged data in flight is the minimum of the receive window and the congestion window.
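The doubling pattern and the minimum rule can be checked with a short simulation. This is a sketch under simplifying assumptions: windows are counted in whole segments rather than bytes, no packets are lost, and every segment sent in a round trip is acknowledged before the next round trip begins. The function name `slow_start_windows` is hypothetical.

```python
def slow_start_windows(initial_cwnd: int, rwnd: int, round_trips: int) -> list:
    """Return the number of segments in flight during each round trip."""
    cwnd = initial_cwnd
    windows = []
    for _ in range(round_trips):
        # The sender is limited by both the congestion and receive windows.
        in_flight = min(cwnd, rwnd)
        windows.append(in_flight)
        # Each acknowledged segment grows the congestion window by one.
        cwnd += in_flight
    return windows
```

With an initial congestion window of four segments and a receive window of 64 segments, `slow_start_windows(4, 64, 6)` gives 4, 8, 16, 32, 64, 64: the window doubles every round trip until the receive window caps it.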

The following figure shows the TCP Slow Start algorithm with an initial congestion window of one. After each successful roundtrip, the congestion window is doubled.

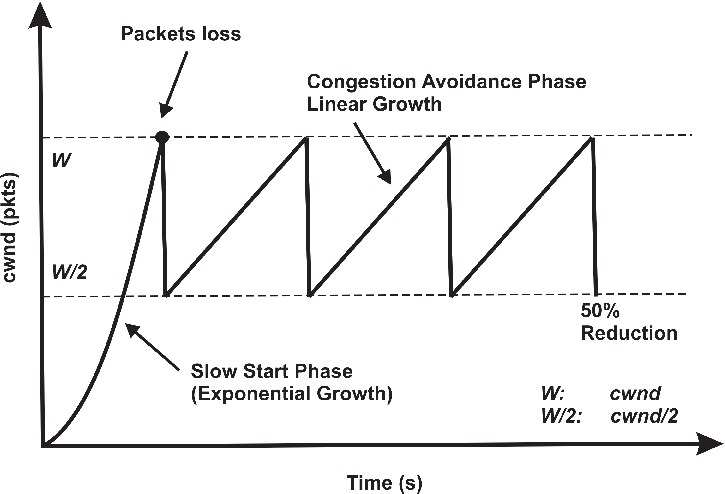

Congestion Avoidance

The TCP protocol can only ever estimate the bandwidth available in the underlying network, and must use packet loss and the congestion window variables to regulate packet flow. The slow start algorithm increases the amount of data sent to a receiver until either the receiver’s window is reached, or packet loss occurs. If packet loss occurs, the TCP congestion avoidance algorithm starts. Congestion avoidance is designed with the assumption that packet loss is an indicator of an overloaded network, and the solution is to limit the amount of data sent by resetting the congestion window variable that senders use to regulate data flow.

Once the congestion window is reset, congestion avoidance specifies its own algorithms for how to grow the window to minimize further loss. At a certain point, another packet loss event will occur, and the process will repeat.

When packet loss does occur, TCP undergoes a congestion detection phase, followed by a congestion avoidance phase. In the congestion detection phase, the congestion window is reduced, and in the congestion avoidance phase the congestion window is slowly increased. The original TCP algorithm specified a multiplicative decrease, halving the size of the congestion window when congestion is detected, followed by an additive increase that slowly grows the congestion window by one segment each round trip.
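The multiplicative decrease, additive increase pattern produces a characteristic sawtooth, which the following sketch reproduces. It is a simplified model that counts the window in whole segments, treats each list element as one round trip, and never lets the window fall below one segment. The function name `aimd` is hypothetical.

```python
def aimd(cwnd: int, loss_events) -> list:
    """Return the congestion window after each round trip.

    loss_events holds one boolean per round trip: True means packet
    loss was detected during that round trip.
    """
    history = []
    for lost in loss_events:
        if lost:
            cwnd = max(1, cwnd // 2)  # multiplicative decrease: halve
        else:
            cwnd += 1                 # additive increase: one segment
        history.append(cwnd)
    return history
```

Starting from a window of 16 segments, `aimd(16, [False, False, True, False, False, True, False])` gives 17, 18, 9, 10, 11, 5, 6: slow linear growth punctuated by sharp cuts at each loss.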

The following figure, from ResearchGate, shows the varying size of the congestion window in response to packet loss and the multiplicative decrease, additive increase algorithm.

The congestion detection and avoidance algorithm is a key feature of TCP, and its implementation has a large effect on network performance. In many cases, this algorithm is too conservative, and new algorithms have been developed (up to 13 at this point). Congestion detection and avoidance are still an area of ongoing research.

The Core Principles of TCP

TCP is a wonderful thing. It completes a difficult task in a way that is completely transparent to the application. And even though it is complex, the core principles can be easily explained. In particular, the TCP protocol can be distilled down to a few items:

- A three-way handshake establishes the parameters and settings for every new connection.

- Slow-start is applied to avoid overloading the network.

- Flow and congestion control regulate the connection throughput.

There are a lot of details in each individual item, but the essence of the protocol remains simple. TCP has a long history, and because it is a cornerstone of the internet, it is an area of continued interest and research, culminating in many RFCs and a few good books:

- TCP/IP Illustrated

- High Performance Browser Networking

- RFC 793 — Transmission Control Protocol

- RFC 896 — Congestion Control in IP/TCP Internetworks

- RFC 1122 — Requirements for Internet Hosts – Communication Layers

- RFC 1323 — TCP Extensions for High Performance

- RFC 2018 — TCP Selective Acknowledgment Options

- RFC 2581 — TCP Congestion Control

- RFC 3168 — The Addition of Explicit Congestion Notification (ECN) to IP

- RFC 3540 — Robust Explicit Congestion Notification (ECN) Signaling with Nonces

- RFC 6633 — Deprecation of ICMP Source Quench Messages

- RFC 6937 — Proportional Rate Reduction for TCP

- Transmission Control Protocol Segment Structure