Engineering, for much of the twentieth century, was mainly about artifacts and inventions. Now, it’s increasingly about complex systems. As the airplane taxis to the gate, you access the Internet and check email with your PDA, linking the communication and transportation systems. At home, you recharge your plug-in hybrid vehicle, linking transportation to the electricity grid. At work, you develop code, commit it to a repository, run test cases, deploy to production, and monitor the result. Today’s large-scale, highly complex systems converge, interact, and depend on each other in ways engineers of old could barely have imagined. As scale, scope, and complexity increase, engineers consider technical and social issues together in a highly integrated way as they design flexible, adaptable, robust systems that can be easily modified and reconfigured to satisfy changing requirements and new technological opportunities.

Understanding how we keep these complex systems running requires rethinking what we mean by a “system” in the first place. The framework used by David D. Woods, professor of Integrated Systems Engineering and author of Behind Human Error, can help us make sense of this problem by distinguishing between the system as perceived “above-the-line” and the reality of the artifacts that make up the system “below-the-line”.

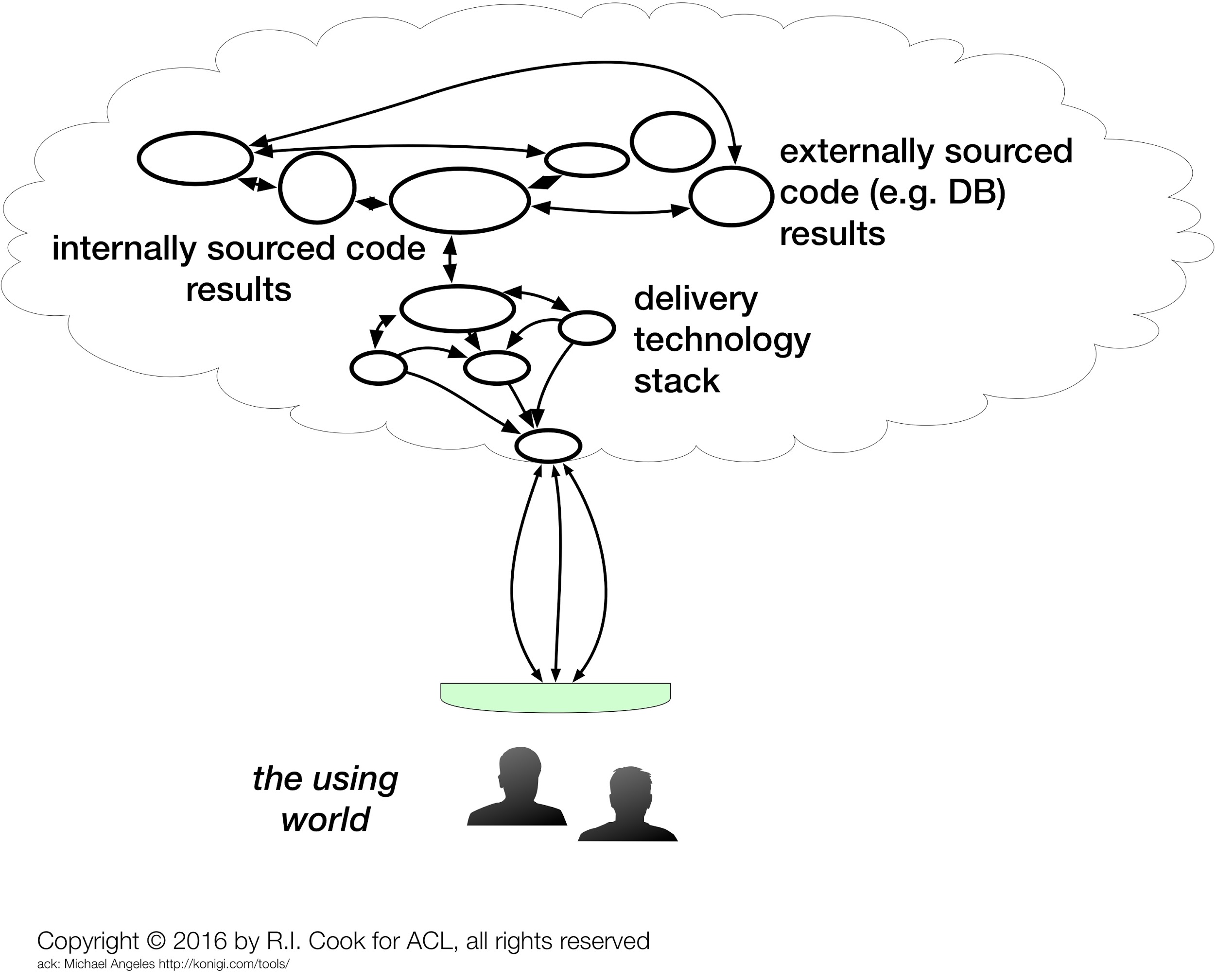

A naive engineer might consider “the system” to include solely the source code they write, build, and deploy to users. But this view is too simplistic. It doesn’t include the dependencies on third-party software or hardware such as databases, routers, and load balancers that are necessary to provide the full product or service to a customer. Figure 1 shows a more complete view of “the system”, including all of the components used by engineers, release managers, and site reliability experts, as well as the external components provided by third parties, that take software from code commit to usable in production.

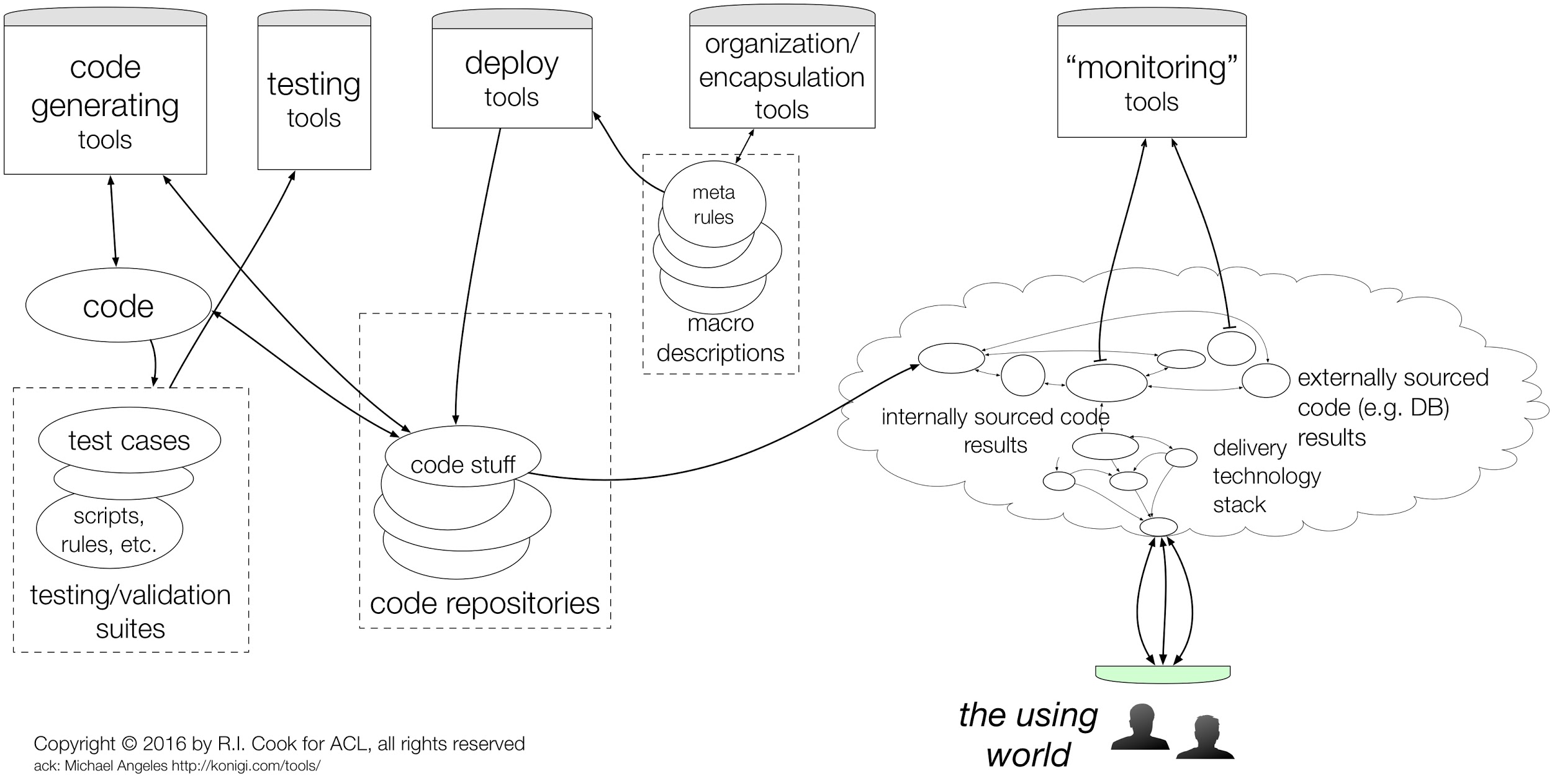

But even Figure 1 is too simplistic. Once a product or solution is deployed to customers, it requires further modification, maintenance, and updates. Figure 2 provides a more detailed view of the same system that includes the instrumentation, testing, and deployment tools required to change a running system to meet the changing requirements of customers. This more detailed view entirely contains Figure 1, but adds deployment tools, testing tools, and tools for monitoring, observability, telemetry, and alerting used to validate the behaviour of the product or service.

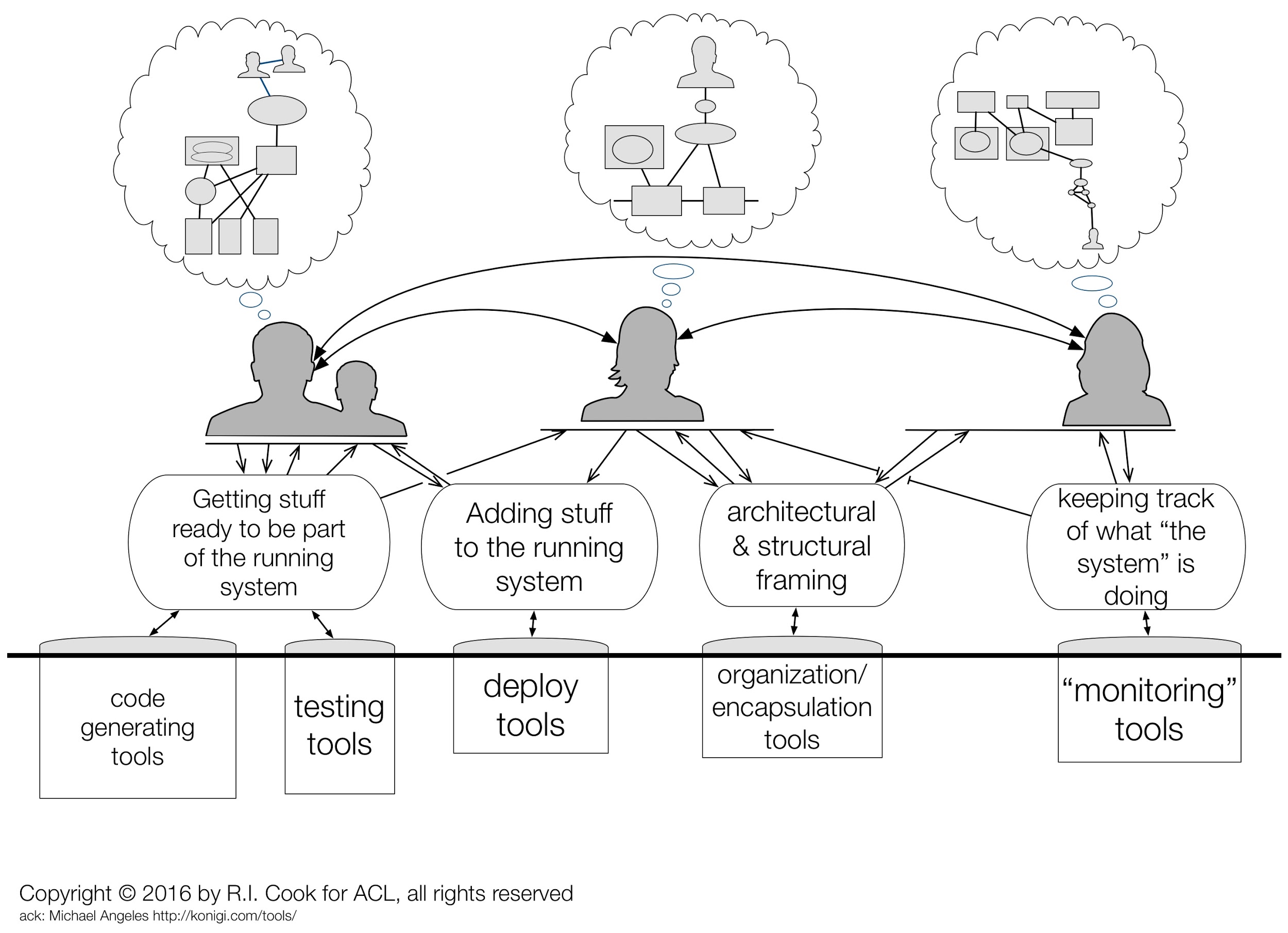

We can dive deeper. Figure 2 excludes the human element. All of the components in Figure 2 rely on people to build, maintain, repair, and operate them. These individuals do the mental work needed to track how the individual components and artifacts function and how they fail. They understand what is happening and what may happen next. These concepts are incorporated into a mental model that each person holds in their head as an internal representation of the system. The models held by the people in Figure 3 are similar to one another but not identical. As the world keeps changing, these representations become stale, and in a fast-changing world the effort each individual needs to keep their model up to date is daunting.

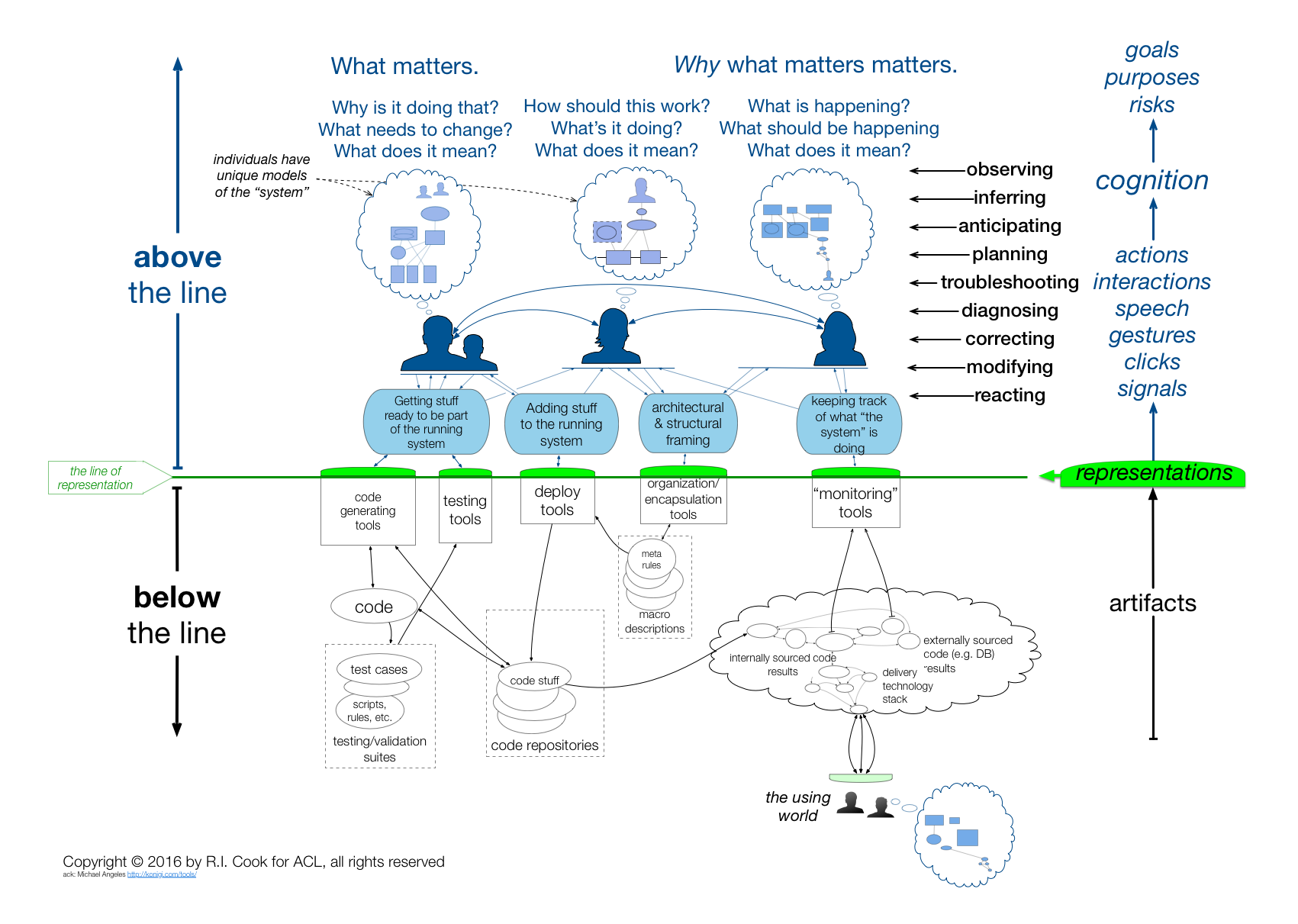

Lastly, we get to Figure 4 showing an even more inclusive view of the system. This view includes the people involved in creating, deploying, managing, changing, and operating the product or software and their mental models that allow them to reason about and perform their work.

The green line in this figure is the line of representation. The software and hardware “below-the-line” are the technical artifacts that cannot be seen or controlled directly by the people “above-the-line”. Instead, every interaction between people above-the-line and the running system below-the-line is mediated by screens, dashboards, keyboards, communication layers, and other interfaces. An important consequence is that the people interacting with a system are completely dependent on their mental model of it, and that model is shaped by the representation they can see, which may be incomplete, out of date, or just wrong.

This leads us to two broad observations presented at the SNAFUcatchers workshop on coping with complexity:

- Individuals in different roles must develop and maintain good enough mental models to comprehend and influence running systems.

- Individuals in different roles must develop and maintain a good understanding of each other’s mental models to communicate and cooperate.

As the complexity of a system increases, the accuracy of any single [person’s] model of that system decreases rapidly.

— Woods’ Theorem

To deal with operating complex systems, we need to bridge the gap between our mental models of a system and the system itself. During an anomaly or an outage, this can be characterized as a debugging exercise, the essence of which is being able to ask and answer questions that bring our mental model closer to the actual system under observation. Observations about the system can bridge the gap between the mental model of a developer or operations expert and the system itself. But, like the proverbial horse being led to water, observations can lead a developer to the answer but can’t make them find it. This process still requires a robust mental model, a good sense of intuition, appropriate tooling and processes, minimal tech-debt, resilient engineering, and effective incident response. Improving a complex system like distributed software requires a systemic effort that encompasses all aspects of building software: code, process, people, users, and everything in between.

Systems engineering combines engineering with perspectives from management, economics, and the social sciences in order to address the design and development of complex, large-scale, sociotechnical systems. Tools like a Design Structure Matrix provide a straightforward and flexible modeling technique that can be used for designing, developing, and managing complex systems, while the theory of Complex Adaptive Systems acknowledges that complex systems are dynamic and are able to adapt to and evolve with a changing environment. Modeling software as a complex adaptive system can help us understand how changes to this complex environment affect the development and delivery of software.
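To make the Design Structure Matrix idea a little more concrete, here is a minimal sketch in Python. The component names and their dependencies are hypothetical, chosen only for illustration, and the convention that a 1 in row i, column j means "component i depends on component j" is an assumption (the opposite convention is also in use). The helper `affected_by` is likewise an illustrative name; it walks the matrix to list everything that could be touched by a change to one component.

```python
# Hypothetical components and dependencies, chosen only for illustration.
COMPONENTS = ["code", "tests", "deploy", "monitoring", "database"]

# DEPENDS_ON[a] lists the components that `a` relies on directly (assumed).
DEPENDS_ON = {
    "code": ["database"],
    "tests": ["code"],
    "deploy": ["code", "tests"],
    "monitoring": ["deploy", "database"],
    "database": [],
}


def build_dsm(components, depends_on):
    """Build a binary matrix where dsm[i][j] == 1 means i depends on j."""
    index = {name: i for i, name in enumerate(components)}
    n = len(components)
    dsm = [[0] * n for _ in range(n)]
    for name, deps in depends_on.items():
        for dep in deps:
            dsm[index[name]][index[dep]] = 1
    return dsm


def affected_by(component, components, dsm):
    """List components that depend, directly or transitively, on `component`."""
    index = {name: i for i, name in enumerate(components)}
    seen = set()
    stack = [index[component]]
    while stack:
        col = stack.pop()
        # Every row with a 1 in this column depends on the column's component.
        for row in range(len(components)):
            if dsm[row][col] and row not in seen:
                seen.add(row)
                stack.append(row)
    return sorted(components[i] for i in seen)


dsm = build_dsm(COMPONENTS, DEPENDS_ON)
for name, row in zip(COMPONENTS, dsm):
    print(f"{name:<11}", row)

# Which parts of this toy system could a database change ripple into?
print(affected_by("database", COMPONENTS, dsm))
```

Even in this toy example, a change to one low-level component reaches almost everything else, which is the kind of interdependence a single person's mental model tends to underestimate.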

Resilience engineering strengthens a system’s ability to absorb changes and still exist, “allowing it to continue operations after a major mishap or in the presence of a continuous stress.” The four capabilities of resilience hint at how we can apply the theory of resilience engineering when designing our systems. These capabilities are the ability to:

- Respond to events: how to respond to regular and irregular disruptions and disturbances by adjusting normal functioning. This is the ability to address the actual.

- Monitor ongoing developments: how to monitor that which is or could become a threat in the near term. The monitoring must cover both that which happens in the environment and that which happens in the system itself, i.e., its own performance. This is the ability to address the critical.

- Anticipate future threats & opportunities: how to anticipate developments and threats further into the future, such as potential disruptions, pressures, and their consequences. This is the ability to address the potential.

- Learn from past failures and successes: how to learn from experience, in particular to learn the right lessons from the right experience. This is the ability to address the factual.

Although I don’t offer concrete action items today, I am intrigued by how two fields adjacent to software development, systems engineering and resilience engineering, can support software engineers and organizational leaders in building robust distributed systems. It is important to realize that there is no separation between a system and its environment. Rather, a system is closely linked with all other related components and systems. For example, a car has interdependent components that must all simultaneously serve their function in order for the system to work. The higher-order function, driving, derives from the interaction of the parts in a very specific way. Similarly, software can be viewed as a complex system requiring a systemic approach to design and implementation. The higher-order function, usable software, derives from the interaction of code, testing, deployment, monitoring, and the people, tools, and processes we use at each step. To solve problems in such a complex environment, we need to begin addressing the systemic problems of software with systemic solutions.

If a revolution destroys a government, but the systematic patterns of thought that produced the government are left intact, then those patterns will repeat themselves… There’s so much talk about the system. And so little understanding.

—Robert Pirsig, Zen and the Art of Motorcycle Maintenance